LHC experiments upgraded trigger plans

As the LHC prepares for higher energies and luminosities, all four experiments have upgraded their triggering to record data more efficiently and make sure that their detectors are ready to find evidence for new physics. The trigger teams from the experiments describe the changes made between Run 1 and Run 2 below.

ATLAS

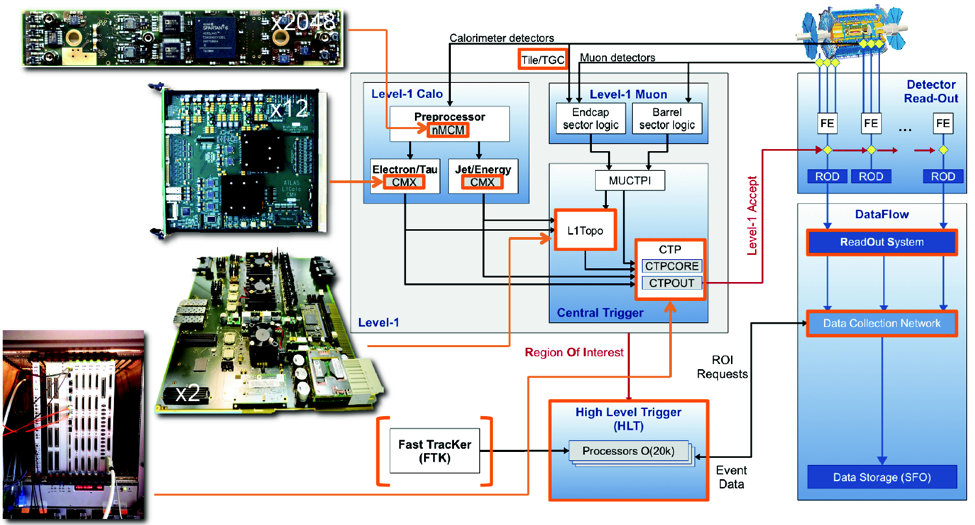

The trigger system is an essential component of any hadron collider experiment as it is responsible for deciding whether or not to keep a given collision for later study. In Run-1, the ATLAS trigger system operated efficiently at instantaneous luminosities of up to 8×10³³ cm⁻² s⁻¹ and collected more than three billion events used in more than 400 ATLAS publications. In Run-2, the increased collision energy, higher luminosity and higher pileup will exceed the trigger rate capabilities of the Run-1 trigger system. The long shutdown was therefore used to improve the trigger system, with almost no component left untouched. A schematic of the upgraded trigger and DAQ system can be seen in the accompanying figure.

The capabilities of the first-level, hardware-based trigger were improved in several ways. The preprocessing of calorimeter signals for the Level-1 Calo trigger was made more flexible by replacing a custom ASIC with a modern FPGA in the Preprocessor. This new hardware allowed the use of auto-correlation filters and a new bunch-by-bunch dynamic pedestal correction, both of which help suppress pileup effects. Additional inputs were added to the Level-1 Muon trigger. To suppress most of the fake muon triggers, the muon endcap trigger (1.05<|η|<2.4) now requires a coincidence with hits from either the innermost muon chamber or the outermost layer of the hadronic calorimeter. The trigger efficiency has been improved by up to 4% in the barrel region by adding chambers in the so-called feet region of the muon system. The output merger modules for the Level-1 Calo trigger (CMX) and the Level-1 Muon trigger (MuCTPi) were both upgraded to send all the trigger objects to a new topological trigger processor (L1Topo). The new L1Topo system enables the Level-1 trigger to combine kinematic information from multiple calorimeter and muon trigger objects. This new feature is used to trigger, for instance, on high-mass di-jet pairs from vector boson fusion processes or on di-muons with a limited opening angle from B-hadron decays. This new trigger suppresses backgrounds by more than a factor of two. The main part of the central trigger processor (CTP) was replaced to allow for additional inputs from L1Topo and to double the number of trigger selections that can be applied in parallel. The various upgrades in the L1 trigger hardware, as well as the detector readout, raised the maximum Level-1 trigger rate from 70 kHz in Run 1 to 100 kHz in Run 2.
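To give a feel for the kind of multi-object selection that L1Topo makes possible, the following Python sketch computes two typical kinematic quantities from pairs of trigger objects: the invariant mass of a di-jet pair and the angular separation of a di-muon pair. It is only an illustration. The function names and thresholds are hypothetical, the objects are treated as massless, the separation ΔR is used as a stand-in for the opening angle, and the real system is implemented in hardware rather than in Python.

```python
import math

# Hypothetical thresholds, chosen only for illustration (not ATLAS values).
MIN_DIJET_MASS_GEV = 400.0
MAX_DIMUON_DELTA_R = 1.5


def delta_phi(phi1, phi2):
    """Azimuthal separation wrapped into the range [0, pi]."""
    dphi = abs(phi1 - phi2) % (2.0 * math.pi)
    return 2.0 * math.pi - dphi if dphi > math.pi else dphi


def invariant_mass(pt1, eta1, phi1, pt2, eta2, phi2):
    """Invariant mass of two massless objects.

    Uses m^2 = 2 * pT1 * pT2 * (cosh(d_eta) - cos(d_phi)).
    """
    d_eta = eta1 - eta2
    d_phi = delta_phi(phi1, phi2)
    m2 = 2.0 * pt1 * pt2 * (math.cosh(d_eta) - math.cos(d_phi))
    return math.sqrt(max(m2, 0.0))


def delta_r(eta1, phi1, eta2, phi2):
    """Angular separation in the (eta, phi) plane."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


def passes_vbf_dijet(jet1, jet2):
    """Each jet is a (pt_gev, eta, phi) tuple."""
    return invariant_mass(*jet1, *jet2) > MIN_DIJET_MASS_GEV


def passes_close_dimuon(mu1, mu2):
    """Each muon is a (pt_gev, eta, phi) tuple; only the angles are used."""
    _, eta1, phi1 = mu1
    _, eta2, phi2 = mu2
    return delta_r(eta1, phi1, eta2, phi2) < MAX_DIMUON_DELTA_R


if __name__ == "__main__":
    forward_jets = ((50.0, 2.5, 0.0), (50.0, -2.5, math.pi))
    print("VBF-like di-jet accepted:", passes_vbf_dijet(*forward_jets))
```

Two jets in opposite forward regions give a large invariant mass even at modest transverse momentum, which is why a mass requirement is effective for vector boson fusion topologies.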

There have been major upgrades in the high-level trigger (HLT) as well. In Run-1 the ATLAS trigger system had distinct Level-2 and Event Filter farms. For Run-2, these farms were merged into a single farm running a unified HLT process that retains the on-demand data readout of the old Level-2 and, to a larger extent, uses offline-based algorithmic code from the Event Filter. This new system reduces code and algorithm duplication and results in a more flexible HLT. The majority of the trigger selections were re-optimized during the shutdown to minimize differences with offline analysis selections, which in some cases reduced the trigger inefficiencies by more than a factor of two. The HLT tracking algorithms have also been prepared for inclusion of a new, fast hardware-based tracking system (FTK), which will become operational during 2015. The average output rate of the HLT has been increased to 1 kHz.

The new ATLAS trigger system has been commissioned using cosmic ray data and early 13 TeV collisions. It works efficiently and most of the new trigger components are used for the current Run-2 data taking, rendering ATLAS ready to efficiently select events for Run 2.

The ALICE Trigger and Improvements for Run II

The ALICE Trigger system has to handle the very different environments of both proton-proton and lead-lead collisions at the CERN LHC. The system consists of the Central Trigger Processor (CTP) and 24 Local Trigger Units (LTU) that act as a uniform interface to sub-detector front-end electronics. The CTP generates three levels of hierarchical hardware triggers: L0, L1 and L2. After a successful L2 trigger, sub-detectors are read out and the event is sent to the High Level Trigger (HLT) for further processing and selection.

At any time, the 24 sub-detectors of the ALICE experiment are partitioned into up to 6 independent clusters. Trigger selection includes the Past-future Protection, a fully programmable hardware mechanism for controlling event pile-up. A trigger physics class is the basic processing structure throughout the CTP logic and represents a particular physics signature, with a defined set of trigger input requirements. The CTP allows for up to 50 of these independently programmable classes to be active at any given time.
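The idea of a trigger class as a set of input requirements tied to a detector cluster can be sketched in a few lines of Python. This is a simplified model for illustration only: the names `TriggerClass` and `TriggerConfig`, the bitmask encoding of inputs and the example class labels are assumptions, and features such as the Past-future Protection are left out. Only the limits of 50 classes and 6 clusters are taken from the text.

```python
from dataclasses import dataclass

MAX_CLASSES = 50   # from the text: up to 50 active classes
MAX_CLUSTERS = 6   # from the text: up to 6 detector clusters


@dataclass(frozen=True)
class TriggerClass:
    name: str             # hypothetical label for the physics signature
    required_inputs: int  # bitmask: every set bit is an input that must fire
    cluster: int          # index of the detector cluster to be read out


class TriggerConfig:
    """Holds the active classes and evaluates them against an input word."""

    def __init__(self):
        self.classes = []

    def add(self, trigger_class):
        if len(self.classes) >= MAX_CLASSES:
            raise ValueError("too many trigger classes")
        if not 0 <= trigger_class.cluster < MAX_CLUSTERS:
            raise ValueError("invalid cluster index")
        self.classes.append(trigger_class)

    def fired(self, input_word):
        """Return the names of classes whose required inputs are all set."""
        return [
            c.name
            for c in self.classes
            if (input_word & c.required_inputs) == c.required_inputs
        ]


if __name__ == "__main__":
    config = TriggerConfig()
    config.add(TriggerClass("min_bias", required_inputs=0b011, cluster=0))
    config.add(TriggerClass("muon", required_inputs=0b100, cluster=1))
    print(config.fired(0b111))
```

Because each class carries its own requirements and cluster, several signatures can be evaluated on the same input word independently of one another.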

The L0 trigger must reach time-critical sub-detectors within 1.2 μs after an interaction. The L1 trigger follows at 6.5 μs and, finally, the L2 trigger is delivered at 100 μs after an interaction. The latency of the L2 trigger is governed by the drift time of the TPC. The short latency for L0 requires the CTP to be as close as possible to the sub-detectors. Even so, the CTP must send the L0 signal within 100 ns of receiving the last L0 input in order to achieve the required latency.
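The latency figures above can be put into perspective with some simple arithmetic. The sketch below converts each latency into a number of bunch crossings and works out how much of the L0 budget is left once the CTP has taken its 100 ns. The 25 ns bunch spacing is an assumption (the nominal 40 MHz LHC clock), and the text does not say how the remaining time is shared between generating the trigger inputs and signal propagation.

```python
# Assumption: nominal 25 ns bunch spacing (40 MHz bunch-crossing clock).
BUNCH_SPACING_NS = 25.0

# Latencies quoted in the text, in nanoseconds after the interaction.
LATENCY_NS = {"L0": 1_200.0, "L1": 6_500.0, "L2": 100_000.0}

# Maximum CTP decision time for L0 quoted in the text.
CTP_L0_DECISION_NS = 100.0


def in_bunch_crossings(latency_ns):
    """Express a latency as a number of bunch-crossing intervals."""
    return latency_ns / BUNCH_SPACING_NS


def l0_budget_outside_ctp_ns():
    """Time left for everything other than the CTP decision itself."""
    return LATENCY_NS["L0"] - CTP_L0_DECISION_NS


if __name__ == "__main__":
    for level, latency in LATENCY_NS.items():
        print(level, latency, "ns =", in_bunch_crossings(latency), "crossings")
    print("L0 budget outside the CTP:", l0_budget_outside_ctp_ns(), "ns")
```

Under these assumptions the CTP decision accounts for less than a tenth of the L0 latency, which is consistent with the need to place the CTP close to the sub-detectors.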

The LTUs serve as an interface between the CTP and the sub-detector readout electronics. One of the features of the LTU is its ability to run in stand-alone mode, where it can fully emulate the CTP protocol. This mode has proved to be essential for sub-detector development and testing.

For Run 2, the current L0 CTP board has been replaced by a new LM/L0 board. This new LM/L0 board incorporates a new pre-trigger (which is issued before the L0 trigger), increases the number of trigger physics classes from 50 to 100, increases the number of detector clusters from 6 to 8, and significantly increases the number of allowable trigger inputs. The improved trigger is working well and gives ALICE improved efficiency and more flexibility for the exciting physics potential of Run 2.

Developments in the LHCb trigger strategy

The LHCb trigger has been significantly improved between Run 1 and Run 2 – the detector is now calibrated in real time, allowing the best possible event reconstruction in the trigger, with the same performance as the Run 1 offline reconstruction.

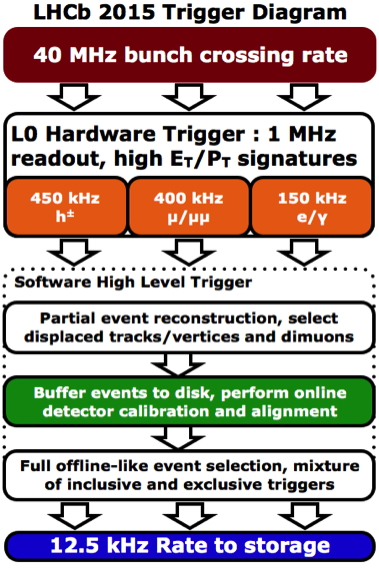

The trigger (see schematic) consists of two stages: a hardware trigger, reducing the 40 MHz bunch-crossing rate to 1 MHz, and “high-level” software triggers (“HLT1” and “HLT2”). The capacity of the machines running the HLT has roughly doubled since Run 1.

In HLT1 a quick reconstruction is performed before further event selection. Dedicated inclusive triggers for heavy flavour physics use multivariate approaches. An inclusive muon sample is also selected, and exclusive lines select specific decays. HLT1 typically takes 35 ms/event, writing out events at about 150 kHz.
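The HLT1 timing figure gives a rough idea of the computing capacity involved. By Little's law, the average number of events being processed at once equals the input rate multiplied by the time spent per event. The sketch below applies this, assuming that HLT1 receives the full 1 MHz output of the hardware trigger and that 35 ms is the wall-clock time one process spends on one event; neither assumption is stated explicitly in the text.

```python
# Figures quoted in the text.
HLT1_INPUT_RATE_HZ = 1_000_000   # assumed equal to the hardware trigger output
HLT1_TIME_PER_EVENT_S = 0.035    # 35 ms per event


def concurrent_events(rate_hz, seconds_per_event):
    """Little's law: average events in flight = arrival rate x time per event."""
    return rate_hz * seconds_per_event


if __name__ == "__main__":
    in_flight = concurrent_events(HLT1_INPUT_RATE_HZ, HLT1_TIME_PER_EVENT_S)
    print(f"Events processed concurrently: about {in_flight:,.0f}")
```

Under these assumptions, on the order of 35,000 events must be in processing at any moment to keep up with the input rate.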

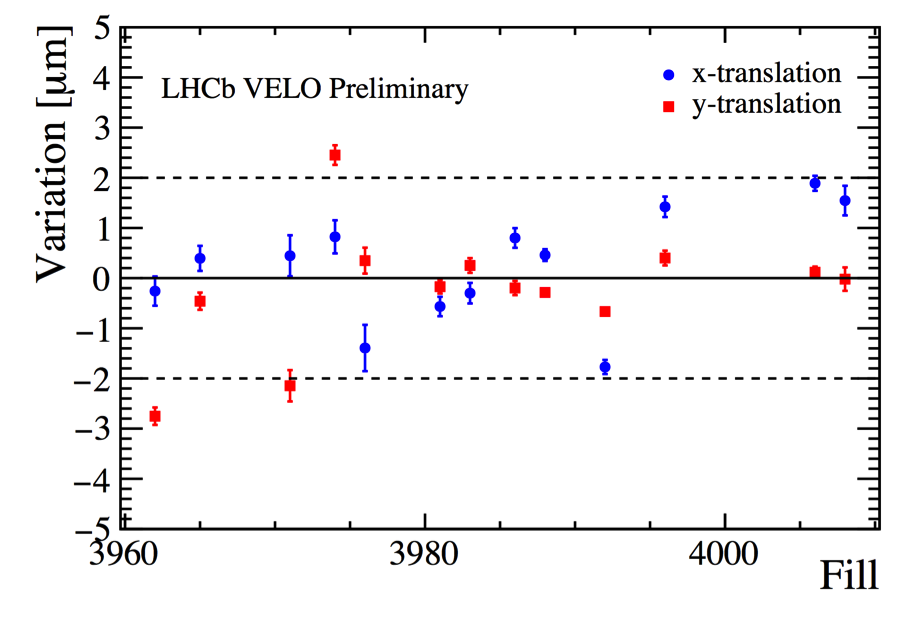

In Run 1, 20% of events were deferred and processed with the HLT between fills. For Run 2, all events passing HLT1 are deferred whilst a real-time alignment is run, minimising the time spent using suboptimal conditions. The spatial alignments of the vertex detector (“VELO”) and tracker systems are evaluated in a few minutes at the beginning of each fill. The VELO is reinserted for stable collisions in each fill, so the alignment could vary fill-by-fill (the variation is shown in the figure for the first Run 2 fills). In addition, the calibrations of the Cherenkov detectors and the Outer Tracker are evaluated for each run. The quality of the calibration allows the offline performance (including the offline track reconstruction) to be replicated in the trigger, reducing systematic uncertainties on LHCb results.

The second stage of the software trigger, HLT2, writes out events for offline storage at about 12.5 kHz (compared to 5 kHz in Run 1). There are nearly 400 trigger lines: beauty decays are typically found using multivariate analysis of displaced vertices. There is also an inclusive trigger for D* decays, and many lines for specific decays. Events containing leptons with significant transverse momentum are also selected.
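Putting the quoted rates side by side shows how much each stage of the LHCb trigger reduces the data flow. The short sketch below computes the reduction factor per stage and overall, using only the rates given in the text; the stage labels and function names are illustrative.

```python
# Rates quoted in the text, in Hz, in the order the data flows.
STAGES = [
    ("bunch crossings", 40_000_000),
    ("hardware trigger", 1_000_000),
    ("HLT1", 150_000),
    ("HLT2", 12_500),
]

RUN1_HLT2_OUTPUT_HZ = 5_000  # Run 1 output rate quoted in the text


def reduction_factors(stages):
    """Return (stage name, input rate / output rate) for each trigger stage."""
    return [
        (stages[i][0], stages[i - 1][1] / stages[i][1])
        for i in range(1, len(stages))
    ]


def overall_reduction(stages):
    """Ratio of the first rate in the chain to the last."""
    return stages[0][1] / stages[-1][1]


if __name__ == "__main__":
    for name, factor in reduction_factors(STAGES):
        print(f"{name}: rate reduced by a factor of {factor:.1f}")
    print(f"Overall reduction: {overall_reduction(STAGES):.0f}")
    print(f"Output rate vs Run 1: x{STAGES[-1][1] / RUN1_HLT2_OUTPUT_HZ:.1f}")
```

With these numbers, one bunch crossing in 3,200 is written to offline storage, and the output rate is 2.5 times that of Run 1.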

A new trigger stream (the “turbo” stream) allows candidates to be written out without further processing. Raw event data is not stored for these candidates, reducing disk usage. All this enables very quick data analysis: LHCb has already used data from this stream for a preliminary measurement of the J/ψ cross-section in √s = 13 TeV collisions.

The LHCb trigger now allows event selection at a higher rate and with better information than in Run 1: a significant advantage in the hunt for new physics in Run 2.

The author would like to thank David Evans (ALICE), Brian Petersen (ATLAS) and William James Barter and Vladimir Gligorov (LHCb) for their valuable contributions on which this article is based.