Interview with René BRUN

Rene joined CERN in 1973. While working with C. Rubbia at the ISR, he developed the HBOOK package, which is still in use today. In 1975 he was in charge of the simulation and reconstruction software for NA4, where GEANT1 and GEANT2 were created. In 1981 he joined OPAL at LEP, creating the GEANT3 detector simulation system and pioneering the introduction in Europe of the first workstations, such as the Apollos. In 1984 he coordinated the development of the PAW (Physics Analysis Workstation) data analysis system, and until 1994 he was in charge of the Application Software group in the Computing Division. In 1995 he created the ROOT system while working for the NA49 heavy ion experiment at the SPS. Rene led the ROOT project from 1995 to 2011. He is still active: following his recent retirement, he now has the chance to work on his own project on physics models.

How did you start your career at CERN?

R.B. The first time I came to CERN was in June 1971, as a summer student. I worked on the SC33 nuclear physics experiment at CERN, and between 1971 and 1973 I pursued my thesis on this experiment. This was a very intense period of my life. Every other Friday, after my classes, I would catch a train from Clermont-Ferrand, where my home university was, to Geneva, and then a bus from Cornavin to CERN, where I would spend the whole weekend working in front of a computer until Monday morning, when I headed back to Clermont-Ferrand.

Once my thesis was completed, in July 1973, I was hired by the DD division to develop special hardware processors. This was in the context of the ISR experiment R602, and the idea was to do the full reconstruction of data online. However, this system never worked well, and after three months I started developing the same thing in software, including a histogram package called HBOOK, which was a big success and was soon used by other experiments. In fact, after almost forty years, people in different groups are still using this software, which I think is remarkable.

In 1974, Carlo Rubbia asked me to join the NA4 experiment, a deep inelastic muon scattering experiment in the North Area. I worked on simulation and reconstruction, both online and offline, using the NORSK-DATA50 and CDC7600 machines for the reconstruction. During that period, between 1974 and 1978, we developed GEANT1 and GEANT2, while at the same time extending the HBOOK system with more features.

When did you come up with the GEANT3 software and the development of the PAW system?

R.B. Following my work in NA4, I joined OPAL in 1980. That was when we launched the idea of the GEANT3 package, which is still used by ALICE and many other experiments. The main attraction of the new GEANT3 was the geometry package. From my past experience in NA3, NA4, Omega and various other experiments, I realized the need for a geometry package that would describe each of the detectors of the experiment in the software. In GEANT1 and GEANT2 we had to modify the code, adding numerous "if" statements, each time the detector changed, and that was a nightmare both for the computing specialists and for the physicists who were using the simulation package.
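To illustrate the difference between hard-coded detector logic and a data-driven geometry description, here is a minimal Python sketch. It is not GEANT3 code: the detector is reduced to hypothetical concentric regions identified only by radius, and the names `Volume`, `DETECTOR`, `region_hardcoded` and `locate` are invented for the example. The first function encodes the layout in `if` branches, as in the GEANT1/GEANT2 era; the second reads the same layout from a table of volumes.

```python
from dataclasses import dataclass

# Hypothetical illustration only: not the GEANT3 API or data structures.


def region_hardcoded(r_cm):
    """Old style: the detector layout lives in the code itself.
    Every change to the detector means editing these branches."""
    if r_cm < 5.0:
        return "beam pipe"
    elif r_cm < 30.0:
        return "tracker"
    elif r_cm < 150.0:
        return "calorimeter"
    return "outside"


@dataclass(frozen=True)
class Volume:
    """One (hypothetical) detector region, bounded by two radii in cm."""
    name: str
    r_min: float
    r_max: float


# Geometry as data: the detector description is a table, not code.
DETECTOR = [
    Volume("beam pipe", 0.0, 5.0),
    Volume("tracker", 5.0, 30.0),
    Volume("calorimeter", 30.0, 150.0),
]


def locate(volumes, r_cm):
    """Generic lookup: return the name of the volume containing radius r_cm.
    This function never changes when the detector layout changes."""
    for v in volumes:
        if v.r_min <= r_cm < v.r_max:
            return v.name
    return "outside"
```

With the table-driven version, adding a new detector layer means appending a `Volume` to the list while `locate` stays untouched. A real geometry package handles nested 3D shapes, rotations and much more; the sketch shows only the design principle.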

I was fortunate to meet Andy MacPherson who wrote the algorithms for the geometry while I developed the infrastructure. GEANT3 developed significantly between 1980 and 1983 and was initially used by all LEP experiments except DELPHI and later by other experiments around the globe.

Following the success of GEANT3, we started the development of the Physics Analysis Workstation package (PAW), an interactive data analysis system. You have to bear in mind that in 1982 we had the first Apollo workstations, which allowed a fast development cycle and a nice user interface, and that's how we came up with the idea of developing PAW.

The first versions of PAW included a data structure called the ntuple, which was becoming increasingly popular. The original ntuples were so-called row-wise ntuples: simple tables, like those in relational databases. We rapidly discovered that this format would not scale to larger data volumes. In 1987 we introduced column-wise ntuples, which allowed both efficient processing of larger data volumes and rapid access to a subset of the columns. PAW became the standard data analysis system of the LEP era, and was also widely used in the HEP and NP communities.
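The advantage of the column-wise layout can be sketched in a few lines of Python. This is not PAW's actual file format: the variable names in `COLUMNS` are hypothetical, and counting "values read" is a simplified cost model standing in for real disk I/O. In row-wise storage each record is stored contiguously, so reading one variable still means reading every field of every row; in column-wise storage each variable has its own contiguous block, so only the requested column is read.

```python
# Hypothetical sketch of row-wise vs column-wise ntuple layout.
# Not PAW's file format; "values read" is a simplified stand-in for I/O cost.

COLUMNS = ("px", "py", "pz", "energy")  # hypothetical ntuple variables

# Row-wise storage: one tuple (record) per event.
rows = [
    (1.0, 2.0, 3.0, 4.0),
    (0.5, 0.1, 2.0, 2.2),
    (3.0, 1.0, 0.0, 3.3),
]


def to_column_wise(rows):
    """Transpose row records into one list per variable."""
    return {name: [row[i] for row in rows] for i, name in enumerate(COLUMNS)}


def read_row_wise(rows, name):
    """Return (values, number_of_values_read) from row-wise storage."""
    i = COLUMNS.index(name)
    n_read = 0
    values = []
    for row in rows:
        n_read += len(row)  # the whole record is read to extract one field
        values.append(row[i])
    return values, n_read


def read_column_wise(columns, name):
    """Return (values, number_of_values_read) from column-wise storage."""
    values = columns[name]  # only this variable's block is read
    return list(values), len(values)
```

For an analysis that touches 1 variable out of 4, the row-wise reader reads four times as many values as the column-wise one; with hundreds of variables per event and millions of events, that ratio is what made column-wise storage necessary.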

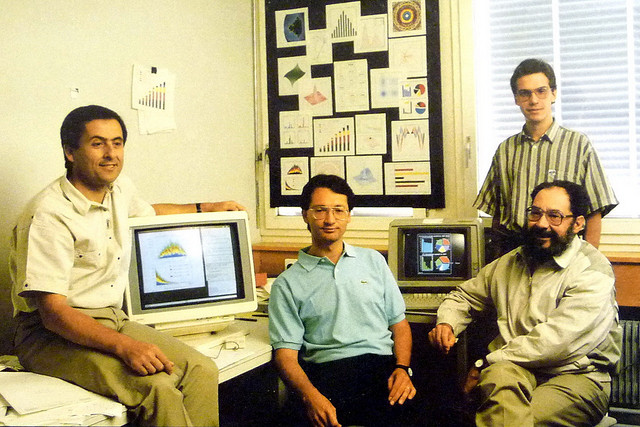

Rene Brun and the PAW team at CERN in 1989

When was the first time that you were involved in developing software for the LHC experiments?

R.B. From 1987 I was deeply involved, with GEANT3, in the simulation of the SSC detectors GEM and SDC.

We were adding more and more features to the geometry and physics packages. Between 1991 and 1994 I contributed to the simulation of ATLAS and CMS, and I still remember very well the first time we were able to show a complete simulated event in these detectors.

Between 1990 and 1992 I had Tim Berners-Lee in my group, developing the first basic Web services on his very advanced NeXT workstation using object-oriented software. At the same time, many discussions were going on about the future of HEP software. Some proposed continuing with Fortran, others embarking on new languages like Eiffel, and others stopping the development of in-house products and buying commercial systems.

In 1993 I proposed an evolutionary model for the LHC software, in which the GEANT3 geometry sub-system would be replaced by an object-oriented implementation, and everything managed by our good old ZEBRA data structure and input/output system would be replaced by a ZOO system supporting the OO model. ZEBRA was at the heart of GEANT3, and with PAW working very well, I thought it was wise to capitalize on things that worked, replacing or upgrading the main components one by one. However, this proposal was rejected at the end of 1993.

Did you understand why your proposal was rejected?

R.B. For a few months it had been becoming clear that the SSC was in big trouble, and it was stopped in early 1994. Many young, less experienced people became free to investigate alternative lines that looked more appealing than the proposals of the old faces. The general trend was that we should stop developing in-house products like PAW. In particular, for databases, many people supported the idea of using commercial systems to handle the large amounts of data expected from the operation of the LHC. There were many candidates at that time, like O2, ObjectStore and Objectivity. A lot of time was spent drawing bubble diagrams and no code was written. Very unclear milestones were presented. The fact that the management was not familiar with the new techniques did not help. Sometimes there is a confusing gap between the hopes generated by a new technology and the lack of experience in managing a new project based on it.

How did you decide to return and get involved in software development for the LHC experiments?

R.B. This was a difficult period, and I decided that I should spend some time on a different project in order to think more clearly about my next steps. A new experiment, NA49, seemed like a great chance for me, since it was based in Prévessin and could offer me the peaceful environment I needed. As you may know, NA49 was a heavy ion experiment, and they were looking for someone to develop the software for their analysis. At that time I couldn't have asked for a better opportunity. I felt that many people were ready to move from FORTRAN to C++, a language new to most of them. If I may say so, one could feel a "spirit" gradually building in favour of this step. Since my original proposal had been rejected, I had some time to practice and understand in very practical terms the implications of moving to a new language and of object orientation. In these conditions, you must ask yourself how best to rethink a proposal as you gain more and more experience with the new technologies.

In 1995, after a lot of prototyping, I was ready to launch the ROOT project. I was lucky to bring Fons Rademakers on board for this adventure; we had collaborated for many years on the PAW project. During 1995 we worked very hard to develop an interesting prototype, which we demonstrated in November 1995. At the same time, we were witnessing an increasing number of problems in the alternative, official development lines. I was immediately convinced that the Objectivity solution could not work, because it had no support for automatic class schema evolution or for dumping objects from memory to disk. It remains a big mystery to me why the Objectivity line was not stopped earlier. But you know, once you are convinced that your competitors' solution cannot work, it multiplies your energy by several orders of magnitude.

We worked really hard on this project from 1995 to 1997, although we had the entire world against us! However, we were lucky, since in 1998 Fermilab published a call for proposals for new software that could be used by its new experiments. In particular, they were looking for proposals that would address the problems they had had in the past with their databases and interactive data analysis. I recall that they came up with a long list of detailed questions, which we had to answer in order to demonstrate that our software met the physics and technical requirements of their experiments. We submitted a proposal, and in September 1998, during the CHEP conference in Chicago, they announced that ROOT had been chosen.

This decision surprised everybody and in a sense "forced" the CERN management to provide us with more support in developing the ROOT software. In the beginning we got two fellows working in our team, and then gradually we had up to 12 people working on the project. ROOT finally became the standard package for all the LHC experiments in 2006-2007.

The ROOT team in 1997: Fons Rademakers, Valery Fine, Masaharu Goto, René Brun and Nenad Buncic.

What are the main future challenges for ROOT?

R.B. After spending 20 years of my career on the development of ROOT, I recently handed control of ROOT over to Fons Rademakers. Within the project, Axel Naumann has the important responsibility of developing a new interpreter, CLING, which will replace the current CINT interpreter. CLING is being developed in collaboration with large companies like Apple and Google. CLING will move us to the new C++11 standard and will also provide many features making ROOT even better and faster. We are still left with many challenges in the I/O subsystem, where efficient use of parallel architectures is becoming a must. It was my firm decision that I shouldn't interfere with the development of the new project and that new people should bring fresh ideas.

However, let me take this opportunity to share two thoughts that I often repeat to my colleagues. I think the main challenges in working with long-established software like ROOT are the large inertia that has built up and the large amount of data: when you are dealing with petabytes of data, you need to think twice before changing anything in the system.

The main goal is to do physics, not computing. Especially with a successful program like ROOT, someone could spend his entire professional life developing it, and he might then feel uncomfortable proposing a different approach or developing new software. I think this may be a danger. I keep telling people that they should be encouraged to spend a small fraction of their time investigating problems outside their work area. For example, some people are more interested in statistics, others in graphical interfaces or other fields, and they should all have some freedom to work in these fields or even to start a new project.

Finally, another big challenge, which you are probably aware of, is parallelism. We need to be very fast and determined, because otherwise the price we have to pay for this change will only become higher. This requires a lot of development and many changes of philosophy. In particular, the software for transporting particles through the layers of a detector must be completely rethought to really take advantage of parallel architectures.

Did you name ROOT? How did you come up with this name?

R.B. The choice of a name for a project is important. In 1995 we were entering the era of object-oriented programming, so "OO" seemed a must. The R was a signature combining the two main authors, Rene and Rdm (Rdm being the short form of Fons's email address), so the name meant Rene and Rdm OO Technology. However, the name also meant that the system was designed to be the base framework (the roots of a tree) for a lot more software to come, including the experiments' software.

What are the main factors that drove ROOT's success all these years?

R.B. First of all, I think it was hard work and our dedication to what we were developing at that time, despite the difficult moments that I, like many people, encountered in my career.

Secondly, I think a very important point was the immediate support we provided to users. I often say that if you answer a question within one hour you get ten points, after one day only one point, and after that minus ten points. I knew from experience that an instantaneous-response philosophy was vital for the success of a project. This was not necessarily the case for the other competing, official projects. Even after 18 years of managing the ROOT project, I sometimes had to insist with collaborators who were favouring writing software over user support. My experience is that user support is hard but also very gratifying, because you really feel that you understand the problems better.

We succeeded in building a set of coherent libraries providing a complete replacement for the old CERNLIB. Users know that once a set of functions is in ROOT, it is maintained, developed and available on all platforms.

Finally, and probably the most important reason for our success, was our clear understanding from the beginning that the I/O subsystem had to support any C++ object and its evolution over time. If you take ATLAS or CMS, they both have well above 20,000 classes. With so many developers and users, classes are continuously evolving. It is mandatory to be able to read collections of objects written five years ago, as well as collections of objects of the evolved classes, automatically and efficiently. This has been a huge amount of work. Most, if not all, commercial companies failed to provide an equivalent service. We succeeded in developing and testing this service, first with the Tevatron and RHIC experiments on real data, then with additional developments and consolidation for the LHC experiments.
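The idea of reading objects written with older versions of a class can be sketched in Python. This is only an illustration of the general principle, not ROOT's I/O code: as far as we understand, ROOT relies on per-class version numbers and class descriptions stored in the file, whereas this sketch substitutes explicit, hand-written migration functions. The `Track` class, its members, the version history and the MeV-to-GeV change are all hypothetical.

```python
from dataclasses import asdict, dataclass

# Hypothetical illustration of schema evolution; not ROOT's actual I/O mechanism.
# Assumed version history of the class:
#   v1: px, py, pz in MeV
#   v2: adds member "charge"
#   v3: momentum stored in GeV instead of MeV
CURRENT_VERSION = 3


@dataclass
class Track:
    px: float
    py: float
    pz: float
    charge: int = 0


def _v1_to_v2(members):
    m = dict(members)
    m.setdefault("charge", 0)  # new member gets a default value
    return m


def _v2_to_v3(members):
    m = dict(members)
    for key in ("px", "py", "pz"):
        m[key] = m[key] / 1000.0  # MeV -> GeV
    return m


# One migration step per old version: version N data -> version N+1 data.
MIGRATIONS = {1: _v1_to_v2, 2: _v2_to_v3}


def write(track):
    """Serialize a Track, tagging it with the current class version."""
    return {"version": CURRENT_VERSION, "members": asdict(track)}


def read(record):
    """Deserialize a record written with any past version of Track."""
    version = record["version"]
    members = record["members"]
    while version < CURRENT_VERSION:
        members = MIGRATIONS[version](members)  # apply migrations in order
        version += 1
    return Track(**members)
```

Storing the version with the data is what lets a reader apply only the conversions that record actually needs; data written today reads back unchanged, while a record written under version 1 passes through both migration steps.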

From your experience what would be your advice to young people who are now in the beginning of their career?

R.B. I see many young and motivated people. Motivation, enthusiasm and dedication to work are the key ingredients. You must quickly come up with something essential in your profile showing that you are able to innovate and to make constructive criticisms of your projects. You must be able to communicate very well, both orally and in writing, and contribute to the team spirit. You must document your work with the idea that somebody maintaining it years later will be impressed by your ideas, your implementation and your description. Do not write code just for yourself; follow the team guidelines and respect the milestones. Always project yourself 5 or 10 years ahead to imagine yourself, your project, your environment, and the context of users and physics.