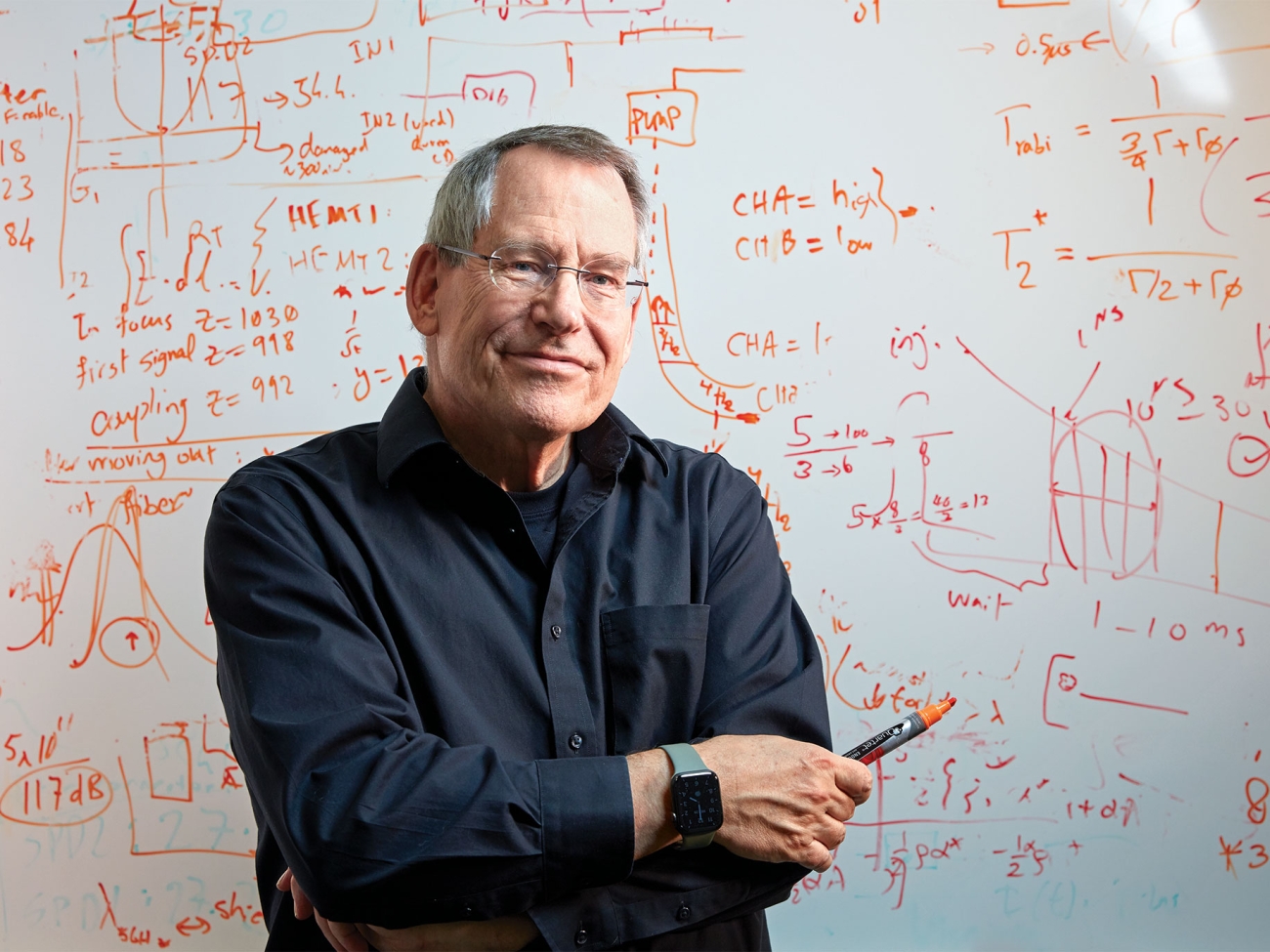

In-Depth Conversation with John Preskill

In this exclusive interview, we sit down with John Preskill, a pioneering physicist and leading expert in quantum computing. As a professor at Caltech and the director of the Institute for Quantum Information and Matter, Preskill has been at the forefront of groundbreaking research that promises to revolutionize technology as we know it. With a rich background in particle physics and fundamental physics, he brings a unique perspective to the conversation. Join us as we delve into his insights on the current state of quantum computing, its potential applications, and what the future holds for this rapidly evolving field (Cover Image: Gregg Segal).

Let's start with your journey. What led you to quantum physics? Was there a defining moment that drew you to quantum information science?

You could call it a Eureka moment. My generation of particle theorists came along a bit late to contribute to the formulation of the Standard Model. Our aim was to understand physics beyond the Standard Model. But the cancellation of the Superconducting Super Collider (SSC) in 1993 was a significant setback, delaying opportunities to explore physics at the electroweak scale and beyond. This prompted me to seek other areas of interest.

At the same time, I became intrigued by quantum information while contemplating black holes and the fate of information within them, especially when they evaporate due to Hawking radiation. In 1994, Peter Shor discovered his algorithm for factoring, and I learned about it that spring. The idea that quantum physics could solve problems unattainable by classical means was remarkably compelling.

I got quite excited right away, and I delved into quantum information without initially intending it to be a long-term shift. But the field proved rich with fascinating questions. Nearly 30 years later, quantum information remains my central focus.

Can you elaborate on how quantum information science and quantum computing challenge conventional understandings of computation?

Fundamentally, computer science is about what computations we can perform in the physical universe. The Turing machine model, developed in the 1930s, captures what it means to do computation in a minimal sense. The extended Church-Turing thesis posits that anything efficiently computable in the physical world can be efficiently computed by a Turing machine. However, quantum computing suggests a need to revise this thesis because Turing machines can't efficiently model the evolution of complex, highly entangled quantum systems. We now hypothesize that the quantum computing model better captures efficient computation in the universe. This represents a revolutionary shift in our understanding of computation, emphasizing that truly understanding computation involves exploring quantum physics.
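A minimal sketch of the scaling behind this point, assuming the most direct classical approach: storing every complex amplitude of an n-qubit state. The function name and the 16 bytes per amplitude (two 64-bit floats) are illustrative assumptions, and cleverer classical methods exist for weakly entangled states, so this shows only why brute force fails, not a proof of hardness.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store all 2**n complex amplitudes of an n-qubit pure state.

    Assumes one complex number (two 64-bit floats = 16 bytes) per amplitude.
    """
    return (2 ** n_qubits) * bytes_per_amplitude


if __name__ == "__main__":
    # Each added qubit doubles the memory a brute-force simulation needs.
    for n in (10, 30, 50):
        print(f"{n} qubits: {state_vector_bytes(n):,} bytes")
```

Under these assumptions, 30 qubits already need 16 GiB, and every additional qubit doubles that figure.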

How do you see quantum information science impacting other scientific fields in the coming decades?

From the beginning, what fascinated me about quantum information wasn't just the technology, though that's certainly important and we're developing and using these technologies. More fundamentally, it offers a powerful new way of thinking about nature. Quantum information provides us with perspectives and tools for understanding highly entangled systems, which are challenging to simulate with conventional computers.

The most significant conceptual impacts have been in the study of quantum matter and quantum gravity. In condensed matter physics, we now classify quantum phases of matter using concepts like quantum complexity and quantum error correction. Quantum complexity considers how difficult it is to create a many-particle or many-qubit state using a quantum computer. Some quantum states require a number of computation steps that grow with system size, while others can be created in a fixed number of steps, regardless of system size. This distinction is fundamental for differentiating phases of matter.

Quantum error correction, originally developed to make error-prone quantum computers reliable, has also provided models for various phases of matter. For instance, systems with topological order allow information to be encoded in a way that it is not accessible locally; you must measure a global property to retrieve the information. This concept parallels quantum error correction, where protecting quantum information involves encoding it in highly entangled states, making it readable only through global operations. Different types of error-correcting codes correspond to different phases of matter.

In the realm of quantum gravity, quantum error correction has been equally transformative. The most concrete idea we have about quantum gravity is the holographic duality, where a bulk geometry is equivalent to a boundary theory in one less dimension. The relationship between bulk quantum gravity and the non-gravitational boundary theory can be viewed as a kind of quantum error-correcting code. For example, removing many qubits from the boundary system leaves the bulk system intact, resilient to boundary damage. This insight is now essential for understanding holography. While we are still working to apply these ideas beyond anti-de Sitter space to better understand quantum gravity in de Sitter space, I believe quantum error correction will remain crucial for these advancements.

How can we best bridge the gap between theoretical advancements in quantum algorithms and their practical implementation on real-world hardware? Or more broadly, how would you describe the relationship between theory and experiment in quantum computing compared to other fields, and how are the two evolving?

The interaction between theory and experiment is vital in all fields of physics. Since the mid-1990s, there's been a close relationship between theory and experiment in quantum information. Initially, the gap between theoretical algorithms and hardware was enormous. Yet, from the moment Shor's algorithm was discovered, experimentalists began building hardware, albeit at first on a tiny scale. After nearly 30 years, we've reached a point where hardware can perform scientifically interesting tasks.

For significant practical impact, we need quantum error correction due to noisy hardware. This involves a large overhead in physical qubits, requiring more efficient error correction techniques and hardware approaches. We're in an era of co-design, where theory and experiment guide each other. Theoretical advancements inform experimental designs, while practical implementations inspire new theoretical developments.

What's the current state of qubits in today's quantum computers?

Today's quantum computers based on superconducting electrical circuits have up to a few hundred qubits. However, noise remains a significant issue, with error rates only slightly better than 1% per two-qubit gate, making it challenging to utilize all these qubits effectively.

Additionally, neutral atom systems held in optical tweezers are advancing rapidly. At Caltech, a group recently built a system with over 6,000 qubits, although it’s not yet capable of computation. These platforms weren’t considered competitive five to ten years ago but have advanced swiftly due to theoretical and technological innovations.

Can you explain what it means to have a system with 200 or 6,000 qubits?

In neutral atom systems, the qubits are atoms, with quantum information encoded in either their ground state or a highly excited state, creating an effective two-level system. These atoms are held in place by optical tweezers, which are finely focused laser beams. By rapidly reconfiguring these tweezers, we can make different atoms interact with each other. When atoms are in their highly excited states, they have large dipole moments, allowing us to perform two-qubit gates. By changing the positions of the qubits, we can facilitate interactions between different pairs.

In superconducting circuits, qubits are fabricated on a chip. These systems use Josephson junctions, where Cooper pairs tunnel across the junction, introducing nonlinearity into the circuit. This nonlinearity allows us to encode quantum information in either the lowest energy state or the first excited state of the circuit. The energy gap between the first and second excited states differs from the gap between the ground state and the first excited state, enabling precise manipulation of just those two levels without inadvertently exciting higher levels. This behavior makes the circuits function effectively as qubits, that is, as two-level quantum systems.
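The role of the nonlinearity can be sketched with a simple weakly anharmonic oscillator model. The model and both numbers below are illustrative assumptions, not figures from the interview: the point is only that unequal level spacings let a drive tuned to one transition miss the next.

```python
# Hypothetical, illustrative numbers (not taken from the interview).
F01_GHZ = 5.0             # ground -> first excited transition frequency
ANHARMONICITY_GHZ = -0.2  # how much each successive gap shrinks


def level_energy_ghz(n: int) -> float:
    """Energy of level n in GHz, relative to the ground state,
    in a weakly anharmonic oscillator model."""
    return n * F01_GHZ + ANHARMONICITY_GHZ * n * (n - 1) / 2


def transition_ghz(n: int) -> float:
    """Frequency needed to drive the transition from level n to level n + 1."""
    return level_energy_ghz(n + 1) - level_energy_ghz(n)


if __name__ == "__main__":
    for n in range(3):
        print(f"{n} -> {n + 1}: {transition_ghz(n):.2f} GHz")
```

With these assumed values the three transitions come out at 5.00, 4.80, and 4.60 GHz. In a perfectly linear circuit the anharmonicity would be zero, every gap would be identical, and a drive at 5 GHz would climb the whole ladder instead of addressing only the two qubit levels.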

As we scale up from a few hundred to a thousand qubits, do we maintain the same principles?

A similar architecture might work for a thousand qubits. But as the number of qubits continues to increase, we'll eventually need a modular design. There's a limit to how many qubits fit on a single chip or in a trap. Future architectures will require modules with interconnectivity, whether chip-to-chip or optical interconnects between atomic traps.

How do error correction algorithms function in a quantum computer?

Think of it as software. Error correction in quantum computing is essentially a procedure akin to cooling. The goal is to remove entropy introduced by noise. This is achieved by processing and measuring the qubits, then resetting the qubits after they are measured. The process of measuring and resetting reduces disorder caused by noise.

The process is implemented through a circuit. A quantum computer can perform operations on pairs of qubits, creating entanglement. In principle, any computation can be built up using two-qubit gates. However, the system must also be capable of measuring qubits during the computation. There will be many rounds of error correction, each involving qubit measurements. These measurements identify errors without interfering with the computation, allowing the process to continue.

While it's possible to incorporate error correction into the hardware itself, we typically view it as an algorithm. This algorithmic approach to error correction ensures that, regardless of the quantum computing hardware in use, we can effectively manage errors and maintain the accuracy of the computations.
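The measure-and-correct cycle described above can be sketched with a classical toy: a three-bit repetition code. This is a deliberate simplification under stated assumptions. It handles only bit flips, at most one per round, whereas real quantum codes must also handle phase errors and extract the parities using extra ancilla qubits. All function names are hypothetical.

```python
import random


def measure_syndrome(bits):
    """Parities of neighboring pairs. They locate a single flip
    without revealing the encoded value itself."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


# Map each syndrome to the index of the bit to flip back (None = no error seen).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(bits):
    """Apply the correction indicated by the syndrome and return the repaired bits."""
    flip = CORRECTION[measure_syndrome(bits)]
    fixed = list(bits)
    if flip is not None:
        fixed[flip] ^= 1
    return fixed


def run_rounds(logical_bit, rounds, rng):
    """Encode one bit as three copies, then alternate noise and correction."""
    bits = [logical_bit] * 3
    for _ in range(rounds):
        bits[rng.randrange(3)] ^= 1  # toy noise: exactly one random flip per round
        bits = correct(bits)         # one round of error correction
    return bits


if __name__ == "__main__":
    print(run_rounds(1, rounds=20, rng=random.Random(0)))
```

The same error produces the same syndrome whether the encoded bit is 0 or 1: `[1, 0, 0]` and `[0, 1, 1]` both give `(1, 0)`. That is the sense in which the measurements identify errors without reading out, and thereby disturbing, the information being protected.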

Does progress in quantum computing teach us anything new about quantum physics at the fundamental level?

This question is close to my heart because I started out in high-energy physics, drawn by its potential to answer the most fundamental questions about nature. However, what we've learned from quantum computing aligns more with the challenges in condensed matter physics. As Phil Anderson famously said, "more is different." When you have many particles interacting strongly quantum mechanically, they become highly entangled and exhibit surprising behaviors.

Studying these quantum devices has significantly advanced our understanding of entanglement. We've discovered that quantum systems can be extremely complex, difficult to simulate, and yet robust in certain ways. For instance, we've learned about quantum error correction, which protects quantum information from errors.

While we are gaining new insights into quantum physics, these insights aren't necessarily about the foundational aspects of quantum mechanics itself. Instead, they pertain to how quantum mechanics operates in complex systems. This understanding is crucial because it could lead to new technologies and innovative ways of comprehending the world around us.

Quantum computers, in particular, will help us broaden our understanding of emergent space-time. They will allow us to explore when and under what conditions emergent space-time can occur, especially in situations where we currently lack the analytical tools to compute what's happening. Quantum simulations will enable us to study these phenomena in a controlled setting.

One of the most exciting aspects of quantum computing is its potential to transform the study of emergent space-time into an experimental science. By investigating how highly entangled systems can be described in terms of emergent space-time, quantum computers will provide us with profound insights into this complex relationship.

Theoretical Physicists John Preskill and Spiros Michalakis describe how things are different in the Quantum World and how that can lead to powerful Quantum Computers. Produced in Partnership with the Institute for Quantum Information and Matter (http://iqim.caltech.edu) at Caltech with funding provided by the National Science Foundation.

Could quantum computing lead to experiments in string theory?

Directly testing quantum gravity through traditional experiments is impractical with today’s technologies. Therefore, we need alternative methods. One of the crucial tools we'll have for investigating and testing our ideas about quantum gravity is quantum computers. It's important to note that this doesn't mean we'll directly observe how nature behaves at that scale. Instead, quantum computers will allow us to simulate and explore models that help us understand quantum gravity.

To draw a parallel, we currently advance physics through simulations on classical computers. A notable example in high-energy physics is lattice gauge theory, proposed in the 1970s to study quantum chromodynamics (QCD) using computers. It took decades for lattice gauge theory to develop into a tool that significantly advanced the physics program. Today, it provides crucial calculations for collider physics and neutrino physics.

Similarly, I believe quantum computing will enable simulations of particle physics problems that are currently too complex for classical computers. This will help us advance our understanding of QCD and other fundamental aspects of physics. However, this progress will take time. We might not see significant breakthroughs in 20 years, but in 40 years, quantum computing could profoundly impact our understanding of fundamental physics.

Considering proposals for future colliders, should we wait for advancements in quantum computing to guide us?

No, I wouldn't recommend waiting. While it will take a long time to develop the next generation of accelerators currently being envisioned, and we may have more advanced quantum computers by then, I don't think we should delay. Yes, it may not be clear what lies ahead in particle physics and where the next discovery could lie. Various proposals to move forward are on the table with their pros and cons. The community will need to carefully determine the best path forward.

Quantum computers might not provide significant guidance in this area for quite some time, so it's crucial to continue progressing with the resources and knowledge we have now. Let's not postpone efforts in the hope of future technological advancements.

Is there a lesson from the SSC you’d like to share?

A lesson? There are many nuances, but I believe we'd have a much more profound understanding of particle physics today, in 2024, if the SSC had started operating in the 1990s as planned. Yes, it would have required a substantial investment, but the corresponding physics payoff would have been significant. Achieving great things often demands tackling challenges that take a long time to complete.

Consider LIGO, for example. Proposed in the 1980s, it seemed overly ambitious and took decades to become operational. Now, it has become a vital tool for advancing our understanding of astrophysics and general relativity at a fundamental level. This story is instructive: sometimes, important scientific advancements require patience and sustained effort.

Can quantum computation teach us something new about information as a fundamental reality?

It's challenging to turn what you just said into a concrete statement. However, the closest idea is that space-time itself might be an emergent property from something more fundamental. This concept suggests that space-time, as we perceive it, could arise from a deeper, underlying structure governed by quantum mechanics. In such a framework, the fundamental constituents wouldn't inherently involve space-time. Instead, space-time would emerge as a macroscopic property from these more basic elements.

What could this fundamental system be? We don't really know. It might be described by the rules of quantum mechanics, evolving according to a Schrödinger equation, with a Hamiltonian and all that, so you wouldn't call that just information. But it would be a system that doesn't have a fundamental interpretation of living on a space-time background. Instead, the space-time background would be an emergent property of that system.

This notion aligns with the idea that information is fundamental to our understanding of the universe. However, it doesn't entirely transform our understanding of physics into one purely based on information. While information plays a crucial role, the physical systems that process this information are still governed by the laws of quantum mechanics.

As I have mentioned, quantum computers present the potential to provide insights into these profound questions. Quantum computing offers us a unique tool to simulate complex quantum systems, shedding light on how space-time and other phenomena emerge from fundamental quantum processes. This capability is particularly valuable because many of these processes are currently beyond our reach with classical computational methods.

Moreover, the concept of emergent space-time suggests that our classical notions of geometry and locality might break down at quantum scales. Quantum entanglement, a key feature of quantum mechanics, could underpin the connectivity and structure of space-time itself. Understanding this relationship requires exploring how highly entangled quantum systems can give rise to the geometric and causal structures we observe.

This perspective deepens our understanding of quantum mechanics and has implications for fields like quantum gravity and string theory. It opens new research avenues, where theoretical developments can be tested through quantum simulations. One exciting aspect of quantum computing is its potential to turn theoretical concepts about emergent space-time into experimental subjects, allowing us to study these ideas in a controlled setting.

Would quantum information science benefit from an international organization like CERN?

It’s an interesting question. You know, from my perspective as a physicist, quantum information—I'll use the broader term—is a tool for discovery. It's allowing us to explore physics in ways we never could before, which would seem to make it a good candidate for coordinated international investment. But where it's different from, say, particle physics, or other big projects, is that there's a strong economic incentive which is driving investment. Tech companies are ramping up their efforts, venture capital is flowing in, and so on. Companies are motivated by the potential for technological breakthroughs and practical applications, leading to substantial funding and rapid advancements.

That said, public sector support remains crucial for long-term vision and achievements that extend beyond the tech industry's typical short-term focus. The tech industry often operates on a horizon of five to ten years, while major scientific advancements may require decades of sustained effort and investment. Universities, national laboratories, and potentially international collaborations can provide this necessary long-term perspective.

While I can't comment specifically on the CERN model, I do believe that increased international cooperation would greatly benefit the field of quantum information. The challenges and opportunities in quantum information science are global. International collaboration can pool resources, share knowledge, and accelerate progress in ways that individual efforts might not achieve.

In quantum computing, we need ideas and we also need the technology. We'd like to have much better qubits that are much less error-prone. What will it take to make much better qubits? Well, that's a research problem. Part of it would probably be materials science: advances in materials with an eye on making qubits that are less noisy. People are working on that, but not in a coordinated way at sufficient scale. That's something that companies and governments can potentially see as a shared goal, because it will benefit everybody.

Moreover, there's a need for public investment to support foundational research that might not have immediate commercial applications but is vital for the field's long-term health. This includes developing better qubits, advancing error correction techniques, and exploring new quantum algorithms. These efforts require a level of sustained funding and collaboration that the private sector alone might not provide.

Additionally, the national security implications of quantum computing, particularly its potential to break classical cryptographic codes, add a layer of complexity to international collaboration. Governments must balance the benefits of cooperation with the need to protect sensitive information. Nevertheless, I believe that the global nature of the scientific enterprise will ultimately drive increased collaboration.

In particle physics or astrophysics, the primary benefit is enhancing our understanding of the universe. In contrast, quantum computing offers practical benefits, driving economic incentives. While the exact applications are still uncertain, the most promising areas include chemistry and materials science, where the potential for profit is clear. This field represents a significant advance in our understanding of quantum matter and complex molecules. In summary, while private investment is accelerating progress, public sector support and international cooperation are crucial for achieving the long-term vision and foundational advancements necessary for quantum information science to reach its full potential.

Any advice for those entering the field of quantum information science?

There's a tremendous amount of excitement among young scientists in the field of quantum information, which is fantastic and accelerates progress. While I'm cautious about giving universally applicable advice, I can share what I believe contributes to the field's appeal and impact: its interdisciplinary nature.

As someone initially trained in high-energy physics, I've had to expand my knowledge significantly. I've delved into computer science, experimental physics, chemistry, and electrical engineering. This interdisciplinary approach has been crucial. Understanding quantum information science requires a blend of skills and knowledge from various fields.

Quantum information science is an inherently interdisciplinary field. It bridges physics, computer science, and engineering, and it benefits from insights across these domains. This breadth is vital for the future evolution of the field. Therefore, I encourage young scientists to seek a broad intellectual foundation in their training. Embrace the interdisciplinary spirit, as it will equip you to tackle the diverse challenges and opportunities in quantum information science.

Could you share a sci-fi movie or book that inspired you over the years?

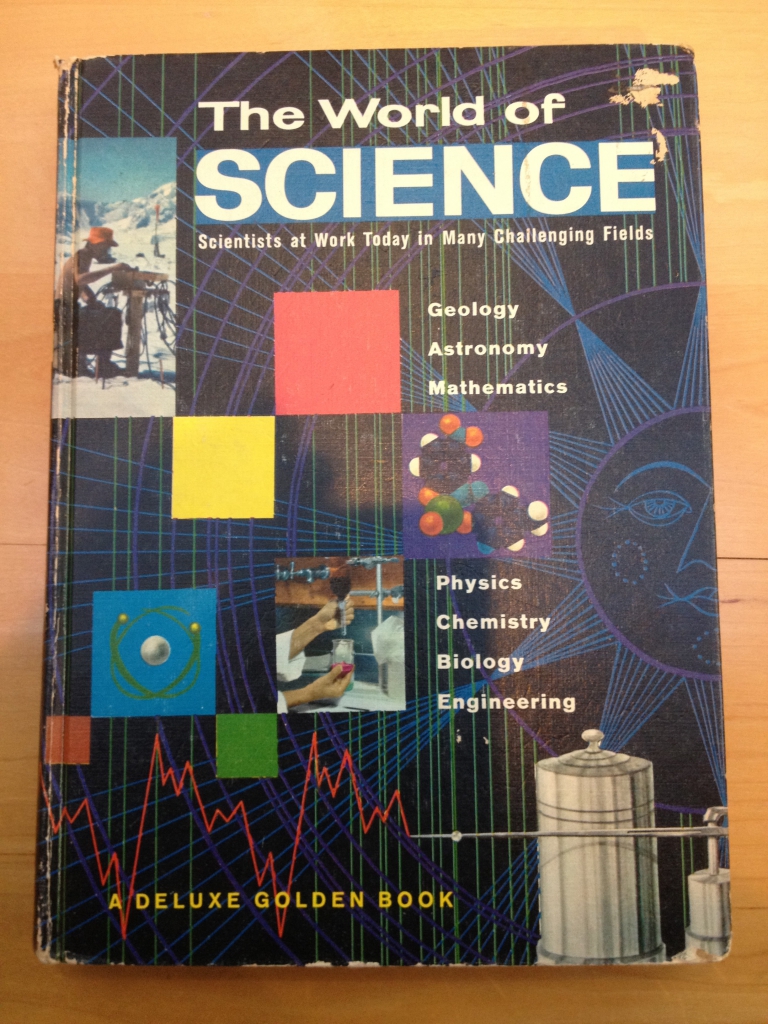

Let me take you back to my childhood for this. I think this story highlights the importance of outreach in sparking a young person's interest in science. When I was ten years old, I received a book called The World of Science. At the time, I had no idea that each chapter was based on interviews with Caltech faculty members, where I now work. The chapter on theoretical physics captivated me the most.

It wasn't until years later that I realized those interviews were with legends like Richard Feynman and Murray Gell-Mann. The book, published shortly after the discovery of parity violation, explained the concept that physics in a mirror is different from physics in real life. It even described the beta decay of cobalt-60, showing how an electron would behave differently based on the direction of its emission.

I found it astonishing that the world could differ in a mirror and that you could discern this difference. This revelation left a profound impact on me, fueling my excitement for science and specifically drawing me towards fundamental physics. Remarkably, about 20 years after reading that book, I became a colleague of Feynman and Gell-Mann at Caltech, which was incredibly exciting for me.

John Preskill's copy of The World of Science by Jane Werner Watson, purchased in 1962 when he was in the 4th grade.

Note: A few days after this interview it was announced that the Eighth Biennial John Stewart Bell Prize for Research on Fundamental Issues in Quantum Mechanics and Their Applications will be awarded to John Preskill (Richard P. Feynman Professor of Theoretical Physics, California Institute of Technology) at the 10th International Conference on Quantum Information and Quantum Control.