Extending ATLAS Physics Reach with Analysis Reuse Technology

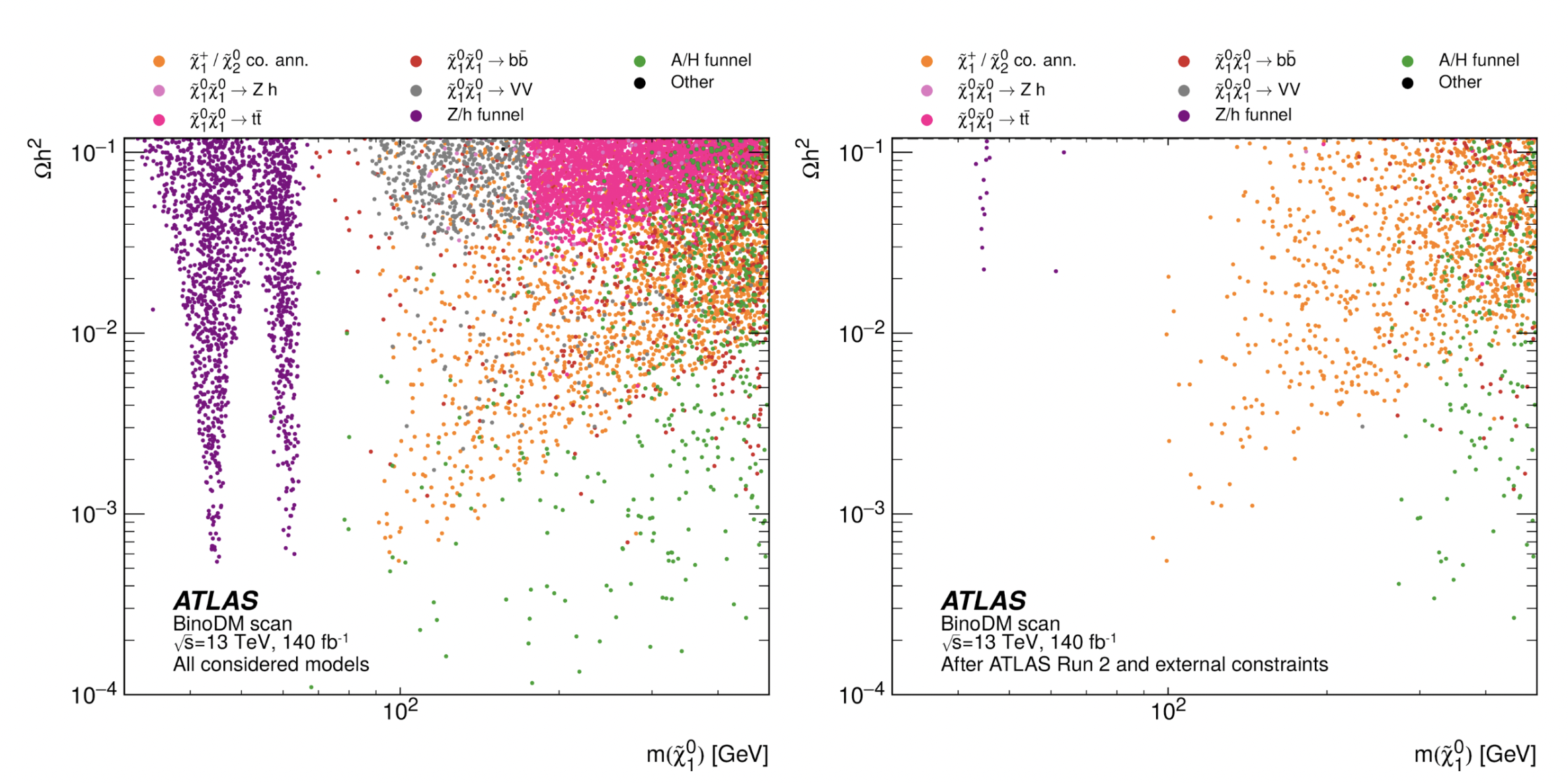

In August 2023, the ATLAS Collaboration presented the most comprehensive results to date of searches for electroweak production of supersymmetric particles interpreted within the Phenomenological Minimal Supersymmetric Standard Model (pMSSM) theoretical framework. This result was a tour de force from ATLAS’s supersymmetry physics group, pulling together results from eight separate ATLAS searches using data collected during Run 2 of the LHC and evaluating tens of thousands of supersymmetric models in the 19-dimensional parameter space to set new, more restrictive constraints on models with supersymmetric dark matter particles. This analysis extended the work done in Run 1 of the LHC, as seen in Figure 1, by taking advantage of a broader range of search channels strategically selected during Run 2 and also by leveraging several new technologies that allowed ATLAS to push past the steep computational costs of an analysis of this scale.

Figure 1: The distribution of unexcluded supersymmetry models as a function of two model parameters involving dark matter, shown before (left) and after (right) the results of the 2023 ATLAS pMSSM analysis. The efficient use of new technologies, including RECAST and REANA, allowed the analysis to evaluate and exclude nearly all models in “funnel regions” where the mass of the dark matter candidate (horizontal axis) is approximately half the mass of the Higgs boson or Z boson. (Image: ATLAS Collaboration, arXiv:2402.01392).

Among the new technologies was the ATLAS implementation of the RECAST analysis preservation and reuse framework. ATLAS has used RECAST to fully preserve the analysis workflow logic and complete software environments of searches for new physics in an analysis catalogue of executable workflows that began with work for the Run 1 ATLAS pMSSM analysis in 2015. This level of full analysis preservation enables rerunning the detector-level analysis on demand with a new signal model, so that preserved analyses become reusable tools for searches for new physics in kinematic regions similar to those of the original analysis. Key to the power of the pMSSM analysis was leveraging RECAST to reuse the full detector-level analyses for supersymmetric models that could not be clearly excluded in a pass of the analysis that used a simplified particle-level evaluation. However, evaluating a full analysis workflow for each of the thousands of models in the considered theory landscape presents a large computational challenge that had previously been intractable because of the scale of computing resources required and the coordination needed to use them.
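The two-pass strategy described above can be illustrated with a minimal Python sketch. It is not the ATLAS implementation: the names `ModelPoint`, `needs_full_analysis` and `evaluate_models` are hypothetical, the "clearly excluded" cut of 0.01 is an assumed value chosen only for illustration, and the full detector-level analysis is represented by a stand-in function rather than a real RECAST workflow. The only convention taken as given is that a model is excluded at 95% confidence level when its CLs value is below 0.05.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable

EXCLUSION_CLS = 0.05        # conventional threshold: CLs < 0.05 means excluded at 95% CL
CLEAR_EXCLUSION_CLS = 0.01  # hypothetical cut for "clearly excluded" in the fast pass


@dataclass(frozen=True)
class ModelPoint:
    """One pMSSM model with its result from the simplified particle-level pass."""
    model_id: int
    fast_cls: float


def needs_full_analysis(model: ModelPoint) -> bool:
    """A model that the fast pass cannot clearly exclude goes to the full analysis."""
    return model.fast_cls >= CLEAR_EXCLUSION_CLS


def evaluate_models(
    models: Iterable[ModelPoint],
    run_full_analysis: Callable[[ModelPoint], float],
) -> Dict[int, bool]:
    """Return {model_id: excluded?}, calling the expensive analysis only when needed."""
    excluded = {}
    for model in models:
        if needs_full_analysis(model):
            cls = run_full_analysis(model)  # expensive detector-level pass
        else:
            cls = model.fast_cls            # the fast result is already decisive
        excluded[model.model_id] = cls < EXCLUSION_CLS
    return excluded


def stand_in_full_analysis(model: ModelPoint) -> float:
    """Placeholder for a preserved detector-level workflow (assumption, not RECAST)."""
    return model.fast_cls * 0.5


models = [ModelPoint(1, 0.001), ModelPoint(2, 0.08), ModelPoint(3, 0.9)]
print(evaluate_models(models, stand_in_full_analysis))
# prints {1: True, 2: True, 3: False}
```

In this toy example only models 2 and 3 trigger the expensive step, which is the point of the approach: the cost of the full workflow is paid only for models that the cheaper evaluation cannot settle.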

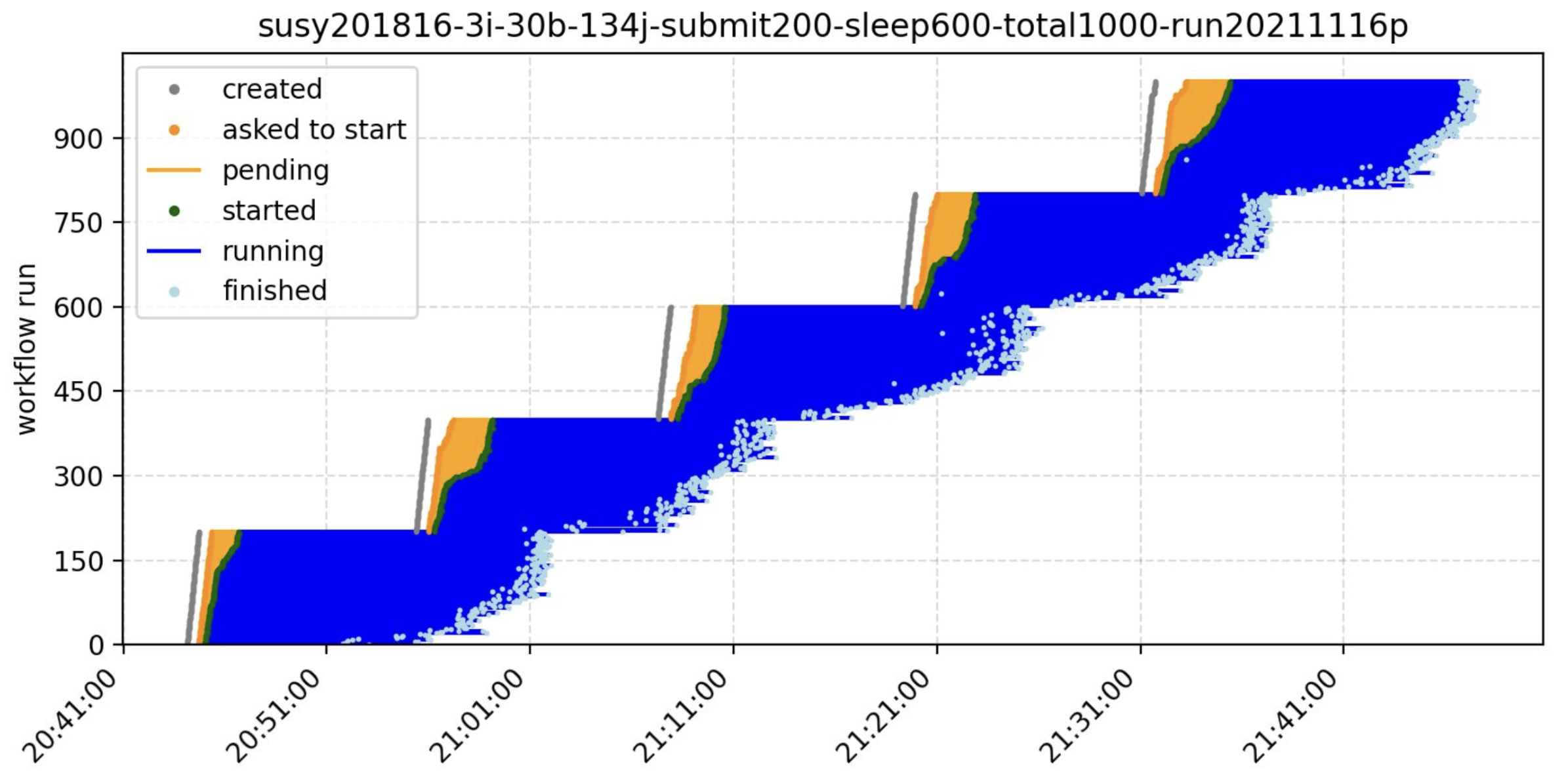

RECAST builds upon workflow language technology to support complex analysis logic and Linux containers to create distributable “image” snapshots of the full analysis software environment, making it a great match for the CERN REANA reproducible research data analysis platform, which was originally designed to run analyses preserved in systems like RECAST. As REANA is based on technology native to cloud computing environments, it can scale containerized workloads described by workflow languages efficiently across thousands of computing cores, as seen in Figure 2. With RECAST workflows deployed to REANA, the ATLAS pMSSM analysis team was able to harness this computing power to complete full analysis passes in short periods of time, allowing for faster and more informed analysis iterations. These technologies made it feasible for the analysis to search a significantly wider area of parameter space than ever before at the LHC, allowing for a survey of which pMSSM models had been missed by previous analyses. Collecting these unexcluded models also informs the parameter spaces of future searches to maximise coverage.

Figure 2: A visualisation of a REANA scaling test that submits test pMSSM workflows (without systematic variations; workflow number on the vertical axis) on a periodic basis (compute datetime on the horizontal axis). The REANA cluster, with over 1000 cores, is able to scale comfortably to meet the compute demand, running the incoming workloads (dark blue) while minimising the number of workflows that are waiting for resource allocation (light orange). (Image: Marco Donadoni et al., arXiv:2403.03494, CHEP 2023 proceedings).

This implementation of the ATLAS pMSSM analysis is the first of its kind in scale and coverage, and it also offers a glimpse of a new paradigm of analysis combinations for Run 3 of the LHC and beyond. As these powerful and flexible analysis technologies continue to mature and are stress tested at the LHC, they can significantly lower the barrier for more advanced future combinations with increased sensitivity across multiple searches for new physics. However, new technologies also take time to be adopted by the LHC experimental collaborations, and they need domain experts to educate the collaborations in their use and their application to physics problems. Understanding the intersection of technologies and experiment needs has been the impetus for the first workshop on workflow language usage in high energy physics, to be held at CERN in April 2024, which will bring together experts from the LHC experiments and the broader scientific open source community to inform future pathways and technology development. With the majority of the multidecadal LHC physics program still in front of us, analysis reuse technologies may be an addition to physicists' trusty toolkits that helps enable new pathways for discovery.

Further reading:

- ATLAS Collaboration. ATLAS releases comprehensive review of supersymmetric dark matter, ATLAS Physics Briefing, August 2023. https://atlas.cern/Updates/Physics-Briefing/SUSY-Dark-Matter

- Lukas Heinrich. RECAST: A framework for reinterpreting physics searches at the LHC, CERN EP Newsletter, December 2019. https://ep-news.web.cern.ch/recast-framework-reinterpreting-physics-searches-lhc

- Cranmer, K., Yavin, I. RECAST — extending the impact of existing analyses. J. High Energ. Phys. 2011, 38 (2011). https://doi.org/10.1007/JHEP04(2011)038

- Chen, X., Dallmeier-Tiessen, S., Dasler, R. et al. Open is not enough. Nature Phys 15, 113–119 (2019). https://doi.org/10.1038/s41567-018-0342-2

- ATLAS Collaboration. Summary of the ATLAS experiment’s sensitivity to supersymmetry after LHC Run 1 — interpreted in the phenomenological MSSM. J. High Energ. Phys. 2015, 134 (2015). https://doi.org/10.1007/JHEP10(2015)134

- ATLAS Collaboration. ATLAS Run 2 searches for electroweak production of supersymmetric particles interpreted within the pMSSM. (2023). https://arxiv.org/abs/2402.01392

- Donadoni, M. et al. Scalable ATLAS pMSSM computational workflows using containerised REANA reusable analysis platform. (2024). https://arxiv.org/abs/2403.03494