G4HepEm: accelerated electromagnetic shower simulation for the HL–LHC

When the High–Luminosity LHC (HL–LHC) starts delivering data, the volume of simulated events required to control systematic uncertainties will rise sharply. In ATLAS, detailed detector simulation already consumes a large fraction of the computing budget; in CMS, it is expected to grow substantially with the Phase-2 upgrade, notably because of the high-granularity calorimeter (HGCAL). Making detailed simulation faster—without sacrificing physics quality—directly translates into more events and smaller uncertainties for the same computing resources, or equivalently fewer CPU-hours and lower energy per event, contributing to greener computing.

Geant4 is designed to model many particle types with a single, highly flexible tracking algorithm. That generality is powerful, enabling the toolkit to cover a broad spectrum of particle–matter interactions—a flexibility that has been pivotal to its success for nearly three decades across diverse application domains, from high–energy physics to space science, medical physics and industry. In LHC detector simulation, however, the required EM physics is well defined and the computing cost is dominated by a small set of particles—electrons, positrons and photons—so much of that flexibility remains unused. Streamlining the transport of this small set of particles can provide a way to speed up detector simulation as a whole.

What G4HepEm is

G4HepEm is a new extension to Geant4 that specialises the transport of electrons, positrons and photons—the three particle species that dominate tracking time in LHC detector simulations. The principle is simple: retain Geant4’s electromagnetic (EM) physics precision required for HEP detector simulations, but replace the generic tracking loop and step computation with specialised ones for the most performance-critical particles. The result is a tangible performance gain in full experiment frameworks, with physics outputs that remain consistent with native Geant4 simulations.

G4HepEm focuses only on electrons, positrons and photons, providing a dedicated, streamlined implementation of their transport. Moreover, having specialised tracking loops for e± and γ enables the efficient implementation of further, particle–specific algorithmic optimisations:

- for e±, multiple scattering is combined with transportation in a single streamlined loop;

- for γ, an optional Woodcock (delta–tracking) mode reduces the number of neutral steps in granular geometries.
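To make the Woodcock idea concrete, here is a minimal toy sketch, not G4HepEm's implementation. It assumes a one-dimensional stack of layers, a single photon energy, and that every real interaction ends the photon's history; all function names and the layer values are hypothetical. The delta–tracking loop samples flight distances with the largest attenuation coefficient in the stack and accepts an interaction with probability μ(x)/μ_max, so layer boundaries never limit a step. A conventional boundary–limited tracker is included for comparison.

```python
import bisect
import itertools
import math
import random


def make_stack(layers):
    """Precompute cumulative edges and coefficients for (thickness, mu) layers."""
    edges = list(itertools.accumulate(t for t, _ in layers))
    mus = [mu for _, mu in layers]
    return edges, mus


def woodcock_photon(edges, mus, rng):
    """Delta-tracking: return (interaction depth or None if escaped, steps)."""
    mu_max = max(mus)  # majorant attenuation coefficient of the whole stack
    x, steps = 0.0, 0
    while True:
        # Sample a flight distance as if the whole stack had coefficient mu_max.
        x += -math.log(1.0 - rng.random()) / mu_max
        steps += 1
        if x >= edges[-1]:
            return None, steps  # photon left the stack
        local_mu = mus[bisect.bisect_right(edges, x)]
        # Real interaction with probability mu(x)/mu_max, otherwise a virtual one.
        if rng.random() < local_mu / mu_max:
            return x, steps


def boundary_photon(edges, mus, rng):
    """Conventional tracking: every layer boundary limits the step."""
    start, steps = 0.0, 0
    for edge, mu in zip(edges, mus):
        steps += 1
        s = -math.log(1.0 - rng.random()) / mu
        if start + s < edge:
            return start + s, steps
        start = edge  # no interaction in this layer, move to the next boundary
    return None, steps


def transmission_and_steps(tracker, layers, n_photons, seed):
    """Return (escaped fraction, mean steps per photon) for the given tracker."""
    edges, mus = make_stack(layers)
    rng = random.Random(seed)
    escaped = total_steps = 0
    for _ in range(n_photons):
        depth, steps = tracker(edges, mus, rng)
        escaped += depth is None
        total_steps += steps
    return escaped / n_photons, total_steps / n_photons


if __name__ == "__main__":
    # Hypothetical granular stack: 50 thin dense layers alternating with lighter gaps.
    layers = [(0.02, 2.0), (0.08, 0.1)] * 50
    print("analytic transmission:", math.exp(-sum(t * mu for t, mu in layers)))
    for tracker in (boundary_photon, woodcock_photon):
        print(tracker.__name__, transmission_and_steps(tracker, layers, 20000, seed=1))
```

Both trackers should reproduce the analytic transmission within statistical precision. In a finely layered stack like this one, the delta–tracking loop needs far fewer steps per photon because it never stops at a boundary. The gain shrinks when layers are thick compared with the mean free path at μ_max.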

Everything else in the application (geometry, navigation, user actions) remains exactly as before. In practice, experiments enable a dedicated tracking manager for e±/γ, and the rest of Geant4 continues unchanged. This keeps both integration risk and effort low while making testing and validation easier.
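The delegation pattern behind this can be pictured with a small toy sketch. It is written in Python for brevity and does not use the Geant4 or G4HepEm API; every class and method name below is hypothetical. The only point is that tracks of registered particle types are handed to a specialised manager, while all other tracks follow the generic path untouched.

```python
from dataclasses import dataclass


@dataclass
class Track:
    particle: str       # e.g. "e-", "e+", "gamma", "pi+"
    energy_mev: float


class RecordingTracker:
    """Stand-in for a tracking loop; it only records which tracks it received."""

    def __init__(self, name):
        self.name = name
        self.handled = []

    def process(self, track):
        self.handled.append(track)


class EventLoop:
    """Routes each track to a specialised manager if one is registered."""

    def __init__(self, generic):
        self.generic = generic
        self.managers = {}  # particle name -> specialised manager

    def register(self, particle, manager):
        self.managers[particle] = manager

    def run(self, tracks):
        for track in tracks:
            # Fall back to the generic loop for particles without a manager.
            self.managers.get(track.particle, self.generic).process(track)


if __name__ == "__main__":
    generic = RecordingTracker("generic")
    em = RecordingTracker("specialised e±/γ")
    loop = EventLoop(generic)
    for name in ("e-", "e+", "gamma"):
        loop.register(name, em)
    loop.run([Track("e-", 10.0), Track("pi+", 500.0), Track("gamma", 2.0)])
    print([t.particle for t in em.handled], [t.particle for t in generic.handled])
```

Because the routing is per particle type, switching the specialised path on or off does not touch the code that handles any other particle, which is what keeps validation contained.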

From theory to experimental setups

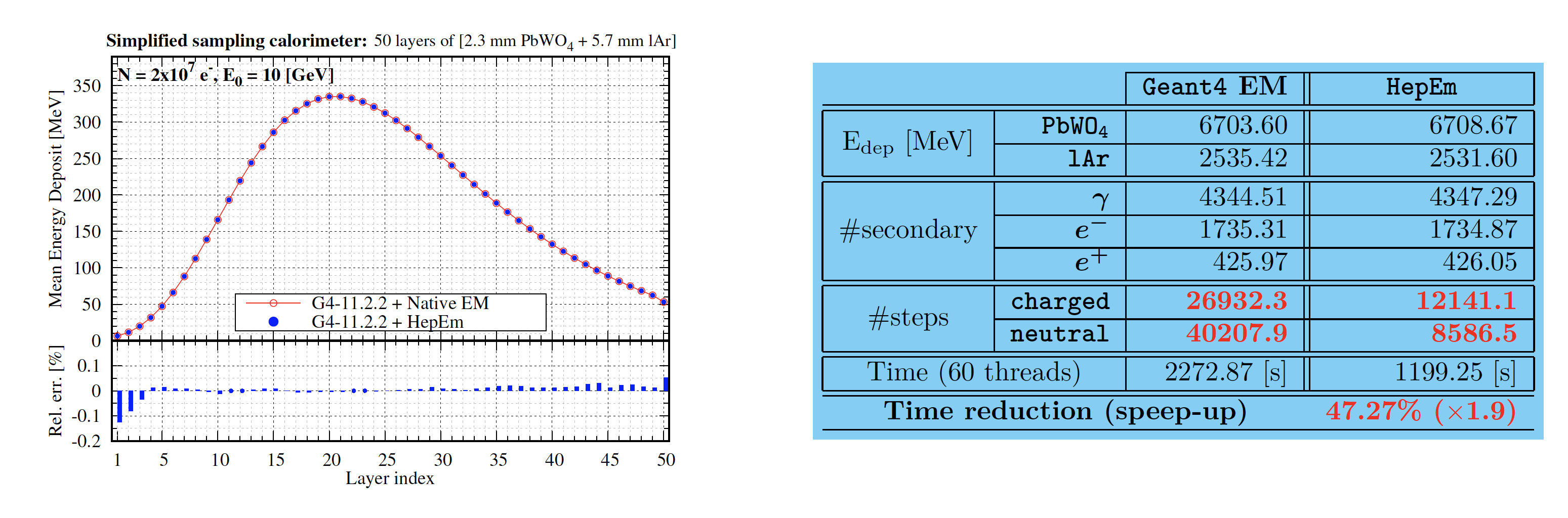

The computational benefits offered by G4HepEm were first studied in a controlled calorimeter setup, where energy flow and secondary production agree with those of native Geant4 within statistical precision (Fig. 1). This is expected, as G4HepEm reimplements the same EM physics within a more efficient transport engine that, by default, preserves not only the detailed physics but also the native simulation step structure. The optional, particle-specific algorithmic optimisations applied in this study can yield substantial additional performance gains by reducing the number of required simulation steps without modifying the physics, as also demonstrated in Fig. 1.

Figure 1: Simulation of an EM shower generated by a 10 GeV primary e− beam in a simplified sampling calorimeter using the native Geant4 and dedicated G4HepEm tracking of e±/γ. The longitudinal profile (left, using 2 × 10⁷ primaries), the mean energy deposit and the secondary production statistics (right, based on 10⁶ primaries) show excellent agreement, with a ~2× speed–up.

The real test, however, is in the LHC experiments, in particular ATLAS and CMS. The technical and functional integration of G4HepEm in ATLAS (Athena) and CMS (CMSSW) made it possible to assess physics and computing performance in the experiments’ current production configurations¹. In the case of ATLAS, we performed a full physics validation and observed excellent agreement with native Geant4 across calorimeter and tracking observables; in CMS, we carried out hit–level verification with similarly good agreement. Regarding the computing performance (Fig. 2):

- ATLAS (Run–3 configuration): in a standard tt̄ workflow, wall–time per event improves by around 20% on a modern multicore node, with observables in excellent agreement.

- CMS (Run–3 and Phase–2): in CMSSW tests, we observe ~14% speed–up for Run–3 and ~20% for Phase–2 geometries with HGCAL, while maintaining agreement at the level of calorimeter hits. In CMS software, G4HepEm is integrated and available for use by default.

For context, ahead of Run 3 we already made available to ATLAS a limited variant of Woodcock (delta–tracking) used only in the electromagnetic End–Cap calorimeter (EMEC). This delivered an ~18% reduction in detector–simulation time on the grid during Run 3. The G4HepEm gains reported above are additional (~20%) improvements that experiments can harvest through the HL–LHC era.
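As a rough guide to what such percentages mean for event throughput, the short sketch below converts a fractional reduction in per-event time into events per CPU-hour and combines two successive reductions. The function names are hypothetical. Treating the ~18% and ~20% figures as successive multiplicative reductions of the same per-event time is an assumption made here purely for illustration: the two numbers come from different measurements, so the combined value is not a result reported by the experiments.

```python
def throughput_gain(time_reduction):
    """Relative events per CPU-hour after cutting per-event time by `time_reduction`."""
    if not 0.0 <= time_reduction < 1.0:
        raise ValueError("time_reduction must be in [0, 1)")
    return 1.0 / (1.0 - time_reduction)


def combined_reduction(*reductions):
    """Total fractional reduction if each cut applies to the time left by the previous one."""
    remaining = 1.0
    for r in reductions:
        remaining *= 1.0 - r
    return 1.0 - remaining


if __name__ == "__main__":
    # A 20% shorter event takes 0.8 of the time, so 1/0.8 events fit in the same budget.
    print(f"{throughput_gain(0.20):.2f}x events per CPU-hour")   # 1.25x
    # Assumed successive cuts: 1 - (0.82 * 0.80).
    print(f"{combined_reduction(0.18, 0.20):.1%} combined")      # 34.4%
```

Under these assumptions, a 20% reduction in time per event corresponds to 25% more events for the same computing resources.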

At the time of writing, an officially approved and fully validated Run–4 simulation configuration is not yet available for ATLAS; however, tests on the available candidate setup show a ~20% reduction in simulation time per event, comparable to that observed for the Run–3 configuration reported above.

Figure 2: Mean event processing time when simulating top–antitop (tt̄) events in ATLAS (Athena) Run 3 (left) and CMS (CMSSW) Run 4 (right) configurations using the native Geant4 and dedicated G4HepEm tracking of electrons, positrons and photons.

Outlook

The LHC experiments plan to meet HL–LHC computing goals by combining detailed simulation with fast simulation. Improving the efficiency of detailed Geant4 helps on both fronts: it maximises the fraction of samples that can be produced with full fidelity, and it reduces pressure on fast–simulation tuning and validation. Because G4HepEm touches only the transport mechanics for e±/γ and leaves physics models intact, it is a natural optimisation to deploy broadly where detector simulation dominates the computing budget.

G4HepEm is delivered as a compact runtime library for CPUs and GPUs, sharing the same physics implementation. It plugs into Geant4 through a dedicated tracking manager, which is the same interface used by ongoing GPU R&D efforts (e.g. AdePT in our EP-SFT group). This gives experiments a single EM implementation across CPU and GPU back–ends, simplifying validation and mixed workflows.

In summary, G4HepEm brings specialised electromagnetic transport to Geant4–based detector simulation. It delivers double–digit wall–time reductions in full experiment workflows, and up to ×2 in EM–dominated set–ups, without changing physics outputs. It is integrated today in ATLAS and CMS and is ready to help the LHC experiments meet their HL–LHC computing targets.

Notes

1 The figures quoted here are indicative and are not official results of the ATLAS or CMS collaborations.

Contact: mihaly.novak@cern.ch (EP–SFT)

Cover image: S Sioni/CMS-PHO-EVENTS-2021-004-2/M Rayner