Intelligent Design for Particle Detectors

Particle detectors are becoming ubiquitous: originally designed as tools for fundamental physics (collider physics experiments, neutrino observatories, etc.), they are also increasingly used in medical and industrial applications. For all use cases, the main challenge in the design of a detector can be described in the same terms: achieving the best practical outcome (e.g. detection sensitivity or physics reach) given technological and funding constraints.

Whatever their use, these instruments have steadily grown in complexity over time: several detector technologies are routinely combined in the same set-up, alongside new design options, and their performance is often interconnected in subtle ways. As a consequence, the traditional, time-honoured approach of optimizing the detector parameters in blocks, or sequentially, is no longer guaranteed to yield optimal outcomes, while a simultaneous scan of all key parameters becomes less and less sustainable.

Fortunately, the software paradigm of differentiable programming (DP) has rapidly gained ground in various areas of technology, as it is a prerequisite for modern machine learning. With DP, the user has access to exact derivatives of functions at only a small computational overhead, thanks to automatic differentiation (a systematic application of the chain rule to every elementary operation of a program). When used in a gradient-descent-based optimization algorithm, DP makes it possible to solve super-human tasks such as the simultaneous optimization of millions of parameters of a machine learning model or, in our case, of a detector. This is the broad goal of a recently formed consortium of physicists (from various subfields) and computer scientists: the MODE Collaboration (for Machine-learning Optimized Design of Experiments) [1, 2].
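To make the idea of automatic differentiation concrete, here is a minimal sketch of forward-mode differentiation with dual numbers in plain Python. It is an illustration only: the `Dual` class, the helper `derivative` and the toy function `f` are hypothetical names introduced here, and production frameworks typically use reverse-mode differentiation, which scales better to many parameters.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value carried together with its derivative w.r.t. one input."""
    val: float
    der: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def _lift(x):
    """Promote a plain number to a constant Dual (derivative zero)."""
    return x if isinstance(x, Dual) else Dual(float(x))


def sin(x):
    # Chain rule: sin(u)' = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def f(x):
    # Toy function (an assumption for illustration): f(x) = x * sin(x) + 3x
    return x * sin(x) + 3 * x


def derivative(func, x0):
    """Evaluate func at x0 with a seed derivative of 1 and read off df/dx."""
    return func(Dual(x0, 1.0)).der
```

Calling `derivative(f, 1.2)` propagates the derivative through every operation of `f` and agrees with the hand-derived expression `sin(x) + x*cos(x) + 3` up to floating-point rounding, with no finite-difference step size to tune.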

The path from the parameters of an individual sensor to their impact on the final physics aim, e.g. the precision on Higgs couplings, is a long one, and requires many steps that need to be expressed in a differentiable way. However, even targeting only individual building blocks can already improve our physics reach. Beyond striking examples such as the reconstruction of individual physics objects and new paradigms for reconstructing multiple particles directly from detector hits [3], differentiable programming techniques have also found their way into the optimisation of the analyses themselves [4, 5]. The MODE Collaboration seeks to connect these existing building blocks and integrate them into a fully differentiable chain: from the detector simulation, through the downstream processing and the reconstruction algorithms, up to the analysis.

In practice, this can be quite a daunting task since, apart from these examples, most of the programs and algorithms currently used by the community were not written to be differentiable. Fortunately, it is possible to break down the various steps into individual components and even replace some of these components with differentiable surrogate models, which have already been used successfully, e.g. in a study to optimise the SHiP muon shielding [6] or for accelerator control [7].
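The surrogate idea can be sketched with a deliberately simple stand-in. In the example below, `black_box` is a hypothetical quantised response whose derivative is zero almost everywhere, and a local linear least-squares fit plays the role of the differentiable surrogate. This is a much simpler technique than the generative surrogates of [6]; all function names and parameter values are assumptions made for illustration.

```python
def black_box(x):
    """Stand-in for a non-differentiable simulation: a quantised response.

    The underlying trend is (x - 2)^2, but rounding makes the output a
    staircase, so its pointwise derivative carries no useful information.
    """
    return round((x - 2.0) ** 2 * 20) / 20


def fit_local_linear(func, x0, half_width=0.5, n_points=21):
    """Least-squares fit of y = intercept + slope * x around x0."""
    # Evenly spaced sample points in [x0 - half_width, x0 + half_width]
    xs = [x0 + half_width * (2 * i / (n_points - 1) - 1)
          for i in range(n_points)]
    ys = [func(x) for x in xs]
    x_mean = sum(xs) / n_points
    y_mean = sum(ys) / n_points
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


def optimise(x, steps=50, learning_rate=0.2):
    """Gradient descent that uses the surrogate's slope as the gradient."""
    for _ in range(steps):
        slope, _intercept = fit_local_linear(black_box, x)
        x -= learning_rate * slope
    return x
```

Starting from `optimise(5.0)`, the parameter settles close to the minimum of the underlying trend at `x = 2`, even though the black box itself offers no usable derivative. The surrogate is refitted at every step, so it only needs to be accurate locally.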

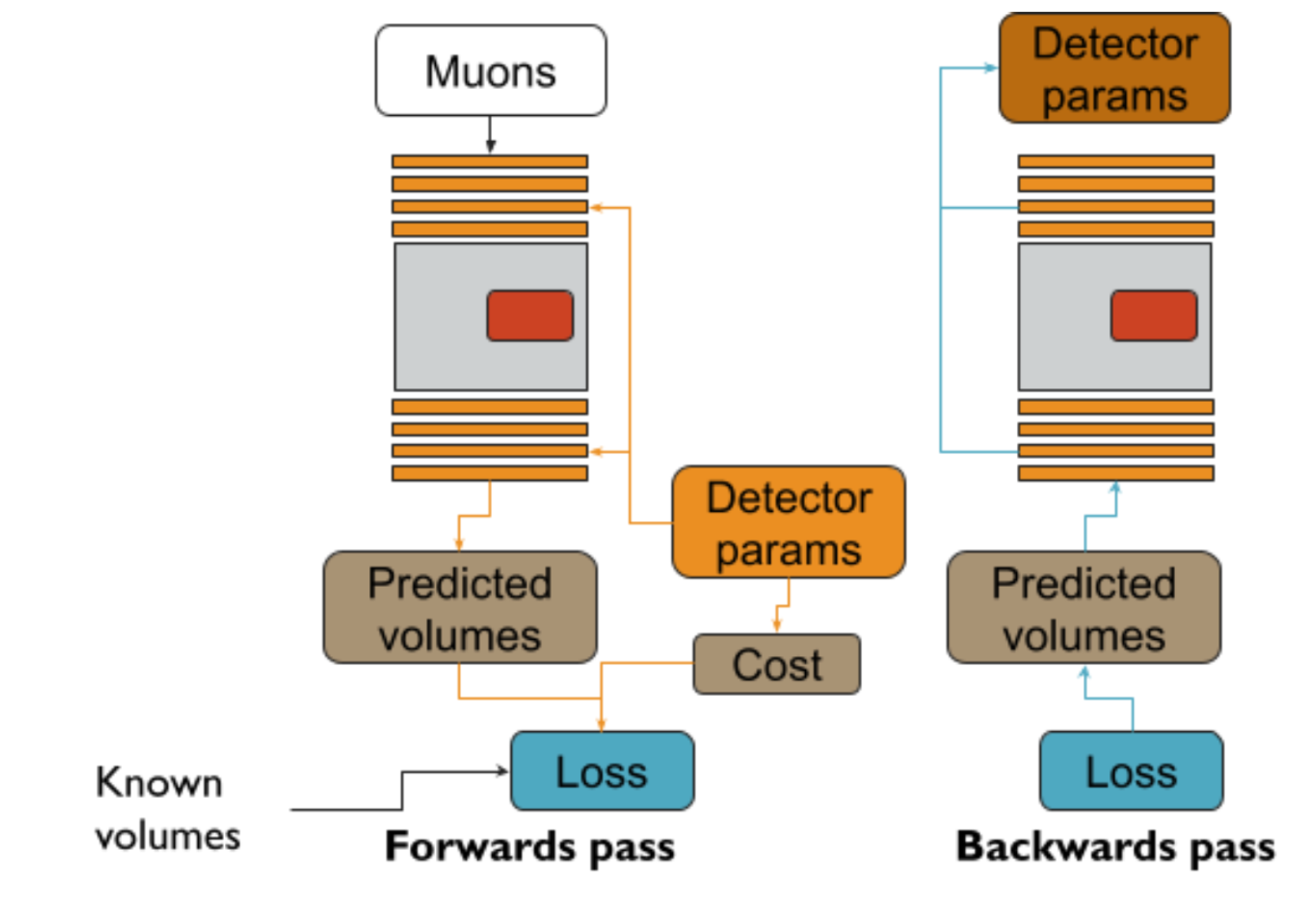

Once the measurement pipeline has been made fully differentiable, it becomes possible to compute how each part of the detector affects the final sensitivity of the measurement. The detector modules may then be optimised so that the sensitivity is maximised, whilst also accounting for the cost of the components and for other constraints such as heat, size and power consumption.
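As a toy illustration of such a cost-aware objective, the sketch below optimises a single, hypothetical "panel size" parameter. The saturating sensitivity model, the linear cost, the weight `cost_weight` and all numerical values are assumptions chosen for illustration, and the gradient is written by hand here, whereas a differentiable pipeline would obtain it by automatic differentiation.

```python
import math


def sensitivity(panel_size):
    """Assumed toy model: sensitivity saturates as the panel grows."""
    return 1.0 - math.exp(-panel_size)


def cost(panel_size):
    """Assumed toy model: cost grows linearly with panel size."""
    return panel_size


def loss(panel_size, cost_weight):
    """Lower is better: reward sensitivity, penalise cost."""
    return -sensitivity(panel_size) + cost_weight * cost(panel_size)


def loss_gradient(panel_size, cost_weight):
    """Hand-derived d(loss)/d(panel_size) for the toy models above."""
    return -math.exp(-panel_size) + cost_weight


def optimise_panel(panel_size=0.1, cost_weight=0.2,
                   learning_rate=0.5, steps=500):
    """Plain gradient descent on the cost-aware loss."""
    for _ in range(steps):
        panel_size -= learning_rate * loss_gradient(panel_size, cost_weight)
    return panel_size
```

For this toy model the optimum can be checked analytically: the gradient vanishes at `panel_size = -ln(cost_weight)`, so a larger cost weight yields a smaller panel. This mirrors how a cost term keeps an optimised detector from growing without bound.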

The figures below give an example of such a process in the context of muon tomography, using the in-development TomOpt package.

Figure 1: Example of TomOpt optimising a detector for classifying the presence of uranium blocks inside containers of scrap metal. The detector panels (shown in the bottom half) move from a sub-optimal starting configuration to cover the container, resulting in improved classification power. The loss function also accounts for the cost of the detector system, which prevents the panels from becoming too large.

Figure 2: An illustration of the optimisation process in TomOpt. A loss is computed based on the current setup of the detector for a range of predictions on different volumes. This is then differentiated with respect to the detector parameters, which are then updated via gradient descent.

We emphasize that these tools do not aim to take over the decision-making process of detector design. On the contrary, we envisage them becoming part of a “human in the middle” system, in much the same way as packages like GEANT4 allow researchers to simulate their designs. These new tools can assist researchers in making more informed design choices and in efficiently exploring less orthodox configurations that might otherwise be overlooked but could nonetheless perform well. An example of such an unorthodox yet well-performing design choice is the evolved antenna, which has been successfully tested in the NASA missions ST5 and LADEE.

The concepts outlined above are applicable to a large set of challenges in particle physics and astrophysics, as also described in a recent white paper [8]. Proton Computed Tomography experiments and low-energy particle physics experiments can also profit from joint optimization techniques. Experiments in astroparticle and neutrino physics, as well as apparatuses for gamma-ray astronomy and interferometric gravitational-wave detection, can likewise be optimized with differentiable programming, finding the best solutions within the limited budgets that funding agencies impose on projects. Cosmic-ray muon imaging is one of the most immediately promising fields, as it offers a manageable level of complexity and is therefore a good test bed for building a differentiable pipeline from scratch. The MODE Collaboration has already begun prototyping a software package, TomOpt (see e.g. this presentation), to research and develop techniques for detector optimisation in this field. The technique is being benchmarked on applications ranging from contraband detection to the identification of inner cracks in statues needing restoration. In addition, MODE Collaboration members are currently investigating calorimeter optimization for the LHCb upgrade as well as new calorimeter designs, as the shift towards high-granularity detectors and the challenges of denser environments motivate new approaches in this area.

In the context of these efforts, a community is forming, fuelled by annual workshops organized by the MODE Collaboration, whose next instance will take place this September (registrations are open [9]!).

References

[1] MODE Collaboration, website

[2] MODE Collaboration, Toward Machine Learning Optimization of Experimental Design, Nuclear Physics News International 31, 1 (2021)

[3] J. Kieseler, Deep Neural Networks for particle reconstruction in high-granularity calorimeters, EP newsletter March 2020

[4] P. de Castro, T. Dorigo, INFERNO: Inference-Aware Neural Optimisation, arXiv:1806.04743, Computer Physics Communications 244 (2019) 170-179

[5] N. Simpson, L. Heinrich, neos: End-to-End-Optimised Summary Statistics for High Energy Physics, arXiv:2203.05570

[6] S. Shirobokov et al., Black-Box Optimization with Local Generative Surrogates, arXiv:2002.04632

[7] A. Edelen, Neural networks for modeling and control of particle accelerators, dissertation, Colorado State University

[8] T. Dorigo, A. Giammanco, P. Vischia (eds.) et al., Toward the End-to-End Optimization of Particle Physics Instruments with Differentiable Programming: a White Paper, arXiv:2203.13818

[9] P. Vischia, T. Dorigo, N. Gauger, A. Giammanco, G.C. Strong, G. Watts, Second MODE Workshop on Differentiable Programming for Experimental Design, website (registrations open)