System-on-Chip in Particle Physics Experiments

Many experiments in particle physics use custom-built electronics boards to perform event triggering and data readout. For many years now, those boards have used Field-Programmable Gate Arrays (FPGAs) for processing data. An FPGA is a reconfigurable integrated circuit that provides fast and massively parallel computing. FPGAs are very efficient at what they do and therefore very attractive for the experiments, but they usually require a dedicated programming language and knowledge of the internal structure of the device, which makes them difficult to use for control and monitoring. The idea of combining FPGAs with a Central Processing Unit (CPU), in order to integrate them into general-purpose computing systems and to provide a simple way of controlling and monitoring them, therefore came up very early. However, previous generations suffered from a lack of processing power, either because the CPU was emulated in the FPGA (soft-CPU) or because it was a real CPU (hard-CPU) with a rather low clock frequency.

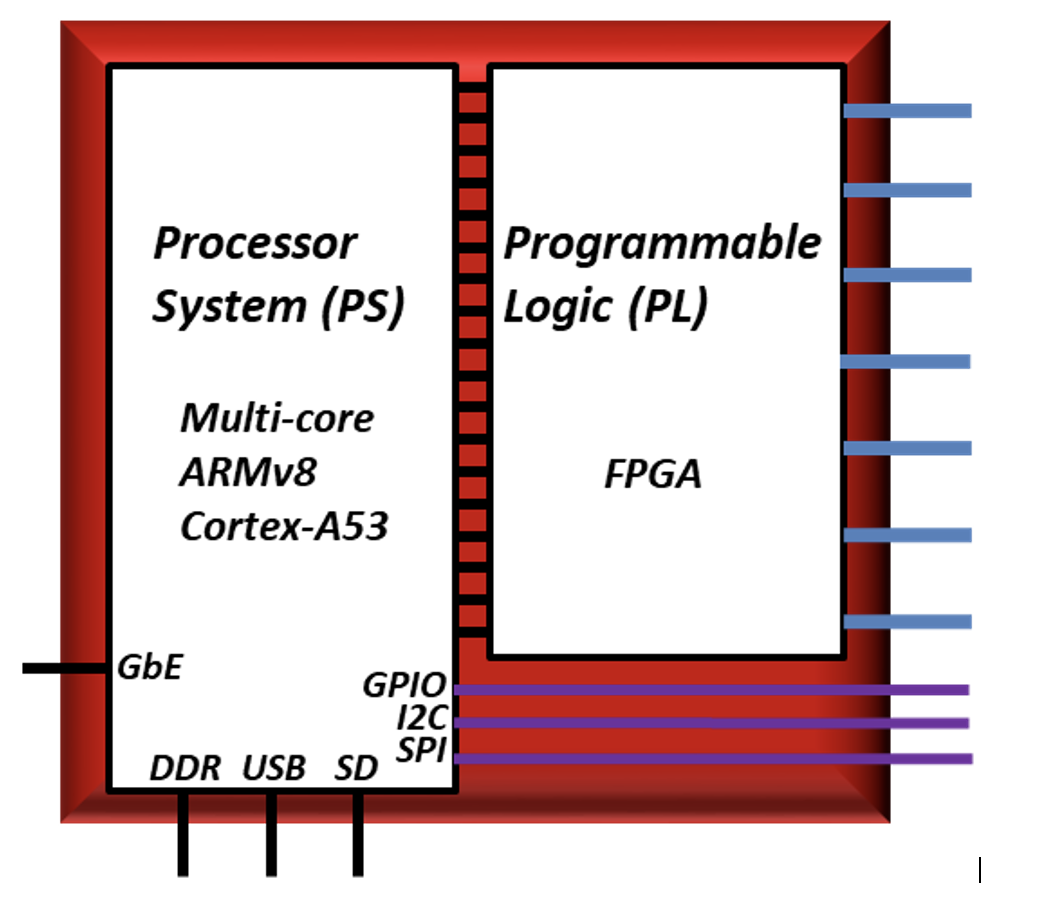

In both cases, dedicated software was required because of the scarce computing resources. This is known as embedded programming and has its own challenges. Recently, however, a new generation of chips has appeared, in which the latest FPGA technology is integrated on the same chip with a powerful processor system comprising several CPU cores. This allows general-purpose computing with a fully-fledged operating system, comparable to what we know from phones or laptops. This new generation of chip is called a System-on-Chip (SoC), because it integrates all or most of the components of a computer or electronics system into one integrated circuit. This definition of a SoC is relatively broad, but in the field of electronics for particle physics experiments, a SoC has come to mean more specifically a chip with an FPGA and a processor system.

Figure 1: System-on-Chip (SoC) = Processor System + Programmable Logic.

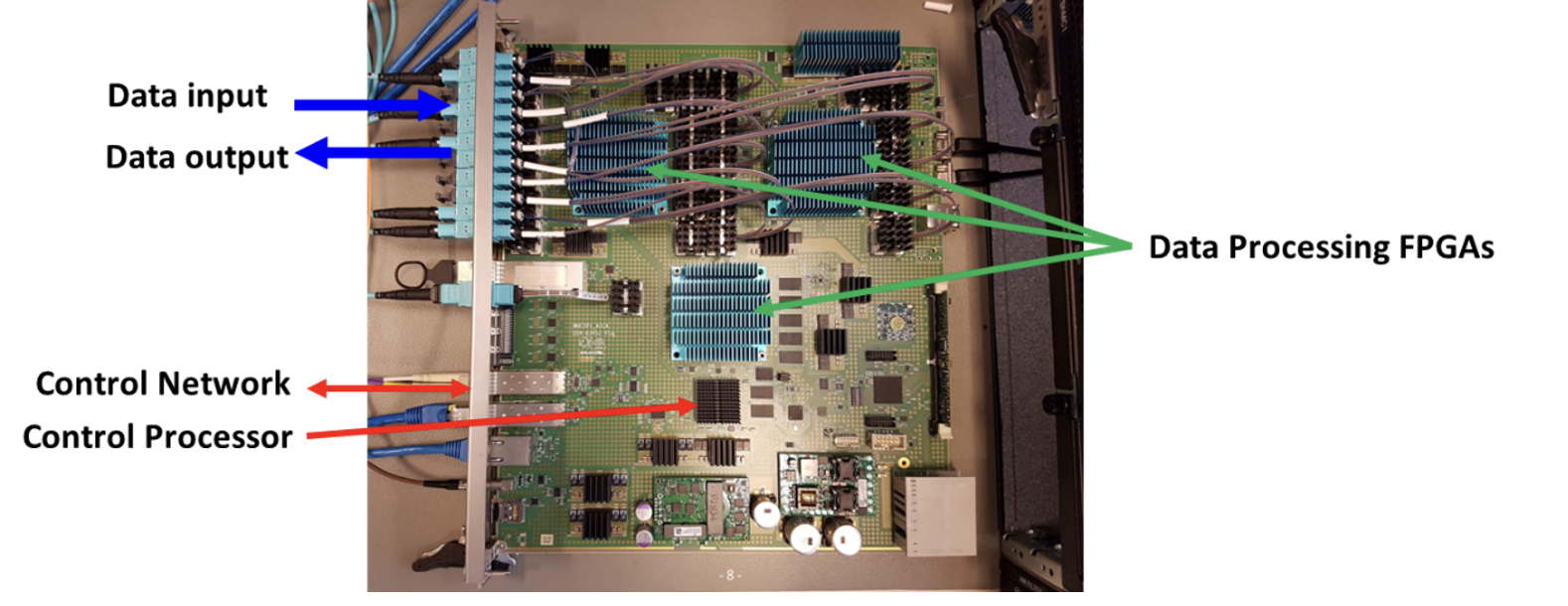

A SoC sits at the interface between software and hardware. The hardware part is the Programmable Logic, which is well known to electronics designers as the FPGA. The software part is the Processor System. This combination provides great flexibility and allows the electronics modules to be integrated directly into the control network of an experiment. Linux is used as the operating system, which eases integration with the online computing, where Linux is already deployed on the server PCs. Many electronics modules for trigger and readout in particle physics experiments have a similar structure: they have hundreds of high-speed links running at tens of Gbit/s for data input and output, several high-end FPGAs for fast and parallel data processing, and a SoC for control and monitoring of all on-board components and for connecting to the experiment’s control network. Further possible use cases of the SoC on the electronics module include interactive tools, integration with the detector control system for control of the hardware, integration into the run control system for operation control, and data processing, calibration, and monitoring. The ATLAS Muon-to-Central-Trigger-Processor Interface (MUCTPI), see Figure 2, is an example of a module that makes use of SoC technology and will be used in the upcoming upgraded trigger system of ATLAS.

Figure 2: Typical Electronics Module: the ATLAS MUCTPI using a SoC.

The SoC Workshop

A workshop on SoC was recently held at CERN, from June 7 to 11 (https://indico.cern.ch/event/996093). It was the second such workshop, following a first one held in June 2019 (https://indico.cern.ch/event/799275) with the intention of sharing knowledge and expertise on SoC. Since the first workshop was considered a success, and given the increasing interest in and growing use of SoCs in the particle physics community, a follow-up was organized, starting what is becoming a series. The workshops, which aim to bring together vendors, electronics engineers, software developers and system architects of particle physics experiments, beams and radioprotection, are organized by a committee at CERN that includes representatives from ATLAS, CMS, the EP electronics group and the Accelerator and Technology sector. In addition, an interest group “System-on-Chip at CERN”, created following the ACES 2018 workshop, holds quarterly meetings on news and updates on use cases (https://twiki.cern.ch/twiki/bin/view/SystemOnChip/WebHome).

The recent 2nd workshop took place virtually over five afternoons. On the first day, the major vendors presented their latest developments in the field. The second and third days were dedicated to overview presentations on the use of SoC in ATLAS, CMS and the Accelerator and Technology sector, followed by a number of dedicated project reports on the use of SoC for Phase-1 and Phase-2 LHC projects. On the fourth day, three tutorials gave an introduction to using continuous integration for the design and test of firmware, to a framework for failsafe booting, and to a fully automated build of all the system and user software based on continuous integration. Finally, the fifth day was dedicated to system-administration aspects, which allow the SoC-based systems to be integrated into the experiments.

Figure 3: The ATLAS L1Calo TREX Module with its SoC visible as the silver square in the upper right part of the module.

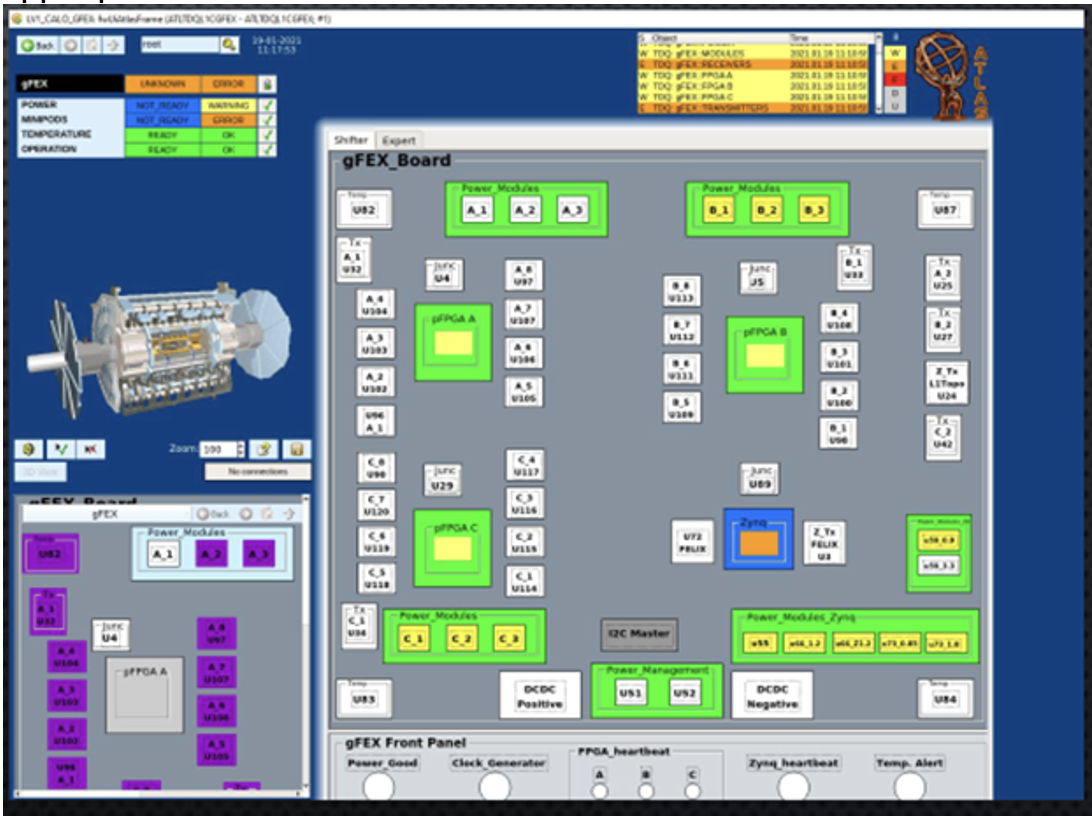

Two examples of the use of the SoC in Phase-1 upgrade projects are part of the ATLAS Level-1 Calorimeter (L1Calo) Trigger: the Tile Rear EXtension (TREX) and the global Feature EXtractor (gFEX). They are two different types of boards, but both use the SoC for similar purposes. The TREX (Figure 3) is a rear-transition module (RTM) developed to connect to the existing 9U VME front module of the L1Calo Trigger pre-processor. The gFEX is a front board built with a newer standard for modular electronics called ATCA (Advanced Telecommunications Computing Architecture), which is replacing the previous-generation VME bus. Both systems use the SoC for configuring all on-board devices, for programming all the power modules, and for reading operational data of all the hardware components, such as voltages, currents, and temperatures. The operational data are continuously collected and transferred to the detector control system using OPC UA (OPC Unified Architecture), a machine-to-machine communication protocol used for industrial automation. The data are presented in GUI panels that were developed to provide a straightforward interface between the board and its users (Figure 4).

Figure 4: A GUI panel with operational hardware data of the ATLAS Global Feature Extractor (gFEX).
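As an illustration of the kind of monitoring described above, the following minimal sketch collects temperatures, voltages, and currents on a Linux-based processor system. It assumes the sensors are exposed through the standard Linux hwmon sysfs interface, which may or may not be how the TREX and gFEX access their devices; the function name `read_hwmon` is hypothetical, and the transfer to the detector control system via OPC UA is deliberately left out.

```python
from pathlib import Path

# Units for the channel types handled in this sketch. The Linux hwmon
# sysfs ABI reports raw values in millidegrees Celsius, millivolts,
# and milliamperes, so every raw value is divided by 1000.
_UNITS = {"temp": "degC", "in": "V", "curr": "A"}


def read_hwmon(root="/sys/class/hwmon"):
    """Return {(device, channel): (value, unit)} for readable hwmon inputs.

    Hypothetical helper: `root` is a parameter so the function can be
    pointed at a test directory instead of the real sysfs tree.
    """
    readings = {}
    for chip_dir in sorted(Path(root).glob("hwmon*")):
        for input_file in sorted(chip_dir.glob("*_input")):
            channel = input_file.name[: -len("_input")]  # e.g. "temp1"
            kind = channel.rstrip("0123456789")          # e.g. "temp"
            if kind not in _UNITS:
                continue  # fans, power, etc. are skipped in this sketch
            try:
                raw = int(input_file.read_text().strip())
            except (OSError, ValueError):
                continue  # sensor currently unreadable or not numeric
            readings[(chip_dir.name, channel)] = (raw / 1000.0, _UNITS[kind])
    return readings


if __name__ == "__main__":
    for (device, channel), (value, unit) in read_hwmon().items():
        print(f"{device}/{channel}: {value} {unit}")
```

A monitoring server on the SoC could call such a function periodically and publish the resulting values, which is the step where a protocol like OPC UA would come in.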

The ATLAS MUCTPI mentioned above is another project of the Phase-1 upgrade of the ATLAS trigger system. It uses the processor system of the SoC to run a run control application of the ATLAS experiment’s TDAQ (Trigger and Data Acquisition) system. Unlike the detector control system, the TDAQ system is not concerned with hardware data such as temperatures and voltages, but rather with controlling and monitoring the functioning of the trigger and readout system. Cross-compilation is needed because the processor system of a SoC is based on a computer architecture (ARM) different from that of most of the online and offline computers used in the experiment (x86_64). A part of the TDAQ software, together with all the software describing the board-specific functionality implemented in the programmable logic and the workload FPGAs, was cross-compiled in order to run on the processor system of the SoC. This allows the MUCTPI to be integrated into the run control system of the ATLAS experiment.
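One practical consequence of cross-compilation is that a binary built on an x86_64 host must actually target the ARM processor system before it is deployed. The sketch below is not taken from the MUCTPI software. It shows one simple way to check this by reading the `e_machine` field of the ELF header, and the function names are hypothetical. Whether a given SoC runs 32-bit ARM or 64-bit AArch64 code is an assumption to verify for each platform.

```python
import struct

# e_machine values defined by the ELF specification.
ELF_MACHINES = {40: "arm", 62: "x86_64", 183: "aarch64"}


def elf_machine(header: bytes) -> str:
    """Return the target architecture named in the first 20 bytes of an ELF file."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # Byte 5 (EI_DATA) gives the byte order: 1 = little-endian, 2 = big-endian.
    if header[5] == 1:
        order = "<"
    elif header[5] == 2:
        order = ">"
    else:
        raise ValueError("unknown ELF byte order")
    # e_machine is a 16-bit field at offset 18, right after e_type.
    (machine,) = struct.unpack_from(order + "H", header, 18)
    return ELF_MACHINES.get(machine, f"unknown ({machine})")


def elf_machine_of_file(path) -> str:
    """Hypothetical convenience wrapper: inspect a binary on disk."""
    with open(path, "rb") as f:
        return elf_machine(f.read(20))
```

A build pipeline could call `elf_machine_of_file` on each produced executable and refuse to package it for the SoC if the result is `x86_64`.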

Not only the experiments but also other groups at CERN are already using or planning to use SoCs. The CERN radioprotection group is currently deploying almost 200 of its CERN RadiatiOn Monitoring Electronics (CROME) systems, which use a SoC, in order to protect people and equipment at CERN from radiation. In addition, the CROME systems are now also being used by the European Spallation Source (ESS). In the Accelerator and Technology Sector, several projects using SoCs are under way. An overview of those projects was also presented during the SoC workshop.

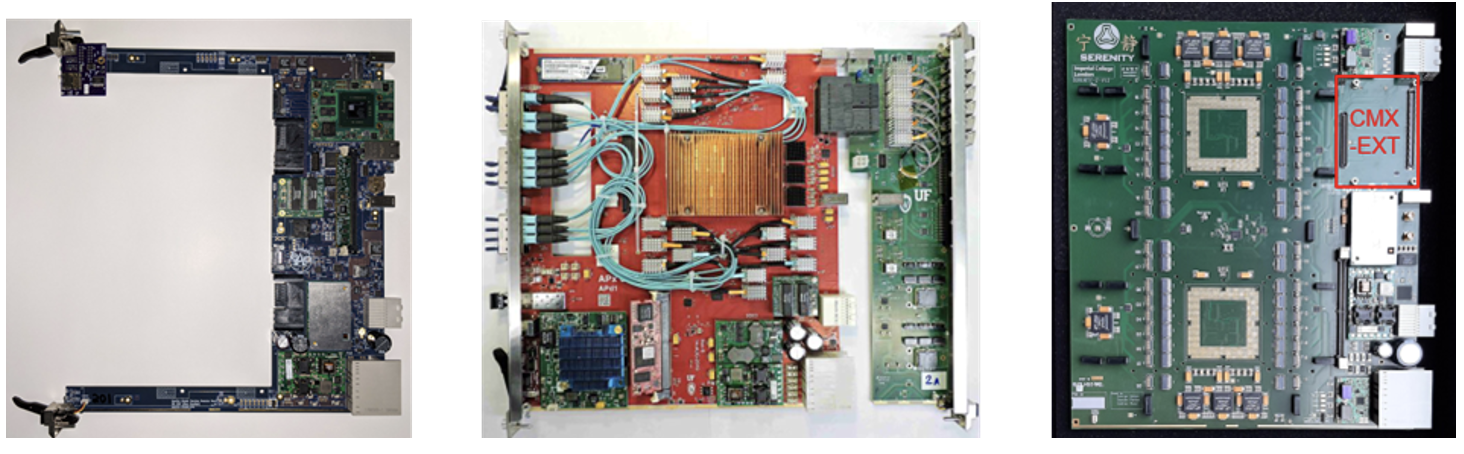

While the Phase-1 examples presented above were from the ATLAS experiment, which had decided to upgrade some of its electronics during the current long shutdown (LS2), the CMS experiment had already carried out the Phase-1 upgrade of its trigger and part of its readout electronics in the previous long shutdown (LS1), before SoCs became widely available. But the story does not stop here: the CMS and ATLAS experiments are now heavily engaged in building Phase-2 detector upgrades for the next long shutdown (LS3) starting in 2025. A number of back-end electronics projects are under way, which will be built using the ATCA standard for modular electronics. A number of ATCA platforms are being provided for the sub-detector electronics. Among those platforms are the Apollo, the APx, and the Serenity projects, which provide common platforms for one or two high-end workload FPGAs. The Apollo and APx platforms use a SoC of the latest generation on a mezzanine card, a so-called System-on-Module (SoM). Serenity started with an alternative way of control, using a COMExpress card with an Intel x86_64 processor, and now also provides a version in which a mezzanine card with a SoC is used for control.

Figure 5: Apollo service module with SoM in right-hand corner (the command module was removed); APx Module with a custom SoC (and a rear-transition module); Serenity Module with the space foreseen for a COMExpress or SoM Module.

It is foreseen that ATLAS and CMS will both integrate 1500 such boards, each with at least one SoC, in the upgraded experiments for Run 4 of the LHC. With such a large number, the administration of the SoCs in the experiments becomes crucial. Consequently, a good part of the presentations and the whole last day of the SoC workshop were dedicated to discussing system-administration aspects. The topics included providing a common operating system that satisfies the network security standards, network services, reliable booting of the systems, and the rollout of new versions of firmware and software. In collaboration with the CERN IT Linux team and CERN IT security, solutions are being developed that will provide a common framework for SoCs in ATLAS and CMS. In that sense, the current upgrade projects are acting as pioneers to study future use cases, and their experience will be used to define the requirements for the next upgrade.

Outlook

All the examples above, together with what was presented during the 2nd SoC workshop, show that SoCs are very useful elements used in a large number of current and future electronics boards for particle physics experiments. With the increasing number of developments and use cases, another workshop on SoC is foreseen to take place in a year or two from now, with the aim of once again bringing together both experts and users interested in sharing their experience, and of providing a forum to make the most of those chips.

Members of the SoC Organizing Committee:

Ralf Spiwoks (CERN), Marc Dobson (CERN), Revital Kopeliansky (Indiana University), Frans Meijers (CERN), Diana Scannicchio (University of California, Irvine), Mamta Shukla (CERN)