Data handling and processing for the FCC-ee

Introduction

The integrated Future Circular Collider study will bring about major advances in many technical and engineering fields. Operation of the FCC-ee machine at the Z pole will deliver the highest instantaneous luminosities ever achieved, with the goal of collecting the largest Z boson datasets (Tera-Z) and enabling a programme of Standard Model physics studies with unprecedented precision. The challenges of providing an adequate software ecosystem [1], adequate software tools for the optimisation of the Machine Detector Interface (MDI) region [2], trigger and data acquisition, and off-line data processing and storage are being addressed within the FCC feasibility study, which focuses on the first stage of the project, the e+e- collider FCC-ee. In this article we give an overview of the current status of these studies and of the next steps ahead. The article is largely based on recently published reviews [1,2,3,4].

Key4hep, the common software ecosystem for future experiments

The interplay between reconstruction algorithms and detector geometry, for example in particle flow clustering, implies that the detector hardware cannot be designed and developed independently of the software. Software ecosystems are at the core of any modern HEP experiment, providing seamless integration and optimisation of the software components used for data processing.

The turnkey software stack, Key4hep [5], aims to create a complete data processing ecosystem for the benefit of HEP experiments, with immediate application to future collider design and physics potential studies. The new ecosystem is being built on established solutions such as ROOT [6], Geant4 [7], DD4hep [8] and Gaudi [9], and features a new event data model EDM4hep [10] based on Podio [11].

The main components of Key4hep are:

- A data processing framework, which provides the structure for all dependent components.

- A geometry description tool; for this purpose all of the communities concerned already use DD4hep, which offers a complete detector description for simulation, reconstruction and analysis.

- A common event data model.

- A common build infrastructure.

Key4hep already provides enough components and modules to enable the migration of existing frameworks based on Gaudi, such as FCCSW, the software ecosystem used for the studies described in the FCC CDR, published in 2019.

In Key4hep, the event data model EDM4hep [10] defines a common language underlying the inter-operation of the various components. The core component k4FWCore provides the interfaces and the data service, and is largely based on the corresponding components in FCCSW. Together, EDM4hep and k4FWCore have enabled the development and integration of data processing components in the form of Gaudi algorithms and tasks. For example, Delphes-based [12] fast simulation workflows, used intensively during the current phases of FCC-ee physics performance studies, are provided by the k4SimDelphes module, which was derived from a similar component of FCCSW. k4SimDelphes provides a Key4hep interface to Delphes both as stand-alone applications, which run on any of the input formats supported by Delphes, and as a Gaudi algorithm.
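The role of a shared event data model can be illustrated with a minimal Python sketch. It is not EDM4hep, k4FWCore or Gaudi code: the classes `SimHit` and `Cluster`, the collection names and the functions `simulate`, `cluster` and `run_event` are all hypothetical, chosen only to show how independent components can cooperate when they agree on the data structures they read and write.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimHit:
    """Hypothetical simulated energy deposit (not an EDM4hep class)."""
    cell_id: int
    energy_gev: float


@dataclass
class Cluster:
    """Hypothetical reconstructed object built from hits."""
    energy_gev: float
    n_hits: int


# The event store maps collection names to lists of data-model objects.
EventStore = Dict[str, list]


def simulate(store: EventStore) -> None:
    """Stand-in for a simulation step: writes a 'SimHits' collection."""
    store["SimHits"] = [SimHit(1, 0.5), SimHit(2, 1.5), SimHit(3, 0.02)]


def cluster(store: EventStore, threshold_gev: float = 0.1) -> None:
    """Stand-in for reconstruction: reads 'SimHits', writes 'Clusters'."""
    hits = [h for h in store["SimHits"] if h.energy_gev > threshold_gev]
    store["Clusters"] = [Cluster(sum(h.energy_gev for h in hits), len(hits))]


def run_event(algorithms: List[Callable[[EventStore], None]]) -> EventStore:
    """Run the algorithms in order on a fresh, empty event store."""
    store: EventStore = {}
    for algorithm in algorithms:
        algorithm(store)
    return store


event = run_event([simulate, cluster])
```

Because `cluster` depends only on the agreed `SimHits` collection, the `simulate` step could be replaced by any other producer of that collection without changing the reconstruction code.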

As mentioned above, the FCC software (FCCSW) has much in common with Key4hep, since both are based on Gaudi [9]. The main differences are the event data model and the structure governing the components, which is flat in Key4hep, whereas FCCSW features a hierarchical structure with sub-components organised in categories. Moving FCCSW to Key4hep means using the common components available from Key4hep as much as possible, while FCC-specific components will remain under FCC responsibility.

Further completing the ecosystem to match the needs of the community for the FCC Feasibility Study consists, firstly, of identifying the missing pieces from the findings of the other FCC working groups and, secondly, of creating the appropriate conditions for the provision of the required software modules. This applies in particular to the Physics Performance and Detector Concepts groups, where the current priority is to support detector concepts with the required level of simulation and reconstruction. These groups need an infrastructure in which sub-detector components can easily be interchanged, so that multiple solutions can be investigated broadly. The DD4hep and Gaudi frameworks chosen for detector description and workflow execution support this goal through a plugin system which allows both sub-detectors and workflow components to be exchanged at runtime. Finally, the complete ecosystem will include a set of interfaces to external packages, which are typically developed as part of general purpose R&D programmes. One of the main challenges affecting the integration process is ensuring that the event data model contains all of the information required.
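The idea of exchanging sub-detectors at runtime through a plugin mechanism can be sketched generically in Python. This is not the DD4hep or Gaudi plugin API: the registry, the `register` decorator, the component names and the dictionaries returned by the factories are all hypothetical and serve only to illustrate selecting components by name from a configuration.

```python
from typing import Callable, Dict, List

# Registry of sub-detector factories, keyed by a configuration name.
_SUBDETECTOR_PLUGINS: Dict[str, Callable[[], dict]] = {}


def register(name: str):
    """Decorator that records a factory under the given plugin name."""
    def decorator(factory: Callable[[], dict]) -> Callable[[], dict]:
        _SUBDETECTOR_PLUGINS[name] = factory
        return factory
    return decorator


@register("drift_chamber_tracker")
def build_drift_chamber() -> dict:
    return {"role": "tracker", "technology": "drift chamber"}


@register("silicon_tracker")
def build_silicon_tracker() -> dict:
    return {"role": "tracker", "technology": "silicon"}


@register("lar_calorimeter")
def build_lar_calorimeter() -> dict:
    return {"role": "calorimeter", "technology": "liquid argon"}


def build_detector(component_names: List[str]) -> List[dict]:
    """Assemble a detector from plugin names chosen at runtime."""
    unknown = [n for n in component_names if n not in _SUBDETECTOR_PLUGINS]
    if unknown:
        raise KeyError(f"No plugin registered for: {unknown}")
    return [_SUBDETECTOR_PLUGINS[n]() for n in component_names]


# Two configurations that differ only in the tracker plugin.
detector_a = build_detector(["drift_chamber_tracker", "lar_calorimeter"])
detector_b = build_detector(["silicon_tracker", "lar_calorimeter"])
```

In this sketch, changing the tracker amounts to changing one string in the configuration list; the calorimeter code is untouched.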

The status of the FCC software with respect to simulation and reconstruction was one of the main topics of the 5th FCC Physics Workshop, held in February 2022. The Software & Computing, Physics Performance and Detector Concepts groups organised a joint session to take stock of what is available and to give guidelines on how to continue the required software developments. The session included talks on the status of the software for CLD (the FCC-ee version of the CLIC detector) and for the IDEA detector concept (mostly developed by Italian groups), and on the lessons learned in developing the software for a liquid argon calorimeter for FCC-ee. The latter has been combined with a simplified version of the IDEA tracker, demonstrating the potential of the sub-detector interchange idea which FCC would like to streamline.

The Key4hep project seems to be on the right path to serve the needs of the participating projects well, with an appropriate balance between common infrastructure and project-specific developments, and a clear path to promote the latter to the common repositories. The challenge ahead is to maintain the current momentum and to create the conditions to attract and support people who will develop and implement their tools in the Key4hep context, thereby creating a critical mass of the best tools to cover most of the needs of HEP experiments. This task includes the provision of sufficiently extensive documentation, training and adequate advertising. Ultimately, success will depend on the ability to guarantee an adequate workforce and funding.

The FCC Study is building a framework which will facilitate the design and implementation of on- and off-line software systems as well as accelerator and experiment components. This ecosystem will be of use to the whole of the HEP community, not just for FCC, and it will facilitate collaboration across the world. Below we will briefly discuss the key components of the new framework.

Machine Detector Interface

The interaction region design for FCC is particularly challenging and requires a combined optimisation of accelerator, engineering and experiment aspects. The interplay concerns many aspects, including:

- the beam pipe aperture, which has to be sufficient to allow the beam to pass in all circumstances (injection, collision, etc.) while allowing the detector to be as close as possible to the interaction region

- heating of the accelerator and experiment structures from higher order mode losses (caused by the interaction of the beam’s intense electromagnetic fields with surrounding components)

- beam losses and synchrotron radiation

The existing accelerator codes are the result of many years of development and have been validated against various accelerator facilities. Often two or more alternative codes exist for each of the different operational and experimental aspects. The challenge in these cases is to have full control of the codes, which are often not available in version-controlled repositories. A toolset known as MDISim is being developed which combines the existing standard tools MAD-X, ROOT and Geant4, and will allow:

- Reading of a machine lattice description, generating Twiss, survey and geometry files

- Visualisation of the geometry and analytic estimates including calculation of synchrotron radiation

- Detailed simulation of the passage of particles through materials.
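As an illustration of the kind of analytic synchrotron radiation estimate mentioned above, the sketch below evaluates the standard textbook formulas for an electron on a circular orbit of constant bending radius: the energy loss per turn, U0 = (4π/3) r_e E^4 / ((m c^2)^3 ρ), and the critical photon energy, E_c = (3/2) ħc γ^3 / ρ. It is not MDISim code. The function names are hypothetical, and the 10 km bending radius used in the example is an assumed round number for illustration, not a value taken from this article.

```python
import math

ELECTRON_MASS_GEV = 0.51099895e-3          # electron rest energy
CLASSICAL_ELECTRON_RADIUS_M = 2.8179403e-15
HBAR_C_GEV_M = 1.973269804e-16             # hbar * c in GeV * m


def energy_loss_per_turn_gev(beam_energy_gev: float, bending_radius_m: float) -> float:
    """Energy radiated per turn by one electron, uniform bending radius."""
    c_gamma = (4.0 * math.pi / 3.0) * CLASSICAL_ELECTRON_RADIUS_M / ELECTRON_MASS_GEV**3
    return c_gamma * beam_energy_gev**4 / bending_radius_m


def critical_energy_kev(beam_energy_gev: float, bending_radius_m: float) -> float:
    """Critical photon energy of the synchrotron radiation spectrum."""
    gamma = beam_energy_gev / ELECTRON_MASS_GEV
    critical_gev = 1.5 * HBAR_C_GEV_M * gamma**3 / bending_radius_m
    return critical_gev * 1.0e6  # convert GeV to keV


# Example: Z-pole beam energy with an ASSUMED bending radius of 10 km.
beam_energy = 45.6        # GeV, half the Z mass
assumed_radius = 1.0e4    # m, illustrative assumption only

u0 = energy_loss_per_turn_gev(beam_energy, assumed_radius)
e_crit = critical_energy_kev(beam_energy, assumed_radius)
print(f"U0  = {u0 * 1e3:.1f} MeV per turn")
print(f"E_c = {e_crit:.1f} keV")
```

With these inputs the loss per turn comes out at a few tens of MeV. The strong E^4 dependence is why the same estimate gives very different results at the higher FCC-ee beam energies.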

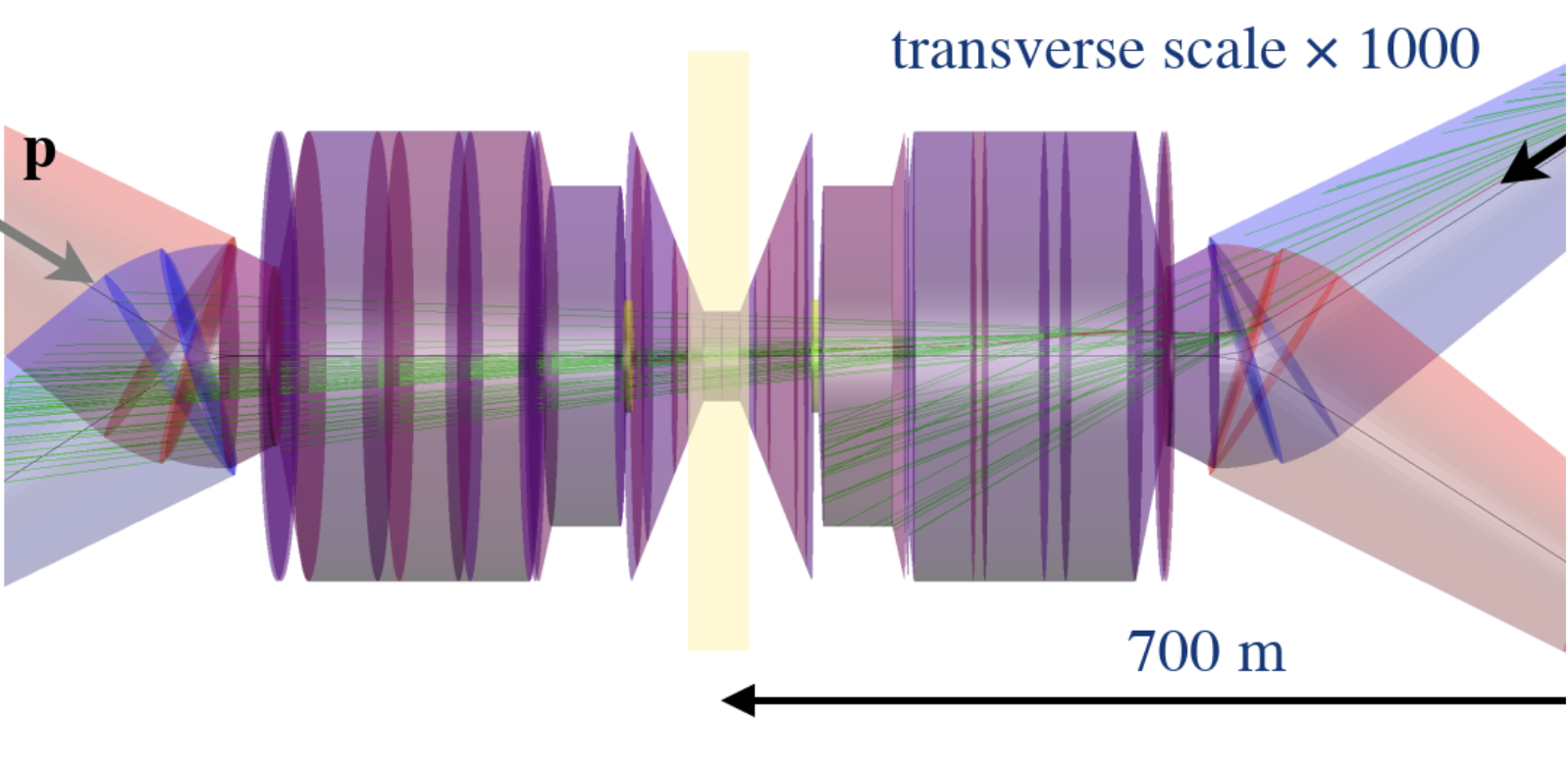

A typical example of the integration of accelerator and experiment codes. The figure shows results for the FCC-hh interaction region displayed with ROOT: MDISim interfaces the machine layout generated by MAD-X to Geant4, which tracks the protons through the interaction region and generates synchrotron radiation photons.

The objective of these activities is to facilitate access and configuration and, when relevant, provide clear and solid ways of combining the results. For the integration of the MDI codes with experiment software, the main challenge is to provide the relevant Gaudi components to enable the interplay between accelerator codes and the data processing chain based on FCCSW.

The description of the geometry and materials of accelerator and detector elements is a crucial ingredient for most of the codes concerned. Having a coherent description, based on the same source of information is highly desirable for several reasons, not least to reduce the risk of errors due to several implementations of the same item. Detector components are designed using an open source toolset introducing the compact detector description concept, provided through minimalistic XML formats, to allow composition of basic sub-detector elements to form complex detector structures. Accelerator components are developed using CAD engineering tools which allow working in 3D with the solid features which are essential for the task. To achieve the objective of a single geometry source (in DD4hep) for all the components, a solid conversion solution to and from CAD is required.

On-line

Trigger and data acquisition systems sustaining comparable data throughput rates to those of FCC-ee will already have been operational at the HL-LHC. The baseline assumption for future lepton collider experiments is that they will rely on a software-only triggering system (triggerless selection) with ~100% efficiency and built-in redundancy. It is also assumed that all the data for interesting physics can be digitally stored and that the beam background will not affect the DAQ. The possibility of using an ultra-light tracking detector, such as a time projection chamber (TPC), in a triggerless system requires the management of the very large out-of-bunch pile-up and its operation in continuous mode. Complete timing information of detector hits is expected to be fully exploited in the TDAQ of FCC-ee experiments because it will be needed for calibration, reconstruction and in searches for new exotic particles.

On the hardware side, R&D activities are leading to improvements in sensor performance, reductions in material budget, lower-power electronics and wireless data transmission.

As long as the execution of the software-based on-line selection algorithm remains within the processing time budget, it will be possible to increase its complexity by combining information from various systems. It will be important to be able to identify and track long-lived particles (LLPs), and this may require additional detectors to be integrated with the TDAQ. A readout segmentation that lends itself to LLP triggering may therefore be required.

Current R&D activity is focusing on the deployment of machine learning technologies which have a more limited computing footprint in both off-the-shelf commercial processors and FPGAs. Some examples include: front-end data compression, particle identification with multivariate classifiers, pattern recognition, tracking and reconstruction with neural networks and regression for improved resolution. It is widely anticipated that some of these innovations will soon find their way into TDAQ systems.

Off-line

The off-line workflow typically comprises collision data reconstruction and analysis, and Monte Carlo simulation. The computing model for FCC is based on the FCCSW framework; data at any level are described by the data structures provided by EDM4hep [10].

In spite of the large data volumes, the requirements for storage and computing resources are not expected to pose problems during the operation of the machine. For the Z run the raw data storage requirement is expected to be 3-6 EB, corresponding to 15-30 PB for analysis data (AOD), which is of the same order as expected at the HL-LHC. Given the timescale for FCC-ee, there is the possibility of benefiting from all the advances, developments and findings of HL-LHC, including the resource sustainability aspects.
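The order of magnitude of these volumes follows from multiplying a number of recorded events by a per-event size. The short sketch below makes that arithmetic explicit. The event count and the per-event sizes are assumptions chosen here so that the totals reproduce the ranges quoted above; they are not values stated in this article, and decimal units (1 PB = 10^15 bytes, 1 EB = 10^18 bytes) are assumed.

```python
PETABYTE = 10**15  # bytes, decimal convention (assumption)
EXABYTE = 10**18   # bytes, decimal convention (assumption)


def total_volume_bytes(n_events: int, event_size_bytes: int) -> int:
    """Total storage for n_events events of a fixed size."""
    return n_events * event_size_bytes


# ASSUMED inputs, chosen to reproduce the quoted ranges.
n_events = 3 * 10**12                    # recorded Z-run events (assumption)
raw_sizes = (1 * 10**6, 2 * 10**6)       # 1-2 MB per raw event (assumption)
aod_sizes = (5 * 10**3, 10 * 10**3)      # 5-10 kB per AOD event (assumption)

raw_eb = tuple(total_volume_bytes(n_events, s) / EXABYTE for s in raw_sizes)
aod_pb = tuple(total_volume_bytes(n_events, s) / PETABYTE for s in aod_sizes)

print(f"raw: {raw_eb[0]:.0f}-{raw_eb[1]:.0f} EB")
print(f"AOD: {aod_pb[0]:.0f}-{aod_pb[1]:.0f} PB")
```

Under these assumptions the analysis format is a factor of 200 smaller than the raw data, which is what makes the analysis-level volume comparable to that expected at the HL-LHC.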

The preparation of the FCC Feasibility Study Report for the next European Strategy Update is potentially challenging in terms of computing resources. It will require the experiment groups to develop ways to use and manage the available data samples optimally, de facto increasing their statistical power and reducing the effective resource needs.

References

[1] G Ganis, C Helsens, V Volkl, “Key4hep, a framework for future HEP experiments and its use in FCC”, Eur. Phys. J. Plus 137 no. 1 (2022) 149, https://doi.org/10.1140/epjp/s13360-021-02213-1

[2] M Boscolo, H Burkhardt, G Ganis, C Helsens, “Accelerator-related codes and their interplay with the experiment's software”, Eur. Phys. J. Plus 137 no. 1 (2021) 28, https://doi.org/10.1140/epjp/s13360-021-02212-2

[3] R Brenner, C Leonidopoulos, “Online computing challenges: detector and readout requirements”, Eur. Phys. J. Plus 136 no. 12 (2021) 1198, https://doi.org/10.1140/epjp/s13360-021-02155-8

[4] G Ganis, C Helsens, “Offline Computing resources for FCC-ee and related challenges”, Eur. Phys. J. Plus 137 no. 1 (2021) 30, https://doi.org/10.1140/epjp/s13360-021-02189-y

[5] See, for example, P Fernandez et al., “Key4hep: Status and Plans”, EPJ Web of Conferences 251, 03025 (2021), https://doi.org/10.1051/epjconf/202125103025

[6] R Brun et al., “ROOT: A C++ framework for petabyte data storage, statistical analysis and visualization”, Comput.Phys.Commun. 182 (2011) 1384-1385, https://doi.org/10.1016/j.cpc.2011.02.008

[7] See, for example, J Allison et al., “Recent developments in Geant4”, NIM A 835 (2016) 186-225, https://doi.org/10.1016/j.nima.2016.06.125

[8] M Frank, F Gaede, M Petric, A Sailer, “AIDASoft/DD4hep”, 2018, http://dd4hep.cern.ch/, https://doi.org/10.5281/zenodo.592244

[9] G Barrand et al., “GAUDI - A software architecture and framework for building HEP data processing applications”, Comput. Phys. Commun. 140 (2001) 45-55

[10] F Gaede et al., “EDM4hep and podio - The event data model of the Key4hep project and its implementation”, EPJ Web of Conferences 251, 03026 (2021), https://doi.org/10.1051/epjconf/202125103026

[11] Podio, https://github.com/AIDASoft/podio

[12] The Delphes 3 Collaboration, J de Favereau et al., “DELPHES 3: a modular framework for fast simulation of a generic collider experiment”, JHEP 02 (2014) 057, https://doi.org/10.1007/JHEP02%282014%29057