NextGen Triggers: Pioneering R&D for HL-LHC Data Acquisition and Event Processing

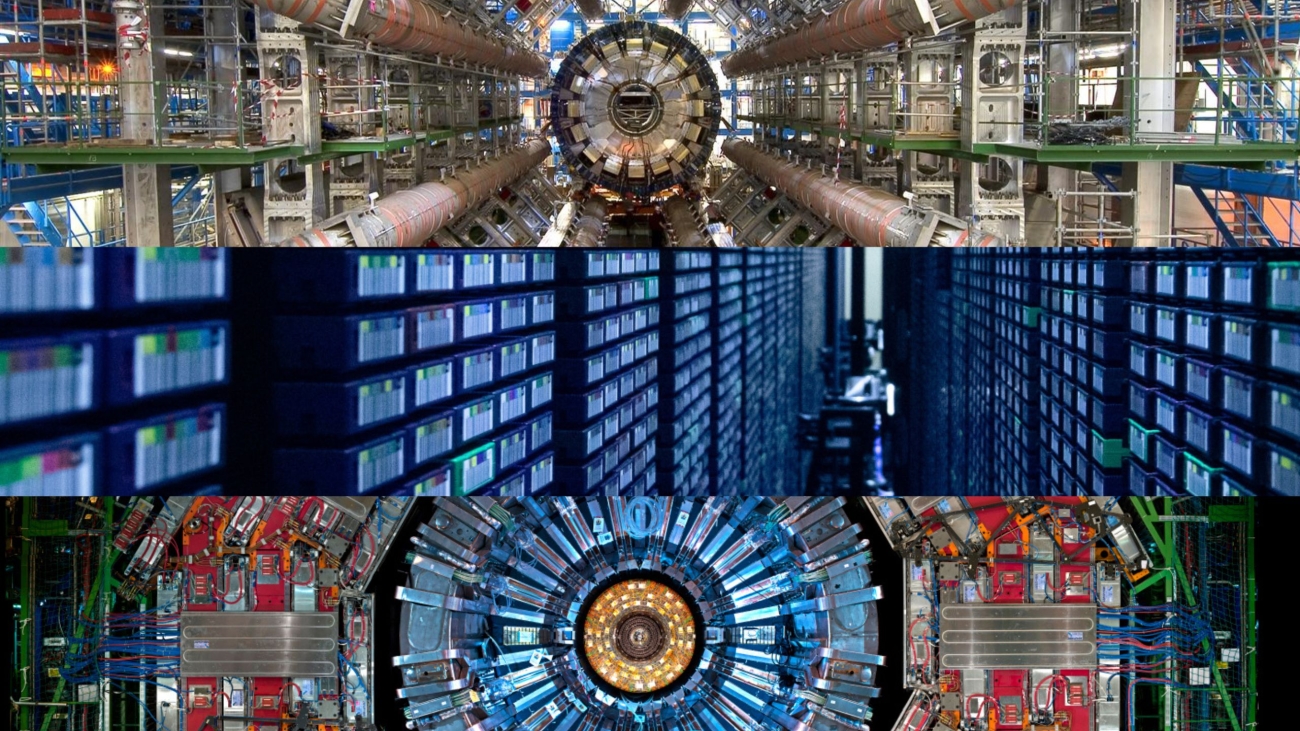

The High-Luminosity LHC upgrade will pose an unprecedented challenge for the data acquisition and online event processing capabilities of ATLAS and CMS. Both experiments are currently undergoing major upgrades to prepare their detectors for the expected threefold increase in the number of pile-up interactions per event. The upgraded detector subsystems will feature increased granularity and novel detector technologies, including precision timing capabilities. They will raise the data rates that the data acquisition systems must handle, and that must be processed online for event selection, by up to an order of magnitude. The goal of the NextGen Triggers (NGT) project, generously supported by the Eric and Wendy Schmidt Fund for Strategic Innovation, is to foster R&D on future data acquisition strategies, on innovative online computing technologies and on novel machine learning approaches to trigger event selection. These novel online data processing technologies and event selection strategies will allow ATLAS and CMS to fully exploit their physics potential in the High-Luminosity LHC era.

The foundations of the NextGen Triggers project were laid in 2022, when a group of private donors, including former Google CEO Eric Schmidt, visited CERN and the ATLAS experiment. They expressed their eagerness to contribute to R&D addressing the online computing challenge and to promote the necessary technological innovation in the coming years. This first visit evolved into an agreement with the Eric and Wendy Schmidt Fund for Strategic Innovation, approved by the CERN Council in October 2023, to fund the Next-Generation Triggers (NextGen) project. The agreement outlines a five-year programme of innovation that extends the ATLAS and CMS baseline upgrade projects with the comprehensive R&D required to develop new technologies and reach these ambitious goals.

The project is structured into four work packages, each covering a specific aspect of the programme. Work package 1 covers common R&D projects that extend beyond the direct needs of ATLAS and CMS. It includes R&D on computing and Machine Learning technologies, such as HLS4ML to bring AI algorithms onto FPGAs, hardware-aware Neural Network optimisation, and Quantum Tensor Networks for the simulation of quantum many-body systems. The work package also covers theoretical work on Monte Carlo generators and on higher-order calculations, in particular for exotic models of new physics, as well as R&D on technologies for integrating accelerators into the experiments' software frameworks. The funding available for this work package will also be used to install state-of-the-art Machine Learning training hardware and High-Performance Computing infrastructure for theoretical calculations at CERN.

Work package 2 covers the R&D programme for the ATLAS experiment. The goal is to develop novel Machine Learning algorithms for deployment on the FPGA boards of the new Level-0 hardware trigger system. These algorithms aim to exploit the full-granularity information of the ATLAS calorimeters to boost the performance of jet reconstruction and identification. Machine Learning approaches will also be used to reconstruct track segments in the Level-0 system from the detailed detector information of the Muon Spectrometer in regions with reduced RPC coverage. Also within the scope of work package 2 is the optimisation of the ATLAS readout system, to ensure that the additional data can be shipped to the Event Filter computing farm for further processing.

The main computing bottleneck for event reconstruction and selection in the Event Filter farm is charged-particle track reconstruction for the Phase-II ATLAS Inner Tracker and for the Muon Spectrometer. Work package 2 will foster R&D on ACTS-based tracking software infrastructure, both for CPU-based reconstruction and for accelerators in the ATLAS farm, in particular GPUs but also FPGAs. Novel Machine Learning approaches, such as Graph Neural Network based track finding strategies, will be studied to reconstruct the complex high pile-up events in the ATLAS Phase-II Inner Tracker and in the Muon Spectrometer. The final aim of work package 2 is to exploit the physics potential of the novel Level-0 and Event Filter reconstruction techniques to develop new trigger event selection strategies that will boost the sensitivity of ATLAS to exotic, non-standard and complex final states.

Work package 3 focuses on enhancing the CMS Online Selection and Data Scouting workflows for the HL-LHC through ambitious R&D for the Level-1 Trigger (L1T) and the High-Level Trigger (HLT). For the L1T system, machine learning inference will be deployed in FPGA firmware, on AI Engines and on GPUs to improve physics-object reconstruction (such as vertexing, particle flow and jet tagging) and to implement supervised and unsupervised event topology selections. On the one hand, improved algorithms that fit within the fixed L1T latency can expand the physics acceptance of the standard data-taking workflow, which has a maximum output rate of 750 kHz. On the other hand, ML algorithms can be used for Data Scouting to enhance the reconstruction performance for low-energy objects with high background and to power complex combinatorial calculations for signatures with multiple objects. To profit from these opportunities, the core of the project is to develop the prototype hardware architecture and the algorithms of a real-time analysis facility that would operate at 40 MHz and analyse all collision events. A test stand will be set up with FPGAs, servers hosting different accelerators and high-speed optical networking, connected to new prototype L1 trigger boards.
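The two rates quoted in the paragraph on work package 3 show why a 40 MHz scouting facility is such a step beyond the standard workflow. The short sketch below is only a back-of-the-envelope illustration: it takes the 40 MHz collision rate and the 750 kHz maximum L1T output rate as exact round numbers (a simplifying assumption), and the constant and function names are hypothetical, chosen for this example.

```python
# Back-of-the-envelope rate comparison for the CMS Level-1 Trigger (L1T).
# Assumption: both rates are the round numbers quoted in the article.
COLLISION_RATE_HZ = 40e6    # rate at which a 40 MHz scouting facility would analyse events
L1T_MAX_OUTPUT_HZ = 750e3   # maximum output rate of the standard L1T workflow


def rate_reduction(input_hz: float, output_hz: float) -> float:
    """Return the factor by which a trigger stage reduces the event rate."""
    return input_hz / output_hz


def retained_fraction(input_hz: float, output_hz: float) -> float:
    """Return the fraction of input events kept by a trigger stage."""
    return output_hz / input_hz


reduction = rate_reduction(COLLISION_RATE_HZ, L1T_MAX_OUTPUT_HZ)
fraction = retained_fraction(COLLISION_RATE_HZ, L1T_MAX_OUTPUT_HZ)

print(f"L1T rate reduction: ~{reduction:.0f}x")
print(f"Fraction of collision events kept by the L1T: {fraction:.3%}")
```

With these numbers the standard L1T workflow reduces the rate by a factor of about 53, so fewer than 2% of collision events are kept. A facility that analyses every event at 40 MHz works with the remaining ~98% as well.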

For the High-Level Trigger system, the project aims to enable the full reconstruction and real-time analysis of all L1-accepted collision events. This goal requires a multi-pronged approach: developing calibration procedures that compute the best calibrations for the online reconstruction in real time; exploring various technologies to enhance the efficiency and resolution of the object reconstruction, bringing its quality in line with that of the offline reconstruction; and exploiting novel data compression techniques to reduce on-disk storage requirements so that events can be saved at the full input rate. By combining innovative, high-performance algorithms optimised for efficient execution on heterogeneous hardware with the strengths of ML in pattern recognition, the project aims to develop robust, production-grade solutions that meet the stringent requirements of the CMS experiment. The goal is real-time, offline-like event reconstruction of all L1T-selected events without additional filtering before data storage.

The fourth work package of the NextGen Triggers project will provide training opportunities, including dedicated lectures and seminars on data acquisition, online data processing and computing technologies, and Machine Learning approaches to event reconstruction and trigger selection. These opportunities will initially be developed with the training needs of the NextGen Triggers project in mind, but will be opened to the wider CERN community. Such training events may extend the established CERN programme of schools with a dedicated school focussing on the technological fields covered by the NextGen Triggers project. The work package will also cover the project's requirements for outreach and knowledge transfer.

Subsequent articles in the EP newsletter, dedicated to the individual work packages of the NextGen Triggers project, will provide more detailed insights and first results.

Acknowledgement: The authors of this article would like to acknowledge the WP leaders of the NextGen project for their valuable support and feedback.