The FELIX Project

If you are working on one of the ATLAS, DUNE or NA62 experiments, you have surely taken part in meetings in which people referred to “FELIX” as if it were a widely known brand. You probably guessed, after some time, that they were referring neither to the 1919 cartoon cat nor to the latest social media platform; but what is it?

FELIX stands for Front-End LInk eXchange. It was devised within the ATLAS collaboration as a component of the Data AcQuisition (DAQ) chain. One side connects through optical links to the on- or near-detector electronics and to the timing and trigger distribution system. The other side connects through standard switched network technologies to the rest of the DAQ as well as to the Detector Control System (DCS).

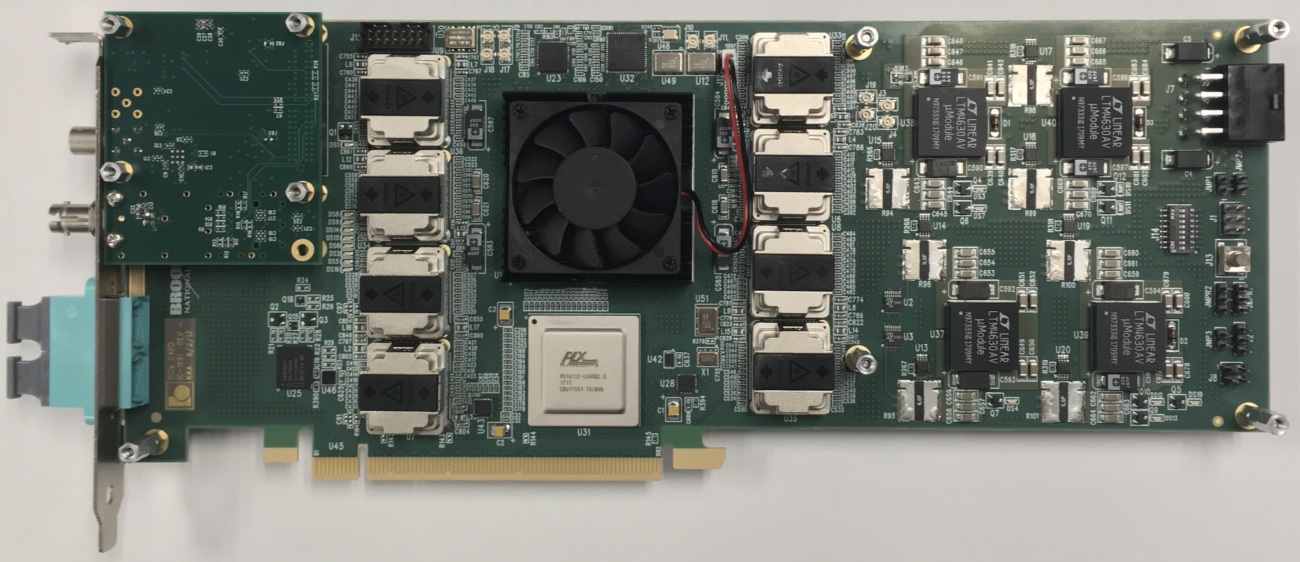

Figure 1: Simple sketch of the FELIX concept.

As the name indicates and as illustrated in Figure 1, FELIX converts the data flow on custom links into widely used commercial data transfer technologies (e.g. Ethernet) that servers and software can easily manage. The custom links exist because of specific requirements of the detector electronics (e.g. radiation hardness) or the need for time-synchronous signal transmission (e.g. timing, trigger and control).

In the past, High Energy Physics (HEP) played a leading role, and was often alone, in developing custom digital electronics for detector readout. The rise of computers, networks and compute accelerators in all socio-economic sectors pushed large industries to develop many solutions that HEP can now use to achieve its research goals. Today it is very difficult for a semi-academic environment to keep up with the pace of development and the component pricing that large industrial players can achieve. Thus, reducing custom electronics components, where suitable, also reduces the risk of running into obsolescence in the trigger, DAQ and DCS areas, which evolve throughout the lifetime of an experiment.

In essence, the main rationale for FELIX is that introducing standard commercial components early in the DAQ chain minimizes the custom hardware development effort and lets experiments profit from fast-evolving information and communication technologies.

The initial concept was formulated as early as 2012 and progressively attracted an international pool of engineers and physicists, leading to the formation of the ATLAS FELIX team. The goal was to implement a first version of the FELIX system for the new ATLAS detectors of the Phase I upgrade and then switch the whole experiment to FELIX for the Phase II upgrade.

After some initial brainstorming, it turned out that the most convenient way of implementing FELIX (see Figure 1) was to exploit the high-speed interconnects of modern servers, such as PCIe, to connect a peripheral card managing the optical detector links with the server’s memory and CPU resources. The server moves data in both directions between this peripheral card, over PCIe, and the other network endpoints (DAQ and DCS), through its network interface. To carry out its functions, i.e. driving the optical links and checking, encoding and decoding data, the peripheral card hosts an FPGA.

The need to develop a custom PCIe card was driven by the lack of commercial cards supporting high link densities. More and more PCIe devices carry FPGAs on board but, unfortunately, they provide only a few very high-speed links (25-100 Gb/s), which do not match the needs of detectors with many medium-speed links (1-10 Gb/s) that can be heavily multiplexed: a single FELIX card typically operates 24 bi-directional detector links.

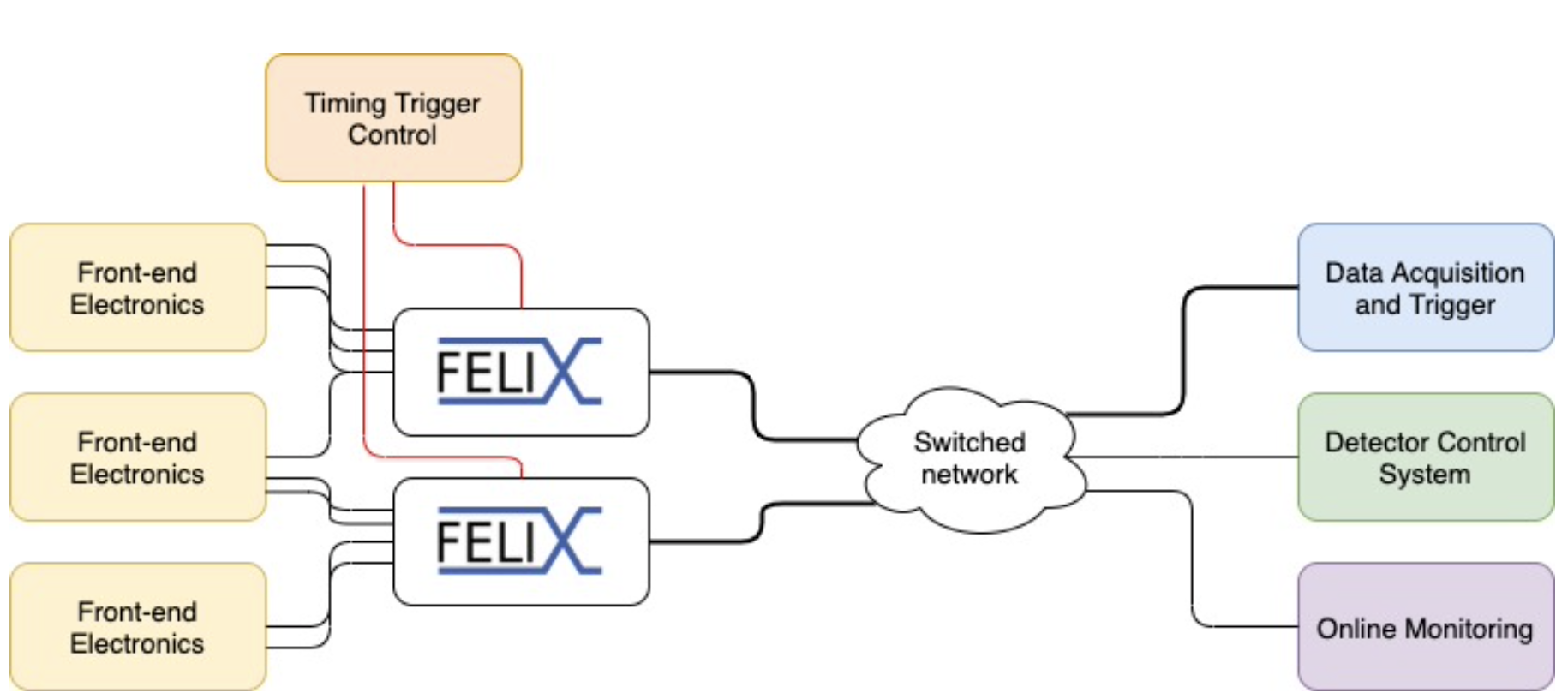

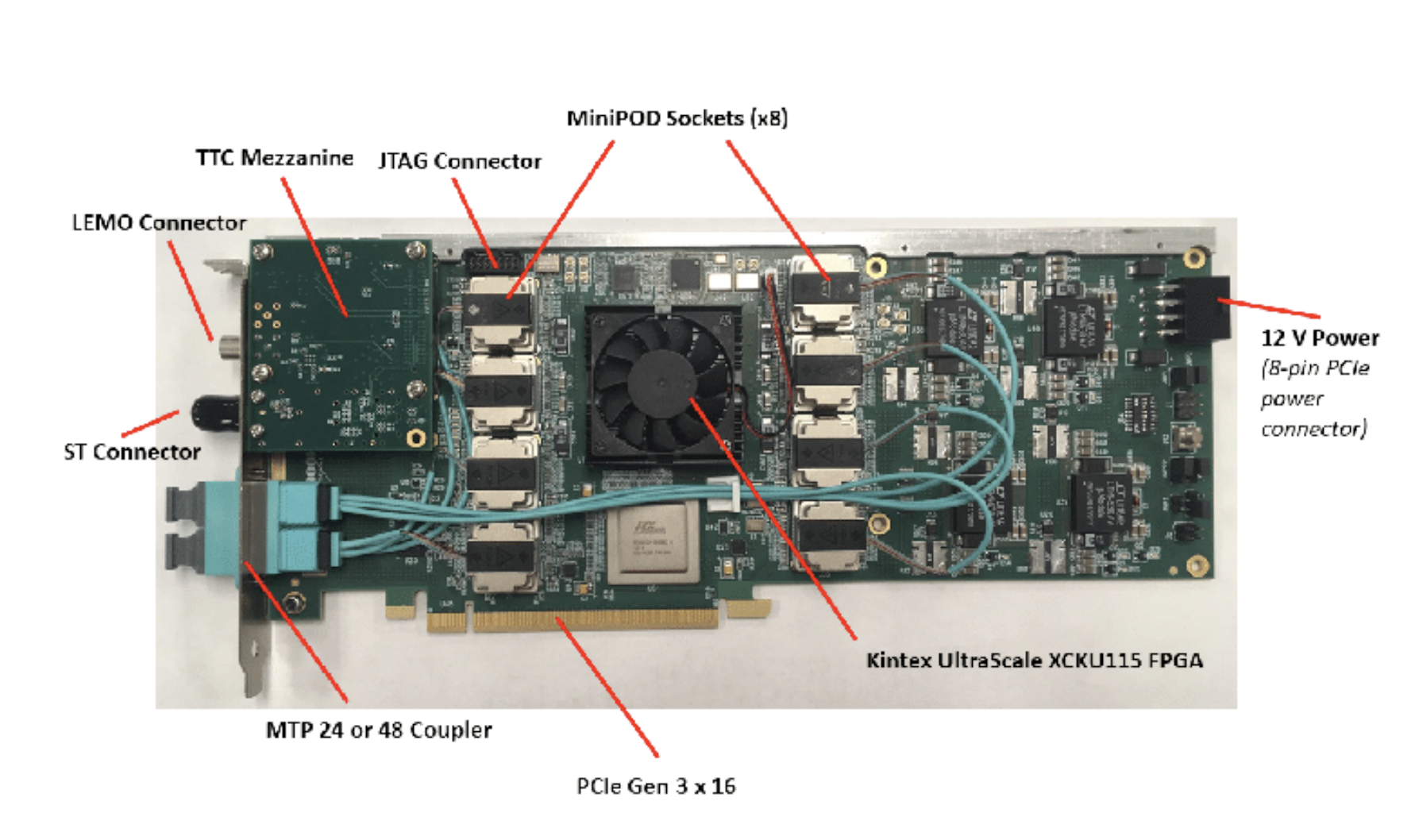

In its present implementation, a FELIX system consists of one or two custom FPGA-based PCIe cards (see Figure 2) hosted within a rack-mounted server and equipped with a complete suite of firmware and software. The version of the card in use now is based on PCIe Gen 3 x16, with a bandwidth of 16 GB/s. Developments are ongoing to profit from the newer PCIe standards (PCIe Gen 4 and Gen 5) in view of the Phase II upgrades and to allow for higher multiplexing of detector links, making the system even more cost-effective. The CERN EP-ADT-DQ, EP-DT-DI and EP-ESE-BE sections are playing an important role in the FELIX project, in particular in the areas of software development, hardware procurement, PCIe card production, as well as system installation and integration.

Figure 2: The FLX-712 PCIe card used at present.

From its inception, the ATLAS FELIX team aimed to serve the many sub-detectors that make up the experiment. Therefore, a generic design, encapsulating specific needs in well-defined areas of the firmware, was chosen. FELIX supports the GBT and lpGBT protocols and a simple serial 8b/10b-encoded protocol (the so-called Full Mode) at 9.6 Gb/s, and will, in the future, support Interlaken (25 Gb/s). Thanks to this general approach, it soon became apparent that FELIX could cross the borders of the ATLAS collaboration and become a building block for the data acquisition systems of other experiments.
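To put the numbers quoted so far side by side, here is a minimal back-of-the-envelope sketch in Python. It assumes that the Full Mode encoding carries 8 payload bits for every 10 transmitted bits and that all 24 links of one card are fully saturated, which is a deliberate worst case and not a statement about the occupancy seen in any experiment. The function and constant names are purely illustrative.

```python
# Back-of-the-envelope bandwidth budget for a single FELIX card.
# Figures from the text: 24 links per card, 9.6 Gb/s Full Mode line rate,
# 16 GB/s for PCIe Gen 3 x16.
# Assumptions (not from the text): 8 payload bits per 10 line bits,
# and every link running at full occupancy (worst case).

N_LINKS = 24
LINE_RATE_GBPS = 9.6            # Gb/s on the fibre, per link
ENCODING_EFFICIENCY = 8 / 10    # payload bits / transmitted bits
PCIE_GBYTES_PER_S = 16.0        # GB/s, figure quoted for PCIe Gen 3 x16


def payload_gbytes_per_s(line_rate_gbps: float, efficiency: float) -> float:
    """Payload carried by one link in GB/s (8 bits per byte)."""
    return line_rate_gbps * efficiency / 8


per_link = payload_gbytes_per_s(LINE_RATE_GBPS, ENCODING_EFFICIENCY)
aggregate = N_LINKS * per_link
saturated_links_that_fit = int(PCIE_GBYTES_PER_S // per_link)

print(f"payload per link      : {per_link:.2f} GB/s")
print(f"24 saturated links    : {aggregate:.2f} GB/s")
print(f"saturated links in 16 GB/s: {saturated_links_that_fit}")
```

Under these assumptions the aggregate payload of 24 saturated Full Mode links would exceed the quoted PCIe figure, which illustrates why link occupancy and the move to newer PCIe generations matter when planning how many links to multiplex onto one card.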

The re-use of hardware components among experiments isn’t something new: electronics or even complete detectors have been recycled from one experiment to another in the past. But there are two particularities that are worth highlighting about what happened with FELIX: it started being used outside ATLAS before its development was complete, and it was taken as a complete turn-key ecosystem, with hardware, firmware and software.

The first experiment to adopt the FELIX solution was ProtoDUNE-SP (NP04), one of the DUNE prototypes installed and operated in the Neutrino Platform at CERN. Interestingly, NP04 was already taking physics data in 2018, more than three years before ATLAS, which effectively started data taking with FELIX for LHC Run 3.

The availability of FELIX allowed for a very fast development of the DAQ system, demonstrating the triggerless concept proposed for DUNE: all data are transferred from the detector electronics to high-end servers, features are extracted from the data online (hit finding) and data selection algorithms use those features to reduce the volume of data sent to permanent storage. Conversely, the use of FELIX in a very challenging environment, with data arriving at 2 MHz and almost saturating the optical links, was a perfect stress test for FELIX itself during its development phase and allowed experts to identify bugs and to enhance the performance and stability of the system. DUNE developers directly contributed to those improvements, which were then fed back into the main development stream for ATLAS.
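The triggerless chain described above (extract features online, then select on those features) can be illustrated with a small Python sketch. This is not the DUNE hit-finding algorithm, whose details are not given here; it is a simple threshold-over-pedestal hit finder with a hypothetical selection rule, and all names (`Hit`, `find_hits`, `keep_window`) and parameter values are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hit:
    channel: int
    start_tick: int   # first sample above threshold
    end_tick: int     # first sample back below threshold (exclusive)
    peak_adc: int     # maximum pedestal-subtracted sample
    sum_adc: int      # sum of pedestal-subtracted samples


def _make_hit(channel: int, waveform: List[int], pedestal: int,
              start: int, end: int) -> Hit:
    samples = [adc - pedestal for adc in waveform[start:end]]
    return Hit(channel, start, end, max(samples), sum(samples))


def find_hits(channel: int, waveform: List[int],
              pedestal: int, threshold: int) -> List[Hit]:
    """Return one Hit per contiguous run of samples above pedestal + threshold."""
    hits: List[Hit] = []
    start = None
    for tick, adc in enumerate(waveform):
        above = (adc - pedestal) > threshold
        if above and start is None:
            start = tick                      # a hit begins
        elif not above and start is not None:
            hits.append(_make_hit(channel, waveform, pedestal, start, tick))
            start = None                      # the hit ends
    if start is not None:                     # hit still open at end of window
        hits.append(_make_hit(channel, waveform, pedestal, start, len(waveform)))
    return hits


def keep_window(hits: List[Hit], min_hits: int) -> bool:
    """Hypothetical selection: keep a time window if it contains enough hits."""
    return len(hits) >= min_hits


# Usage example on a made-up waveform (ADC counts, pedestal around 500).
waveform = [500, 501, 499, 530, 560, 540, 502, 500, 525, 500]
hits = find_hits(channel=7, waveform=waveform, pedestal=500, threshold=20)
selected = keep_window(hits, min_hits=2)
```

The point of the sketch is the data reduction: the full waveform is reduced to a handful of hit summaries, and only those summaries are needed to decide whether the window is worth storing.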

The integration of FELIX into NP04 demonstrated not only that the system was usable outside ATLAS, but also that its modular design was flexible enough to allow using only some of the features of the system while customizing others. As an example, only data transmission from the detectors to the DAQ was exercised in NP04, and the firmware and software were extended for DUNE to introduce data processing (hit finding) and buffering on the FELIX system, prior to transmission of a subset of the data over the network.

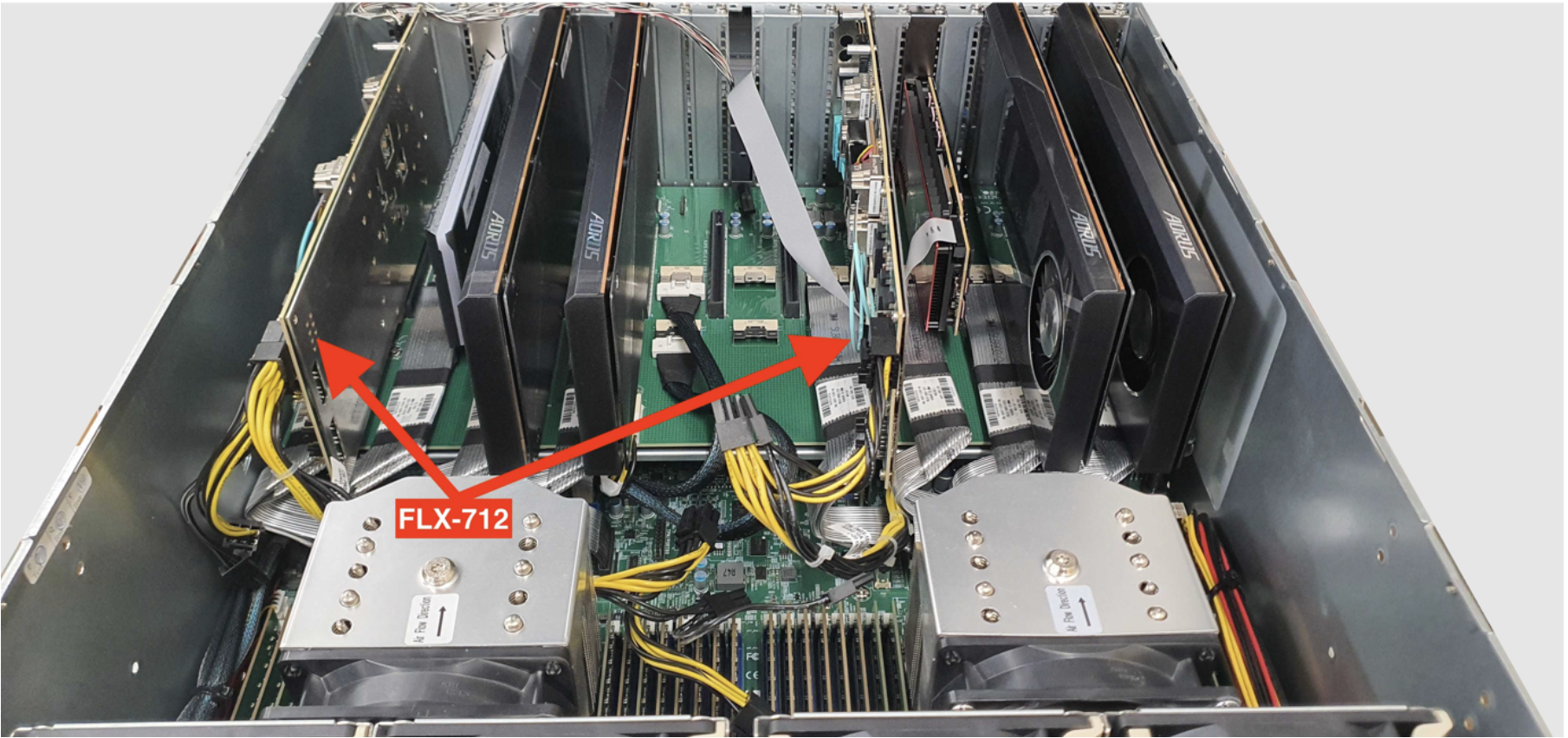

Figure 3: Inside view of an R&D server used for DUNE. Two FLX-712 cards are installed, alongside high-performance storage devices and network interface cards. This configuration sustained a data throughput of 20 GB/s.

The next experiment at CERN that decided to use FELIX to validate the upgrade of its readout system is NA62. Again, for the FELIX team this is an interesting use case, since it explores a very different operational phase space compared to NP04. In NP04, data arrive at a fixed rate and with a fixed size (full analog-to-digital conversion of signals at 2 MHz for all channels of the time projection chamber), testing the limits of the throughput that FELIX can support. In NA62, by contrast, the new front-end electronics, developed within EP-ESE, carry out time-to-digital conversion whenever a signal crosses a threshold and transfer the data to FELIX using the Full Mode protocol. Data packets arrive at rates that vary widely depending on the particle flux intensity and that reach several tens of MHz. The packets are small and of variable size. In addition, NA62 uses all the features of FELIX, with the links being used in both directions for both DAQ and DCS: timing, control and configuration information is transmitted to the front-end electronics, while physics and monitoring data flow towards FELIX.

As for NP04, the FELIX software stack was modified for NA62 to organize and buffer data on the FELIX server and to serve data only upon request from the downstream elements of the data selection and acquisition chain. For NA62 this was necessary because only some of the detector electronics are being upgraded, and thus the FELIX system must mimic the behavior of the existing readout system for compatibility with the rest of the DAQ.
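The buffer-then-serve-on-request pattern can be sketched in a few lines of Python. This is only an illustration of the general idea under stated assumptions, not the NA62 implementation: the class name `RequestBuffer`, the integer `event_id` key, the fixed capacity and the oldest-first eviction policy are all hypothetical.

```python
from collections import OrderedDict
from typing import List, Optional


class RequestBuffer:
    """Holds data fragments in memory until a downstream client asks for them.

    Fragments are grouped by a hypothetical event identifier. When the buffer
    is full, the oldest event is dropped to make room (assumed policy).
    """

    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._fragments: "OrderedDict[int, List[bytes]]" = OrderedDict()

    def push(self, event_id: int, payload: bytes) -> None:
        """Store one fragment arriving from the detector links."""
        if event_id not in self._fragments:
            if len(self._fragments) >= self._capacity:
                self._fragments.popitem(last=False)   # evict the oldest event
            self._fragments[event_id] = []
        self._fragments[event_id].append(payload)

    def request(self, event_id: int) -> Optional[bytes]:
        """Serve and release the data of one event, or None if not buffered."""
        parts = self._fragments.pop(event_id, None)
        if parts is None:
            return None
        return b"".join(parts)


# Usage example: nothing leaves the buffer until it is requested.
buf = RequestBuffer(capacity=2)
buf.push(1, b"ab")
buf.push(1, b"cd")
buf.push(2, b"ef")
buf.push(3, b"gh")          # buffer full: event 1 is evicted
```

The design choice worth noting is that the data producer (the detector links) and the consumer (the downstream DAQ) are decoupled: the server absorbs the incoming flow at its own pace and only the requested portion travels over the network.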

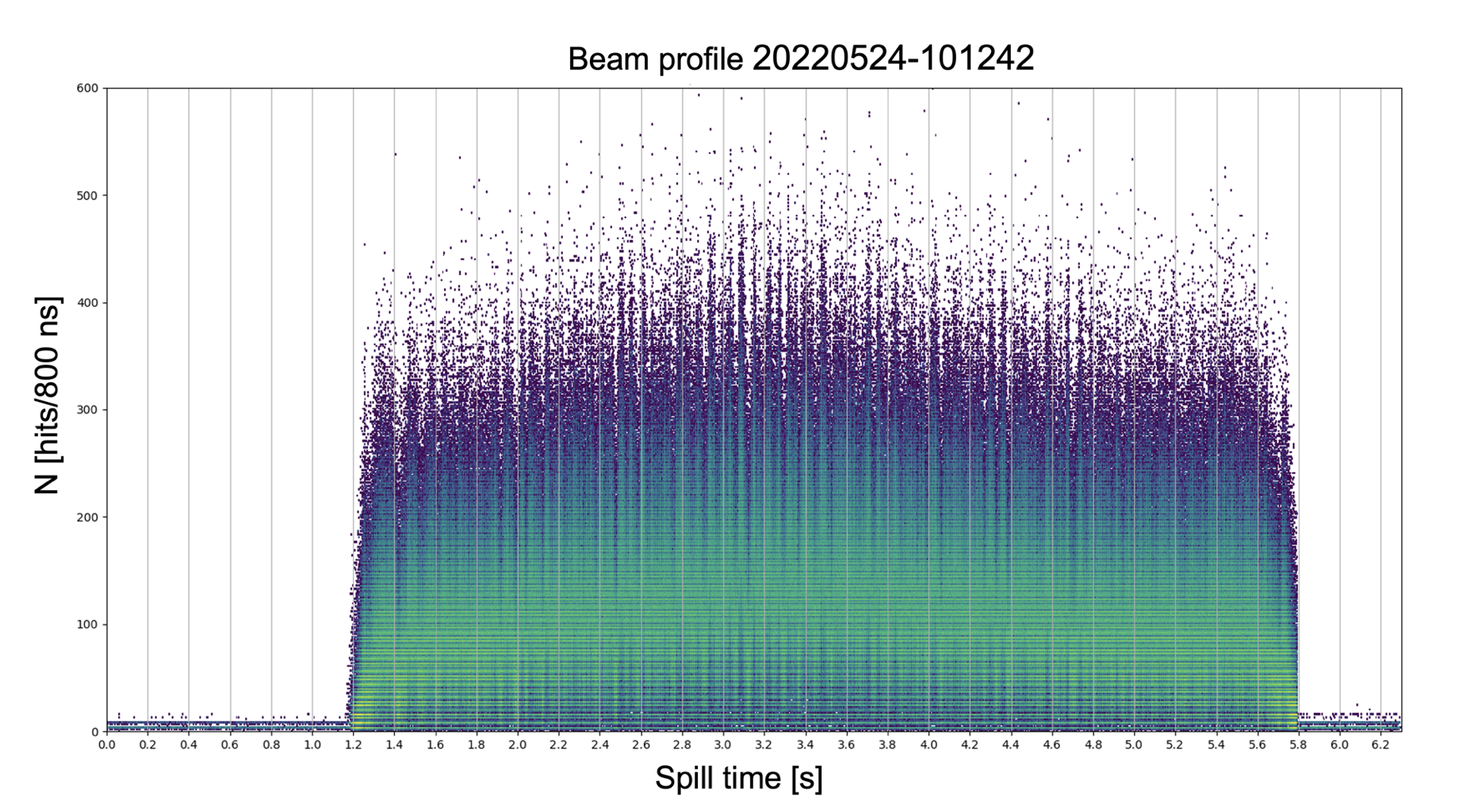

Figure 4: Beam activity as measured by the Veto Counter of NA62. All data of a spill are temporarily buffered in the FELIX server, which makes it possible to observe the particle flux intensity without any trigger bias. This is a useful monitoring tool for the beam experts, allowing them to maximize the beam intensity while keeping good uniformity along the whole spill.

The CERN EP-DT-DI section played a key role during the integration and optimization of the FELIX system into both NP04 and NA62.

The adoption of FELIX by those experiments helped to identify the main requirements for making this technology accessible and appealing, possibly also beyond CERN:

- open access to software, firmware and documentation is crucial,

- a facilitated path for purchasing the custom hardware is indispensable, especially for small quantities.

While FELIX is already Open Source, a generic solution for hardware procurement beyond the large production batches planned and managed by ATLAS is still being studied. Moving to an Open Hardware approach may be a step in the right direction for the future, especially if commercial partners ready to produce FELIX cards are identified.

The choice of the ATLAS FELIX team to share their work with other experiments is an investment that so far has paid off. For this to be successful, it has been important to clarify the expectations of available support and development effort, as well as timelines for system availability and needs, with all interested peers. For the experiments at CERN, a small DAQ team in EP-DT, which by now has acquired an in-depth knowledge of the FELIX system and works in close contact with the ATLAS team, is acting as an intermediary to facilitate initial discussions with experiments and understand their needs, to help with system setup, commissioning, and tuning, and to minimize the strain on the ATLAS experts on support matters.

So, now you know what FELIX is! You may already have decided that you would like to use it within your experiment, or you may want to join the effort of facilitating the re-use of knowledge and tools within Particle Physics in these times in which re-focusing resources only on necessary developments is paramount. If that’s the case... contact us! (Giovanna.Lehmann@cern.ch)