Interview with Peter Shor

Peter Shor has carried out pioneering work in combinatorial analysis and the theory of quantum computing. He received worldwide recognition in 1994 when he presented a computational method for "factoring large numbers" which, theoretically, could be used to break many of the existing coding systems. The discovery of Shor’s algorithm, along with his work on quantum error correction, helped boost the field of quantum computing to a new level. For his work he has received numerous awards, including the Gödel Prize in 1999 from the European Association for Theoretical Computer Science and a MacArthur Fellowship. In 2017 he received the Dirac Medal of the ICTP and in 2019 the BBVA Foundation Frontiers of Knowledge Award in Basic Sciences. In this interview we discuss his first steps and later developments in quantum information theory, the future of quantum computing, and the skills and qualities needed to tackle the challenges that the new quantum era brings to our societies.

Do you recall what motivated you to work on quantum information theory?

The first time that I distinctly remember hearing about quantum computing and quantum information theory was during a presentation by Charlie Bennett at Bell Labs. The topic was the quantum key distribution algorithm that he had developed together with Francois Bessette, Gilles Brassard and Louis Salvail. During his talk, he showed us a device that he had built together with John Smolin. It was a working prototype of a quantum key distribution system and the first demonstrator of quantum cryptography!

Quantum cryptography was considered for many years to be science fiction, beyond the reach of our technological capabilities. Despite this rather gloomy perception, in October 1989 the first experimental quantum key distribution system was successfully implemented by Charles Bennett, Francois Bessette and their colleagues. A few years later, in 1992, they published a paper [1] describing the design of the apparatus that they built and its software.

This ushered in the experimental era of quantum cryptography and marked a key moment in the development of quantum computing. However, I was still not thinking about working in this field. The turning point for me was when Umesh Vazirani visited Bell Labs to give a talk on quantum computing based on his work [2] with Ethan Bernstein. They had proven a number of interesting things about quantum algorithms, highlighting the speed of quantum computers and the fact that they are more powerful than classical probabilistic computers.

What were your first steps in quantum information theory?

I have to admit that I didn’t get very far until I read Dan Simon's paper [3] on the problem that was later named after him (i.e. Simon’s problem). Imagine that you feed a string of bits, like 001100, into a black box and get as output another string, for example 110011. Simon’s problem asks: does the black box, which is your quantum computer, give a unique output for every possible input, or do some inputs give a common output? Invoking the use of a quantum computer as a black box, Simon posed a computational problem that is answered exponentially faster on a quantum computer than on a classical one.

What Dan Simon did was to use the Fourier transform over the binary field to find the period of a function over a binary vector space. It was the first quantum algorithm showing an exponential speed-up compared to the best classical algorithm that exists to solve the same problem. We expected that quantum computers would be faster but it was the first time that someone proved that they would be exponentially faster.
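The structure of Simon's problem can be sketched classically for very small inputs. The following is a minimal illustration, not Simon's own construction: the names `make_oracle`, `simon_outcome_distribution` and `recover_hidden_string` are hypothetical, the black box is assumed to satisfy f(x) = f(x XOR s) for a hidden string s, and the outcome distribution of the quantum routine is computed by brute force (which takes exponential time classically, exactly the cost a quantum computer would avoid).

```python
import random

def dot_mod2(a, b):
    """Inner product of two bit strings (stored as ints) over the binary field."""
    return bin(a & b).count("1") % 2

def make_oracle(s, n):
    """Hypothetical black box: a 2-to-1 function on n-bit ints with f(x) == f(x ^ s)."""
    labels = list(range(2 ** n))
    random.shuffle(labels)
    table = {}
    for x in range(2 ** n):
        if x not in table:
            label = labels.pop()
            table[x] = label
            table[x ^ s] = label
    return lambda x: table[x]

def simon_outcome_distribution(f, n):
    """Probability of measuring each y after Hadamard, oracle query, Hadamard.

    Computed by summing amplitudes over all inputs, so this classical
    simulation costs time exponential in n.
    """
    size = 2 ** n
    groups = {}
    for x in range(size):
        groups.setdefault(f(x), []).append(x)
    probs = []
    for y in range(size):
        p = 0.0
        for xs in groups.values():
            amplitude = sum((-1) ** dot_mod2(x, y) for x in xs) / size
            p += amplitude ** 2
        probs.append(p)
    return probs

def recover_hidden_string(samples, n):
    """Nonzero candidates orthogonal (mod 2) to every sampled outcome."""
    return [c for c in range(1, 2 ** n)
            if all(dot_mod2(y, c) == 0 for y in samples)]

# Usage: only outcomes y with y.s = 0 (mod 2) have nonzero probability.
f = make_oracle(0b101, 3)
probs = simon_outcome_distribution(f, 3)
support = [y for y, p in enumerate(probs) if p > 1e-12]
hidden = recover_hidden_string(support, 3)
```

Every outcome in `support` is orthogonal to the hidden string, which is why a handful of measurements followed by linear algebra over the binary field is enough to pin it down.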

Looking back at Simon’s work, the transformation that he used was a Fourier transform, and that made me think about how Fourier transforms could be applied in a useful way to solve the discrete logarithm problem. Fourier transforms and the discrete logarithm problem are both concerned with periodicity, which was the key to developing my algorithm. I succeeded in solving the discrete logarithm problem over a number “n” for the special case of “n” being a smooth number. A “smooth” number is one with no large prime factors. A classical solution already existed for this case. However, the quantum algorithm used completely different techniques from the classical one and thus allowed a generalisation to non-smooth numbers.

The next step was to think about how this algorithm would work for arbitrary numbers and to generalize it to prime factorization. The resulting quantum algorithm calculates the prime factors of a large number vastly more efficiently than a classical computer. A digital computer is often believed to be able to simulate any physical computing device with an increase in computation time by at most a polynomial factor. Things change when quantum mechanics comes into play. The new quantum algorithm could be used for calculations with quantum states, exploiting the fact that we can have superpositions of quantum states.
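The link between periodicity and factoring can be sketched in a few lines. This is a minimal classical illustration under stated assumptions: the function names are hypothetical, and the brute-force loop in `multiplicative_order` merely stands in for the quantum period-finding step, which is the only part a quantum computer would speed up.

```python
from math import gcd

def multiplicative_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute force stands in for the quantum step)."""
    if gcd(a, n) != 1:
        raise ValueError("a and n must be coprime")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(n, a):
    """Try to split n using the period of x -> a**x % n, for 1 < a < n.

    Returns a pair of nontrivial factors, or None if this choice of a fails
    (odd period, or a**(r/2) congruent to -1 modulo n).
    """
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None
    p = gcd(half - 1, n)
    return p, n // p

# Usage: 7 has period 4 modulo 15, and 7**2 % 15 == 4, so gcd(4 - 1, 15) == 3.
print(factor_from_order(15, 7))   # (3, 5)
```

When a given `a` fails, one simply retries with another value; for an odd composite that is not a prime power, a randomly chosen `a` succeeds with probability at least one half.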

Did you approach it as a purely mathematical problem at that time, or were you already thinking about its practical applications?

I followed a purely mathematical approach, thinking of quantum computing in terms of unitary transforms rather than qubits. One of the problems we were facing in 1994 was that we didn’t know how to deal with fault tolerance in quantum computers. This means that to do a factorization without any errors, you would need gates accurate to 1 part in 10^10, an almost impossible limit! This challenge motivated me to think about a polynomial-time quantum algorithm for the factoring problem.

One way of thinking about quantum computing, famously introduced by David Deutsch, claims that computations happen in the superposition of many worlds and that it is this fact that gives you the computational advantage. An extension of this argument is that quantum computing proves the existence of many worlds as the only way to explain where these computations take place.

The problem with this approach is that if you assume that the computation is taking place in many parallel worlds, then your quantum computer model pretty much resembles a massively parallel computer. However, this is not how quantum computers will work. In a quantum computer you have to arrange your computation so that all the paths leading to the right answer interfere constructively while all the paths leading to wrong answers interfere destructively. In this way you enhance the probability of getting the right answer. This is essentially Feynman’s path integral applied to computational paths, though this was not the approach that I followed in my work either.
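The smallest example of such interference is a single qubit passed through two Hadamard gates. The sketch below is an illustration only (the helper names are hypothetical); it lists the amplitude of every computational path and shows that the paths ending in 1 cancel while the paths ending in 0 reinforce each other.

```python
from itertools import product
from math import sqrt

# Hadamard gate as a table of amplitudes: H[out][in].
H = [[1 / sqrt(2), 1 / sqrt(2)],
     [1 / sqrt(2), -1 / sqrt(2)]]

def path_amplitudes(start, end, steps=2):
    """Amplitude of each path start -> ... -> end through `steps` Hadamard gates."""
    paths = {}
    for middle in product((0, 1), repeat=steps - 1):
        states = (start, *middle, end)
        amplitude = 1.0
        for before, after in zip(states, states[1:]):
            amplitude *= H[after][before]
        paths[states] = amplitude
    return paths

def total_amplitude(start, end, steps=2):
    """Sum over all paths; its square is the probability of observing `end`."""
    return sum(path_amplitudes(start, end, steps).values())

# Usage: starting from 0, the two paths to 1 carry amplitudes of about +0.5
# and -0.5 and cancel, while the two paths to 0 add up to about 1.
print(path_amplitudes(0, 1))
print(total_amplitude(0, 0), total_amplitude(0, 1))
```

A classical parallel computer would simply hold both intermediate branches; the cancellation of amplitudes with opposite signs has no counterpart there.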

Coming back to your question, I knew that Fourier transformations could be used to find periodicity. Then I figured out how to do a Fourier transformation in a quantum computer and used the Fourier transform to find the periodicity which solved the problem.
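How a Fourier transform exposes a period can be seen in a small classical sketch. It makes several simplifying assumptions that are not part of the interview: an ordinary discrete Fourier transform stands in for the quantum Fourier transform, the transform length `q` is chosen so that the period divides it exactly (so the peaks are sharp), and `a` is not congruent to 1 modulo `n`. The function names are hypothetical.

```python
import cmath
from functools import reduce
from math import gcd

def dft(values):
    """Plain O(q^2) discrete Fourier transform; adequate for tiny examples."""
    q = len(values)
    return [sum(v * cmath.exp(-2j * cmath.pi * k * x / q)
                for x, v in enumerate(values))
            for k in range(q)]

def period_from_spectrum(a, n, q):
    """Read the period of x -> a**x % n off the peaks of a length-q DFT.

    Assumes the period r divides q, so that the peaks sit exactly on
    multiples of q / r.
    """
    # Mark the inputs x that map to the same output value (here, the value 1).
    indicator = [1.0 if pow(a, x, n) == 1 else 0.0 for x in range(q)]
    magnitudes = [abs(c) for c in dft(indicator)]
    threshold = 0.5 * max(magnitudes)
    peaks = [k for k, m in enumerate(magnitudes) if k > 0 and m > threshold]
    return q // reduce(gcd, peaks)

# Usage: powers of 7 modulo 15 repeat with period 4, so with q = 64
# the spectrum peaks at multiples of 16.
print(period_from_spectrum(7, 15, 64))   # 4
```

In the quantum algorithm a single measurement yields one such peak position at random, and the period is recovered from a few of them; here all peaks are visible at once because the whole spectrum is computed classically.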

Following this approach you came up with a polynomial-time quantum algorithm for the factoring problem. Why was the factoring result so surprising?

I think different communities found the factoring result surprising for different reasons.

Computer scientists thought that it was surprising because it violated the extended Church-Turing thesis. One of the foundational principles of the field is the Church-Turing thesis, which says that any function that can be computed can be computed by a Turing machine. The extended Church-Turing thesis says that anything that can be computed efficiently (i.e., in polynomial time) can be computed efficiently on a Turing machine. If quantum computers can factor large numbers efficiently, then they can do something that is not believed to be possible efficiently on a Turing machine. Even today, some computer scientists find the extended Church-Turing thesis so compelling that they don’t believe that quantum computers will be able to factor.

Cryptographers found the factoring result surprising because it meant that if you had a quantum computer, you could break the RSA [6] and Diffie-Hellman encryption schemes, which are based on factoring and discrete log, and on which much of Internet security currently depends.
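Why factoring breaks RSA can be shown with a toy key. This is a deliberately insecure sketch with textbook-sized primes and hypothetical function names; real RSA uses primes hundreds of digits long, together with padding schemes that are omitted here.

```python
def make_toy_rsa(p, q, e):
    """Build a toy RSA key pair from two primes and a public exponent."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)          # modular inverse; requires Python 3.8+
    return (n, e), d

def encrypt(message, public_key):
    n, e = public_key
    return pow(message, e, n)

def decrypt(ciphertext, n, d):
    return pow(ciphertext, d, n)

def private_exponent_from_factors(public_key, p, q):
    """Anyone who learns the factors of n can rebuild the private exponent."""
    n, e = public_key
    if p * q != n:
        raise ValueError("p and q are not the factors of n")
    return pow(e, -1, (p - 1) * (q - 1))

# Usage: the attacker sees only (n, e) and the ciphertext, but once n = 3233
# is factored into 61 * 53 the message can be read.
public_key, private_d = make_toy_rsa(61, 53, 17)
ciphertext = encrypt(65, public_key)
stolen_d = private_exponent_from_factors(public_key, 61, 53)
recovered = decrypt(ciphertext, public_key[0], stolen_d)
```

The security of the scheme therefore rests entirely on the factors of `n` staying unknown, which is the assumption an efficient factoring algorithm removes.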

Physicists found quantum computing surprising because it was a new and completely unsuspected use for quantum mechanics.

PC. Did you foresee that your algorithm for integer factorization could have any practical applications?

At that time, I didn’t think that there would be any practical applications of it due to the open challenges. It had been shown that you would need incredible accuracy to deal with the computational errors, and the community believed that it would be impossible to build a quantum computer, for two main reasons.

The first reason is that redundancy, the main way in which classical computers correct errors, doesn’t apply to quantum computers. The second reason stems from Heisenberg’s uncertainty principle, according to which every measurement disturbs a quantum system; as a consequence, you cannot measure whether an error happened without disturbing the state of your computational system.

Following the introduction of my algorithm, I was confronted with these lines of objection, which in turn led to the invention of quantum error correcting codes.

PC. How familiar were you with quantum mechanics (QM) at that time, and how important was it to choose an interpretation of QM for your work?

The correct interpretation of QM was not of primary concern to me, and I think it would be fair to say the same of many of my peers at that time. I took quantum mechanics courses as an undergraduate student, which means that there was a gap of about two decades between that time and the start of my work on quantum algorithms. Fortunately, I remembered enough quantum mechanics (laughs) and learned what was essential to develop quantum algorithms that were later demonstrated to work.

From left: David Deutsch, Charles Bennett and Peter Shor during the 2017 Dirac Medal Ceremony.

Following the development of your algorithm for factoring large numbers exponentially faster, you soon started working on the idea of quantum error correction. What was the problem that you tried to address?

As mentioned, there was a broad view that quantum computers would not work because it would be impossible to handle their errors. Qubits are delicate quantum systems that are prone to error and decoherence, and the difficulty of correcting them comes from two distinct sources: first, the fact that you cannot duplicate quantum information, and second, that due to the uncertainty principle you cannot measure the exact state of the quantum system.

Not being able to measure the properties of the quantum system means that there are certain actions that you cannot take on a quantum computer as you could on a classical computer. For example, in a classical computer, you can record the state of the system over time, and if a mistake happens later you can always go back to the piece of the computation that you recorded and proceed from there. There are other ways in which you can introduce fault tolerance on classical computers that do not apply to quantum computers.

While going through the literature I came across a paper by Asher Peres on simple quantum error correcting codes that protect against bit flip errors. The code is based on repetition: you replace 0 with, say, 000 and 1 with 111. The problem, though, is that if you try to use this code to recover from phase errors, it makes phase errors three times more likely. At that time I realised that there was a transformation in quantum mechanics that takes bit errors to phase errors. So you could use this to produce a code that protects against phase errors but not bit errors.

There is a technique in classical coding called concatenation, where you can take two codes, one of size A and one of size B, and stick them together to get a code of size AB. I realized that something similar could be applied to these quantum error correcting codes. So by combining these two three-qubit codes using concatenation, you can get a nine-qubit code that protects against both bit and phase errors. That was the start of quantum error correction. This approach made it possible to formulate a quantum error correcting code by storing the information of one qubit in an entangled state of nine qubits.
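The nine-qubit construction can be checked with a small state-vector simulation. The sketch below rests on assumptions that are not in the interview: the function names are hypothetical, the 512 amplitudes are stored in a plain list, and the parity checks are read off as expectation values of the full state rather than measured with ancilla qubits as real hardware would do.

```python
from math import sqrt

DIM = 2 ** 9  # nine qubits; qubit q is bit q of the basis-state index

def encode(alpha, beta):
    """Encode alpha|0> + beta|1> as three blocks of (|000> +/- |111>)/sqrt(2)."""
    state = [0.0] * DIM
    for coeff, sign in ((alpha, 1), (beta, -1)):
        block = {0b000: 1 / sqrt(2), 0b111: sign / sqrt(2)}
        for i0, a0 in block.items():
            for i1, a1 in block.items():
                for i2, a2 in block.items():
                    state[i0 | (i1 << 3) | (i2 << 6)] += coeff * a0 * a1 * a2
    return state

def apply_x(state, q):
    """Bit flip on qubit q."""
    return [state[i ^ (1 << q)] for i in range(DIM)]

def apply_z(state, q):
    """Phase flip on qubit q."""
    return [-amp if (i >> q) & 1 else amp for i, amp in enumerate(state)]

def zz_parity(state, q1, q2):
    """Value (+1 or -1) of the check Z_q1 Z_q2, which compares two qubits."""
    return round(sum(abs(a) ** 2 * (-1) ** (((i >> q1) ^ (i >> q2)) & 1)
                     for i, a in enumerate(state)))

def x_block_parity(state, first_block):
    """Value (+1 or -1) of X on all six qubits of two neighbouring blocks."""
    mask = 0b111111 << (3 * first_block)
    return round(sum((state[i].conjugate() * state[i ^ mask]).real
                     for i in range(DIM)))

# A pair of checks points at the first, middle, or last of three positions.
SYNDROME_TO_POSITION = {(-1, 1): 0, (-1, -1): 1, (1, -1): 2}

def correct(state):
    """Undo any single bit flip, phase flip, or both on one qubit."""
    for block in range(3):  # inner repetition code: bit flips inside each block
        q = 3 * block
        syndrome = (zz_parity(state, q, q + 1), zz_parity(state, q + 1, q + 2))
        if syndrome in SYNDROME_TO_POSITION:
            state = apply_x(state, q + SYNDROME_TO_POSITION[syndrome])
    # Outer repetition code: compare the signs of neighbouring blocks.
    syndrome = (x_block_parity(state, 0), x_block_parity(state, 1))
    if syndrome in SYNDROME_TO_POSITION:
        state = apply_z(state, 3 * SYNDROME_TO_POSITION[syndrome])
    return state

def overlap(a, b):
    """Magnitude of the inner product; 1 means the same state up to a phase."""
    return abs(sum(x.conjugate() * y for x, y in zip(a, b)))

# Usage: damage qubit 4 with both a phase flip and a bit flip, then repair it.
original = encode(0.6, 0.8)
damaged = apply_x(apply_z(original, 4), 4)
repaired = correct(damaged)
```

The checks reveal where an error sits without revealing `alpha` or `beta`, which is how the code sidesteps the objection that measuring a quantum state destroys it.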

The ability to use quantum error correcting codes adds to the robustness of quantum information and offered a response to the criticism that quantum computers would never be able to perform large computations because you couldn’t correct errors. Quantum error correction allows us to maintain information stored in qubits (two-state quantum systems) despite the environmental noise and without interfering with the computation. The codes can be seen as subspaces of the qubits' state space with the property that an initial state in this subspace can be recovered if sufficiently few of the qubits have experienced errors.

Do you think that the quest for quantum computers can teach us something about fundamental physics?

In my view, there are two ways that you may learn something about fundamental physics from quantum computers.

On the one hand, there are a lot of physics simulations that classical computers cannot do, as simulating a physical system of n particles requires memory of order 2^n and is computationally very intensive and expensive. This touches on Feynman's famous question, raised around 1981 while he was teaching a course at Caltech on the “Physics of Computation”, about how one could simulate quantum mechanics. Richard Feynman’s observation that certain quantum mechanical effects cannot be simulated efficiently on a computer led to speculation that computation in general could be done more efficiently if it used these quantum effects. A quantum computer offers a big advantage, as it could simulate this system with only a modest number of qubits, maybe 10n or n log n. In that sense, quantum computers would allow us to run simulations that are currently out of reach for classical computers and thus gain deeper insights into the basic theories describing nature.
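The exponential memory cost is easy to make concrete. The sketch below assumes that each of the n particles is a two-level system and that every amplitude is stored as a 16-byte complex number; both assumptions, and the function names, are illustrative.

```python
def state_vector_bytes(n_particles, bytes_per_amplitude=16):
    """Memory needed to store all 2**n amplitudes of n two-level systems."""
    return bytes_per_amplitude * 2 ** n_particles

def human_readable(num_bytes):
    """Format a byte count using binary units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.1f} {unit}"
        value /= 1024

# Usage: each extra particle doubles the memory requirement.
for n in (10, 30, 50):
    print(n, human_readable(state_vector_bytes(n)))
# 10 16.0 KiB
# 30 16.0 GiB
# 50 16.0 PiB
```

Under these assumptions a laptop can hold about thirty particles, while fifty already demand petabytes, whereas the quantum simulation sketched in the text would need on the order of n qubits.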

On the other hand, if your question is whether we can learn something new about fundamental physics through the process of building a quantum computer, then I am afraid that the answer is no. I believe that the only thing that quantum computers can tell us is that quantum mechanics works, something that we already know.

Do you follow the ongoing developments in the race to achieve quantum supremacy? Are you optimistic?

I think that quantum supremacy has been demonstrated, though the hardware is still too noisy to use for any practical applications. I recall that twenty years ago there were two schools of thought: the pessimists claiming that we would never get quantum computers and the optimists arguing that we would get one within the next decade. It turns out that the truth is somewhere in between, though the pessimists were probably a bit too pessimistic (laughs).

I hold a certain optimism, though it is still not entirely clear whether we will run into another fundamental obstacle in building quantum computers because we can’t figure out all the details of their architecture. In addition, we know that we will need much better quantum gates to do anything useful with quantum computers. If we keep progressing at the same speed, and I don’t see why this shouldn’t be the case, we should have one within the next two to three decades.

How did you feel when in the early 2000s you witnessed the application of your algorithm on a 7-qubit NMR device? Could you tell us a bit more about this experiment/computation?

I was very happy that it worked, but I had some concerns about how broad the application is. NMR quantum computing is inherently unscalable. In fact, a working NMR computer larger than seven qubits is probably never going to be built. The chemical that was used in the experiment was hand-synthesized by an IBM chemist on his own time (although he used the IBM labs to do it), and it took him several months. When Ike Chuang [4], who had run the experiment, came to MIT, MIT arranged to buy the sole small vial of this chemical from IBM for an impressively large sum of money. This enabled him to continue performing NMR computations.

Today, we have superconducting technology which supports 60 qubits, but for which there is no inherent scaling limit, so companies are already planning 100-qubit quantum computers, and thinking about building quantum computers with thousands of qubits further in the future.

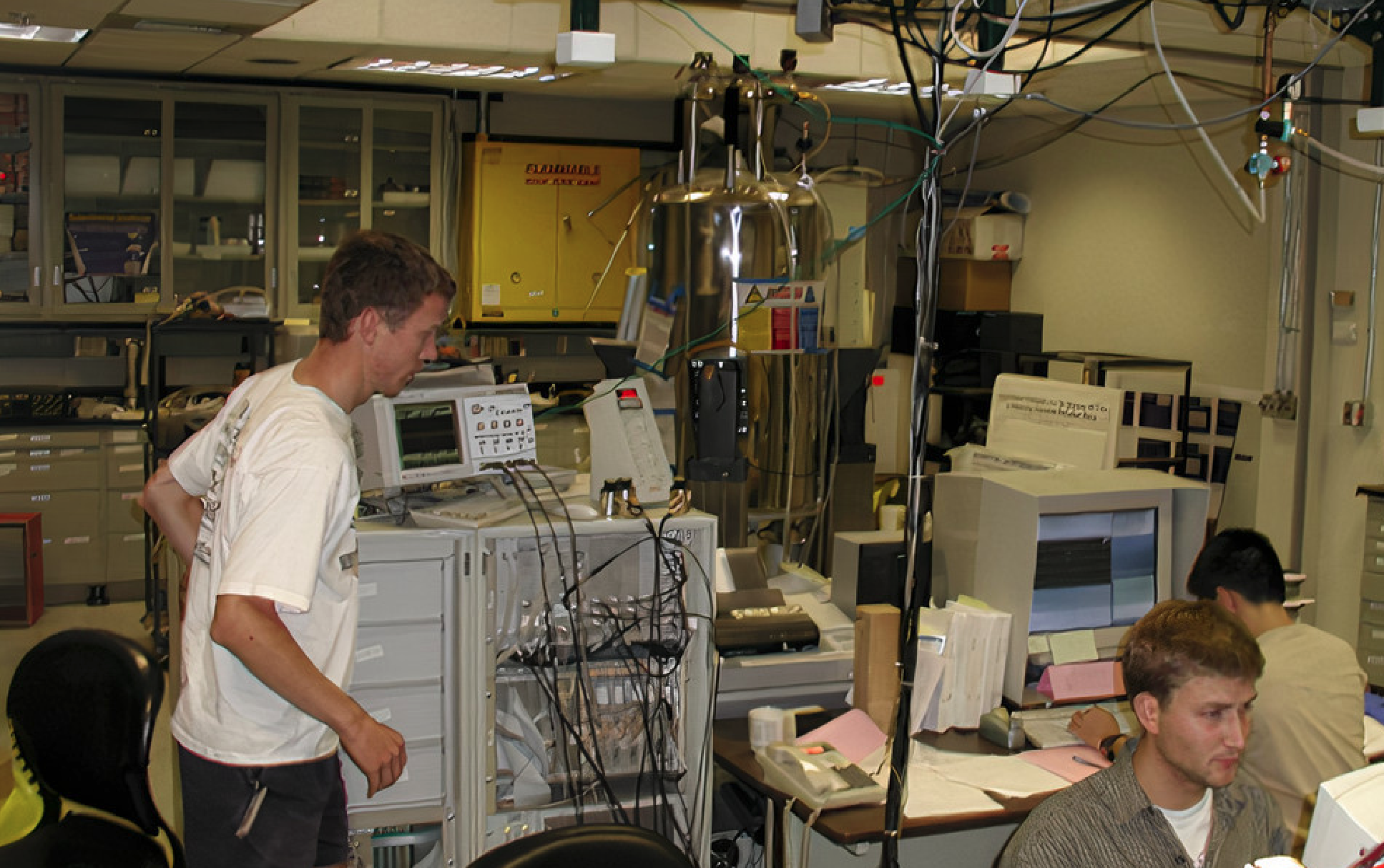

Experimental system used to realize the demonstration of Shor's quantum factoring algorithm with a seven-qubit NMR-controlled molecule, at the IBM Almaden Research Labs, circa 1999. Photo taken during the course of the experiment, showing Lieven Vandersypen (left) and two summer intern students.

How would you describe the relation between theory and technology in driving developments in the field of quantum information? Has the balance between the two shifted over the years?

Perhaps one should distinguish two types of theorists who work in our field. One group consists of those who are concerned with advanced theoretical problems lying far ahead of our current technology. There is also a second class of theorists working on the best use of existing technologies. This is an equally challenging process, during which you often come up with very interesting new theoretical questions that you haven’t previously thought of and which guide the progress in the field.

One of these questions is how we can improve quantum error correction algorithms based on the existing technology. One potential answer is to think of codes that do not work at the scale of qubits but at the scale of devices. A quantum device is a specific state of a harmonic oscillator, and this state can be protected against errors that you can induce and then test the outcome, or at least come closer to being able to test it. Theoretical developments in quantum error correction also influence those designing the devices and how they think about the next implementation of such a system. The history of the field shows that theory development and instrumentation (in the form of quantum computer device building) go hand in hand. In that sense, it is quite possible that some future theoretical discovery will give us a new, better route to quantum fault tolerance that will be taken into account in designing future generations of devices.

What are you currently working on?

Currently, I am working on a number of mathematical problems in quantum information theory. One of the crucial questions in our field is how much information we can send over a quantum channel, and today we have some good ideas about how to answer that. Suppose now that you also introduce a feedback channel from the receiver to the sender that carries classical information. In theory, such feedback doesn’t help for a classical channel, but it does help for a quantum channel. It introduces a quantum capacity with feedback, and we still don’t have a formula to describe it. We only have some bounds, and my colleagues and I are trying to improve them.

Quantum computers could also compromise popular public-key cryptography schemes that are based on the prime factorization of large numbers, a problem that quantum algorithms can solve efficiently. RSA, the public-key cryptosystem widely used for data transmission, which is what keeps all the information on the internet safe, could be easily broken. I should clarify that we are still a long way from being able to break codes. Even when the first quantum computer arrives, it will be able to break something like 10 RSA keys per day, so it shouldn’t be a concern for your private data. Quantum mechanics changes the nature of cryptography fundamentally, and this means new ways to break protocols but also new protocol designs that differ from what we could do with classical coding. One of them is quantum money. This has nothing to do with real money, as you may imagine. It refers to quantum states that can be both verifiable and not copyable. Of course, quantum states are unique by their nature, but most of them cannot be verified. Moreover, if you had a procedure allowing you to verify something, it would most probably mean that you are also able to copy it. This is the challenge I am currently working on: ways of constructing quantum states that can be verified but cannot be copied. I think that I have a good idea for a technique based on learning with errors which could be used for quantum cryptography and is reasonably secure.

I understand that cryptography is one of the key applications of quantum computers but I am wondering if you envision other areas beyond that?

Quantum computing, as I have mentioned, allows for many useful calculations, tackling a number of problems that we face today both in fundamental physics and in fields of applied research. The main problem is that we don’t have good quantum algorithms. However, in my view, once we have quantum computers we will be able to develop more and better algorithms experimentally, opening a new era of quantum algorithm development.

How can we prepare for the post-quantum world?

In my view, an urgent task is to replace all our conventional cryptography with cryptography that is resistant to quantum computers. Recently, the US government called for proposals for quantum-resistant cryptosystems meeting certain standards. Once a decision has been made, we will need a massive amount of programming to convert all existing cryptosystems to post-quantum ones. This will be a lot of work in terms of time and resources but certainly doable. The main question is whether we will succeed in completing it before someone develops a quantum computer able to break the current cryptographic keys. Let me note, though, that your personal data will not be the primary target; large public and private institutions are more concerned and are actively developing the tools to tackle this risk.

Finally, I would like to ask you which key skills are needed by those considering entering this field?

There are so many skills you could use in this field that it is difficult to answer your question. Physicists have some pretty good intuition about quantum mechanics, and they learn about quantum computers and quantum information, so they should be able to contribute. Being smart and passionate are two key qualities for succeeding in this field.

Notes:

[1] Bennett, Bessette, Brassard, Salvail, and Smolin, "Experimental Quantum Cryptography", J. of Cryptology 5, 3-28 (1992)

[2] Bernstein, E. and Vazirani, U., “Quantum complexity theory”, Proceedings of the 25th Annual ACM Symposium on Theory of Computing, 1993, pp. 11 – 20.

[3] D. Simon (1994), On the power of quantum computation, in Proceedings of the 35th Annual Symposium on Foundations of Computer Science, IEEE Computer Society Press, Los Alamitos, CA, pp. 116–123.

[4] Chuang et al., (2001) Experimental realization of Shor's quantum factoring algorithm using nuclear magnetic resonance: https://www.nature.com/articles/414883a

[5] Shor, P. (1995) Polynomial-Time Algorithms for Prime Factorization and Discrete Logarithms on a Quantum Computer, SIAM J. Comput. 26 (1997) 1484. DOI: 10.1137/S0097539795293172

[6] "RSA" was developed in 1977 by the mathematicians Ronald Rivest, Adi Shamir and Leonard Adleman (hence the acronym). It makes use of the fact that multiplying large numbers is a so-called one-way function. This means that while it is very easy to make a large number from smaller ones, it takes much longer to find all the factors of a large number. This time factor is the basis for the security offered by many encryption methods. Using Shor's algorithm, factoring large numbers on a quantum computer would be just as fast as multiplication. "RSA" and other procedures would no longer be safe. Experts have been making reassuring noises, since a lot of work remains to be done before such computers can even be constructed, but cryptographers are already working on the next generation of encryption techniques. [Source: American Mathematical Society - AMS.org]