Geant4: a modern and versatile toolkit for detector simulations

Like a good, lifelong friend who accompanies you through all phases of life, the Geant4 detector simulation toolkit is widely used in particle physics experiments, from their conception and design, through construction and data taking, to the physics analyses that continue for several years after the experiment's last run.

This is currently the case for the LHC experiments and for the detailed design studies of upcoming or proposed experiments - from neutrino detectors, through fixed-target set-ups, to future lepton and hadron colliders. Simulations encompass all the knowledge we have accumulated so far about the physics properties of particles: for example, what to expect from the collisions of two protons at the LHC, and from the passage through matter of the hundreds of secondary particles produced at colliders as they traverse complex detectors such as ALICE, ATLAS, CMS and LHCb.

With simulations we can compare different detector prototypes, like a crystal electromagnetic calorimeter versus a sampling one, or various implementations of the latter (e.g. types of absorber and active elements, calorimeter depth, sampling fraction, etc.) before their actual realization, thus saving money and time.

Once a detector is built and physics data are taken, simulations play a major role in understanding the data, estimating the backgrounds, suggesting selection and analysis strategies, evaluating corrections, acceptances, efficiencies and assessing the systematic uncertainties of measurements. It should therefore not come as a surprise that a significant part of the (systematic) error of the experiments' results depends on the simulation. Both the accuracy - i.e. how well the simulated events resemble the real ones - and the speed of the simulation - related to the number of simulated events that can be generated - are thus crucial.

For collider experiments in high-energy physics (HEP), simulations are the result of two distinct stages. The first is the simulation of the collisions between two beam particles (e.g. between two protons at the LHC), performed by a Monte Carlo event generator. The second stage starts from the particles produced in the first and consists of the transport of these particles through the detector (from the beam pipe to the muon chambers, typically the outermost part of the detector) by a Monte Carlo transport engine such as Geant4. In other words, the MC event generator plays the role of a virtual collider, whereas the MC detector simulation plays the role of a virtual detector. Note that both stages are based on "Monte Carlo" simulations, i.e. on drawing random numbers to reproduce the distributions expected from theory and phenomenology.
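As a minimal illustration of what "drawing random numbers to reproduce the expected distributions" can mean, the sketch below samples the distance a particle travels before its next interaction from an exponential law, using inverse-transform sampling. This is a toy example, not Geant4 code: the function name `sample_free_path` and the mean free path value are assumptions made for illustration only.

```python
import math
import random


def sample_free_path(mean_free_path, rng):
    """Draw one distance to the next interaction from an exponential law."""
    u = rng.random()  # uniform random number in [0, 1)
    # Inverse-transform sampling: 1 - u lies in (0, 1], so the log is finite.
    return -mean_free_path * math.log(1.0 - u)


rng = random.Random(12345)   # fixed seed, so the sequence is repeatable
mean_free_path = 10.0        # hypothetical value, arbitrary length units

samples = [sample_free_path(mean_free_path, rng) for _ in range(100_000)]
sample_mean = sum(samples) / len(samples)
print(f"mean of sampled distances: {sample_mean:.3f}")
```

With enough samples, the average of the sampled distances approaches the chosen mean free path, which is the sense in which the random draws reproduce the expected distribution.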

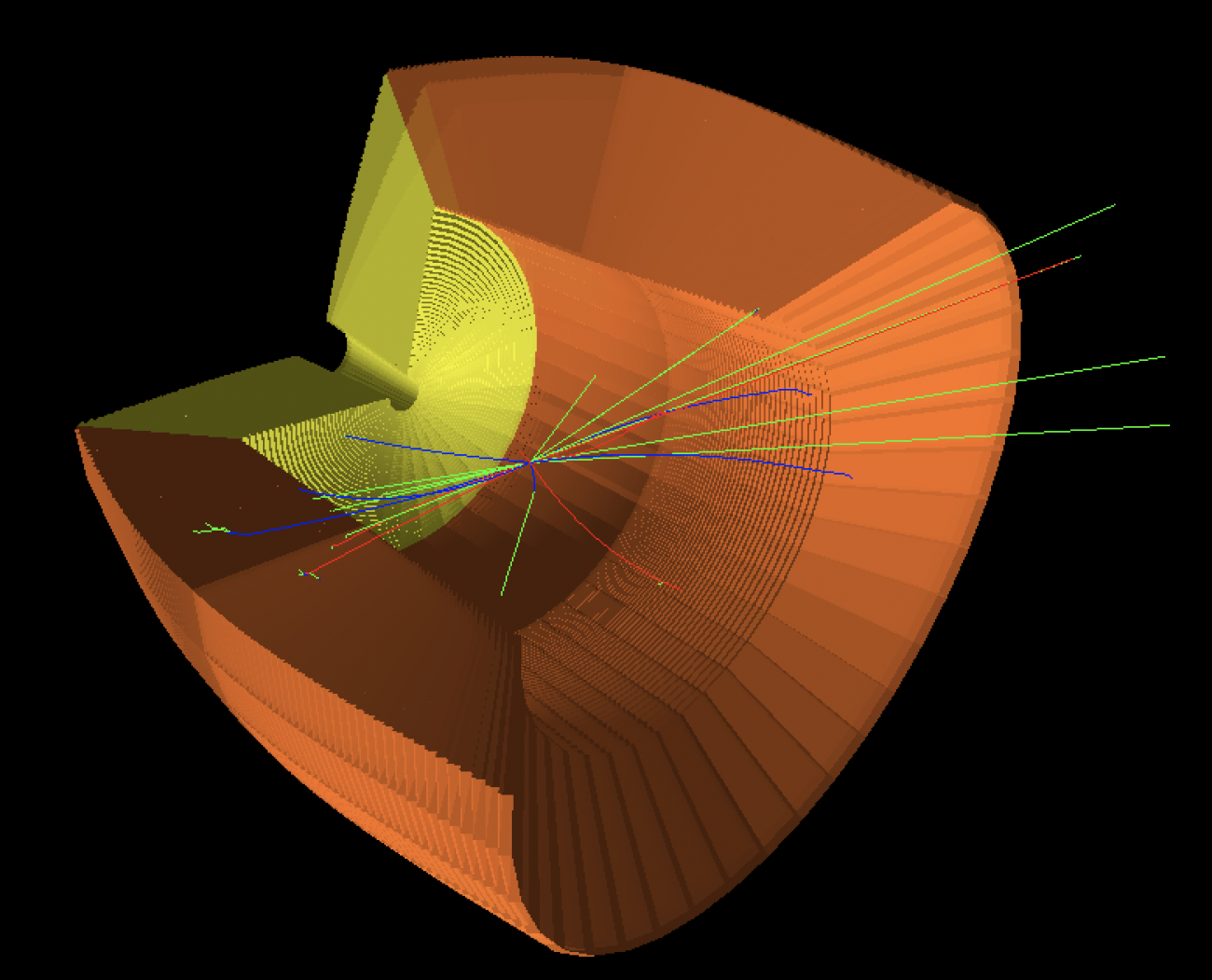

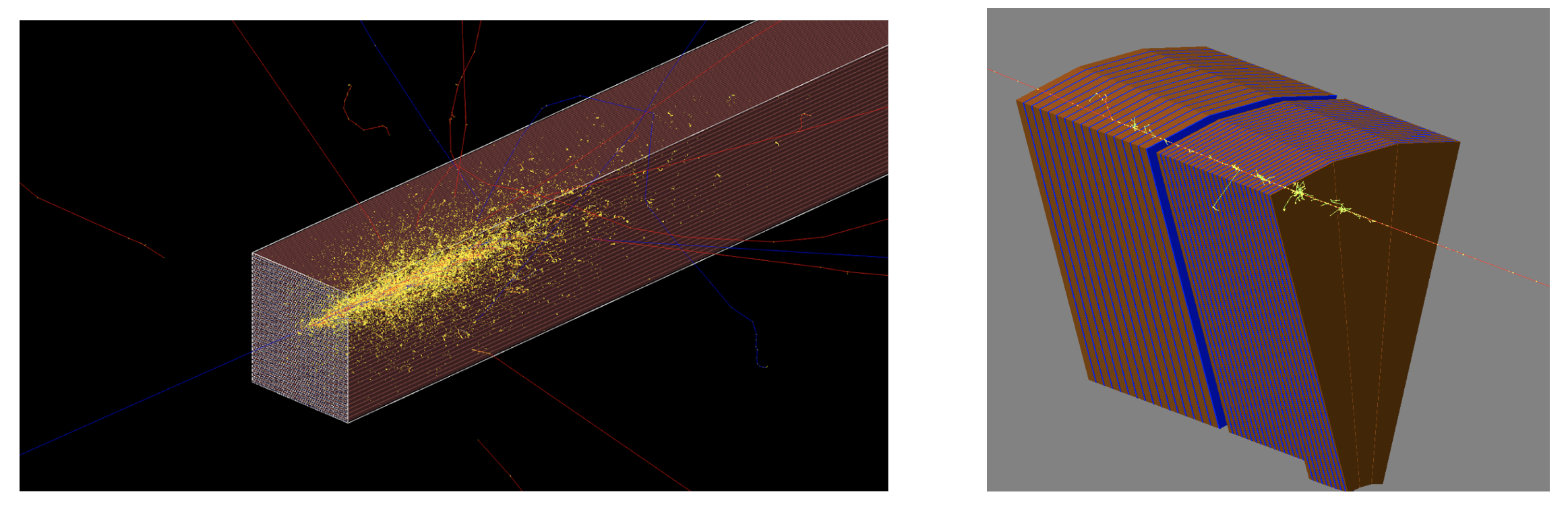

While MC event generators can be used regardless of the detector type, each experiment relies on a detailed, custom-made simulation of its own detector. MC transport codes like Geant4 allow any kind of detector to be described from a set of general geometrical components, a bit like using the same LEGO bricks to build different structures. Moreover, a large variety of materials can be assembled from elements and then associated with particular detector components. Finally, the modelling of the physics interactions of particles in matter can be used for any type of detector simulation. Geant4 is a toolkit consisting of nearly two million lines of code written in object-oriented C++ by an international collaboration of physicists, computer scientists, mathematicians and engineers over the last three decades. The figures below are graphical representations of realistic detector simulations performed with the Geant4 toolkit: the left one represents a positron-induced shower in a longitudinally unsegmented fiber calorimeter; the right one illustrates the passage of a muon through a wedge-shaped sampling calorimeter.

Figure 1: Graphical representations of realistic detector simulations performed with the Geant4 toolkit. Left: A 20-GeV-positron-induced shower in a longitudinally unsegmented fiber calorimeter. Individual energy depositions in fibers, the so-called hits, are marked as yellow points, while blue and red tracks correspond to positively and negatively charged particles. Right: A 10 GeV muon passing through a wedge-shaped sampling calorimeter made of alternating copper and liquid argon sections. The low-energy-radiated photons and electrons from the muon track are clearly visible.

Although high-energy physics applications have been a primary goal from the outset, Geant4 is a multi-disciplinary tool, widely used in space science, medical physics, nuclear physics and engineering. A huge effort is devoted to testing and validating the toolkit. This is essential to guarantee the quality of simulations, in particular in critical areas such as physics analyses and the design of new detectors, spacecraft, medical devices and treatments. Most of these testing and validation activities are carried out when new releases of the Geant4 software are prepared, once per year; a restricted subset of these verifications is executed when new patches of supported versions are released, as well as for the monthly development snapshots of the toolkit. Moreover, hundreds of tests are run each night to decide whether to include proposed changes in the official Geant4 code; a subset of these is also run immediately whenever a new change is proposed, to provide prompt feedback to the authors in the case of compilation warnings, run-time crashes or anomalous behaviours.

Different types of tests are deployed: some simply check that simulations run smoothly to completion, while others monitor the execution speed or the memory footprint of simulations. Reproducibility tests verify whether it is possible to reproduce one event, starting from the initial random-generator status of that event, without also running the previous events; this is needed to debug rare anomalous situations. Regression tests compare the results of the same simulations between two versions of the Geant4 software, with the goal of understanding the observed differences in terms of the code changes made between these two versions. The ultimate and most important category of tests is physics validation, in which the simulation accuracy is assessed by comparing against experimental data. There are two kinds of physics validation tests: thin-target and thick-target tests.

The former use thin layers (with typical thicknesses of a few millimetres) of target material, which are struck by projectile particles of well-defined energies; the secondary particles produced by single interactions are then measured. These data are used by the developers of physics models first to tune the free parameters of their models, and then to guide the development of these models so as to reduce the remaining disagreement between simulation and experimental data.

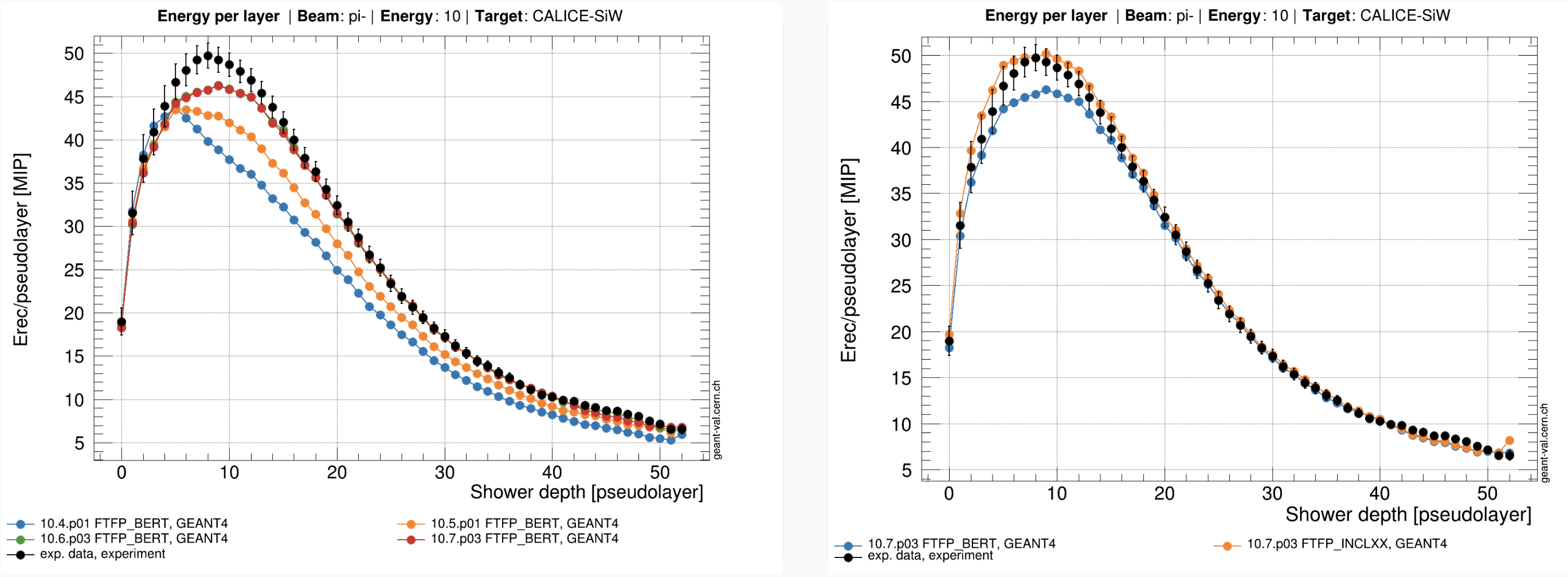

The thick-target tests mostly consist of calorimeter test-beam set-ups that aim to measure the properties of electromagnetic and hadronic showers. The measured observables are the result of a large number of physics interactions, involving different types of particles at various energies - typically from hundreds of GeV down to the keV scale. Comparing these data with simulations allows us to assess the overall behaviour of the simulations, and to identify a complete set of physics models (named a "physics list" in Geant4 jargon) that provides a reasonable compromise between simulation accuracy and speed for specific application domains.
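Returning to the reproducibility tests described earlier, the idea of replaying a single event from its saved random-generator status can be sketched with Python's standard `random` module. This is a toy model under stated assumptions, not the Geant4 mechanism: `simulate_event` is a hypothetical stand-in for a full event simulation, and the event number 731 is arbitrary.

```python
import random


def simulate_event(rng, n_steps=5):
    """Toy 'event': a short list of random step lengths (hypothetical)."""
    return [rng.expovariate(1.0) for _ in range(n_steps)]


rng = random.Random(2024)
saved_states = []
events = []
for _ in range(1000):
    # Record the generator status at the start of each event.
    saved_states.append(rng.getstate())
    events.append(simulate_event(rng))

# Replay event 731 on its own, without re-running events 0..730.
replay_rng = random.Random()
replay_rng.setstate(saved_states[731])
replayed = simulate_event(replay_rng)

is_reproducible = (replayed == events[731])
print("event 731 reproduced:", is_reproducible)
```

If the replayed event differs from the original one, something other than the saved generator status (for example, state left over from earlier events) is influencing the result, which is exactly what such a test is meant to reveal.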

Over the years, many tests have been developed and used by various developers and collaborators, for different purposes and targeting diverse application areas, with a spread of choices in terms of output formats and accessibility of the results. Over the last few years, a more organized effort has been underway to collect all Geant4 tests, and the experimental data used for physics validation, in a single, common testing suite, geant-val. The idea is to store the results of the tests in a database, and to offer a modern web interface that allows both developers and users of Geant4 to retrieve, display and compare these results at will. Recently, we have started a new effort, in collaboration with experimental groups, to port simulations of calorimeter test-beam set-ups and their data into geant-val. The motivation is twofold: on the one hand, we preserve these precious data for physics validation, in particular for electromagnetic and hadronic showers, which are critical for HEP experiments; on the other hand, we let the developers of physics models benefit from these data whenever they wish, for instance after changing a model or after re-tuning a model's parameters.

Figure 2: Longitudinal energy profiles reconstructed with Geant4 from charged-pion-induced showers in a highly-segmented silicon-tungsten calorimeter. Left: Geant4 predictions from several versions released from 2017 to 2020, used for regression testing of the FTFP_BERT Physics List, the set of physics processes that Geant4 recommends for HEP applications; they indicate a good improvement in the recent physics modelling. Right: Comparison of the FTFP_BERT Physics List prediction with that of the (experimental) FTFP_INCLXX Physics List, for which the data-to-MC agreement at the longitudinal shower maximum is largely restored.

The figure above is a good example of Geant4 validation on thick targets: it shows the longitudinal energy profile of charged-pion-induced showers in a highly-segmented silicon-tungsten calorimeter. The left plot illustrates the Geant4 predictions of several versions for the same set of physics processes; the right plot compares two different physics lists.
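A regression comparison of this kind can be reduced, in its simplest form, to a bin-by-bin comparison of two profiles. The sketch below is only an illustration of that idea: the function names, the 5% tolerance and the profile values are all hypothetical, and are not taken from the figure or from geant-val.

```python
def relative_differences(reference, candidate):
    """Per-bin (candidate - reference) / reference for two binned profiles.

    Assumes every reference bin is non-zero.
    """
    if len(reference) != len(candidate):
        raise ValueError("profiles must have the same binning")
    return [(c - r) / r for r, c in zip(reference, candidate)]


def flag_bins(reference, candidate, tolerance):
    """Indices of bins whose relative change exceeds the tolerance."""
    diffs = relative_differences(reference, candidate)
    return [i for i, d in enumerate(diffs) if abs(d) > tolerance]


# Hypothetical energy per layer (arbitrary units) from two toolkit versions.
profile_old = [1.0, 2.5, 4.0, 3.2, 2.0, 1.1]
profile_new = [1.0, 2.6, 4.4, 3.3, 2.0, 1.1]

changed = flag_bins(profile_old, profile_new, tolerance=0.05)
print("bins changed by more than 5%:", changed)
```

A real regression test would also account for the statistical uncertainty in each bin; the fixed tolerance used here is a deliberate simplification.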

In the present era of precision tests of the Standard Model - which will reach its climax during the HL-LHC - and of intense design studies for the next high-energy collider, simulations are going to play an increasingly important role, with stringent requirements on both of their main components, Monte Carlo event generators and detector simulations. In this context, Geant4 is required to remain a trustworthy, reliable and stable toolkit for detector simulations while, at the same time, undergoing major improvements and evolution in both physics accuracy and computational performance, in a constantly changing and heterogeneous hardware and software environment.

To meet these seemingly contradictory requirements, it is essential to rely on a rich and diverse testing and validation suite, while exploring different ideas and a variety of possible solutions, concentrating the effort on the most promising directions, and rapidly integrating into the toolkit the approaches that demonstrate clear benefits. The much bigger datasets expected from the LHC experiments during the high-luminosity phase require larger (i.e. with more events) and better (i.e. more similar to real events) simulation samples.

To address these demanding requirements, simulation experts are working in three directions. First, improving both the accuracy and speed of detailed, full-fidelity simulations by reviewing the algorithms and optimizing the code. Second, developing new and better fast, lower-fidelity simulations, such as the traditional parametrised-shower and shower-library techniques, as well as novel approaches based on machine learning. Third, exploring new computing architectures and accelerators, for instance by trying to offload to GPUs the detailed simulations of electromagnetic showers in calorimeters, which take a significant fraction of the simulation time.
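To give a flavour of what a parametrised shower can look like, the sketch below evaluates a widely used textbook form for the mean longitudinal profile of an electromagnetic shower, a gamma distribution in the depth t measured in radiation lengths: dE/dt = E0 b (bt)^(a-1) exp(-bt) / Γ(a). This is not necessarily the form used by any Geant4 fast-simulation model, and the shape parameters `a` and `b` below are hypothetical values chosen for illustration rather than fitted to any detector.

```python
import math


def longitudinal_profile(t, energy, a, b):
    """dE/dt at depth t (in radiation lengths) for a gamma-distribution profile."""
    return energy * b * (b * t) ** (a - 1.0) * math.exp(-b * t) / math.gamma(a)


def shower_maximum(a, b):
    """Depth at which dE/dt peaks; valid for a > 1."""
    return (a - 1.0) / b


energy = 20.0     # GeV, illustrative incident energy
a, b = 4.0, 0.5   # hypothetical shape parameters

# Integrate the profile numerically from 0.01 to 60 radiation lengths.
step = 0.01
depths = [i * step for i in range(1, 6001)]
deposited = sum(longitudinal_profile(t, energy, a, b) * step for t in depths)
t_max = shower_maximum(a, b)

print(f"shower maximum at t = {t_max:.1f} radiation lengths")
print(f"integrated energy = {deposited:.2f} GeV")
```

Evaluating such a closed-form profile is far cheaper than tracking every secondary particle, which is the trade-off between speed and fidelity that fast simulations exploit.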

Besides these physics challenges (i.e. more accurate simulations) and computational challenges (i.e. more simulated events produced for a given computing budget), we also face a major human challenge: many of the key Geant4 developers are approaching retirement, hence the need to find, train and secure stable positions for a new generation of young, motivated and talented simulation developers.